The rise of high-density rack configurations has transformed data centers, pushing the boundaries of performance and efficiency. These configurations, which can draw up to 50kW per rack, demand innovative solutions to deliver that power reliably and remove the heat it produces. Advanced cooling systems, such as liquid cooling, have emerged as essential tools for maintaining optimal temperatures. Efficient power distribution keeps operations uninterrupted, while real-time monitoring enhances reliability. By addressing these critical aspects, you can optimize your infrastructure to meet the growing demands of modern computing environments.

Key Takeaways

Implement advanced cooling solutions like liquid cooling and immersion cooling to effectively manage heat in high-density racks, ensuring optimal performance.

Adopt high-efficiency power systems to minimize energy loss and reduce operational costs, contributing to a more sustainable data center environment.

Utilize real-time monitoring tools to track power density and system performance, allowing for immediate corrective actions to prevent overheating and downtime.

Incorporate predictive analytics to forecast potential issues and schedule maintenance proactively, enhancing system reliability and longevity.

Consider redundancy models in power distribution to ensure uninterrupted operations, safeguarding against potential power failures.

Select energy-efficient hardware to lower energy consumption and heat generation, which supports both performance and sustainability goals.

Consolidate workloads in high-density racks to maximize computational power while minimizing physical space and energy requirements.

Understanding High-Density Rack Configurations

Characteristics of High-Density Rack Configurations

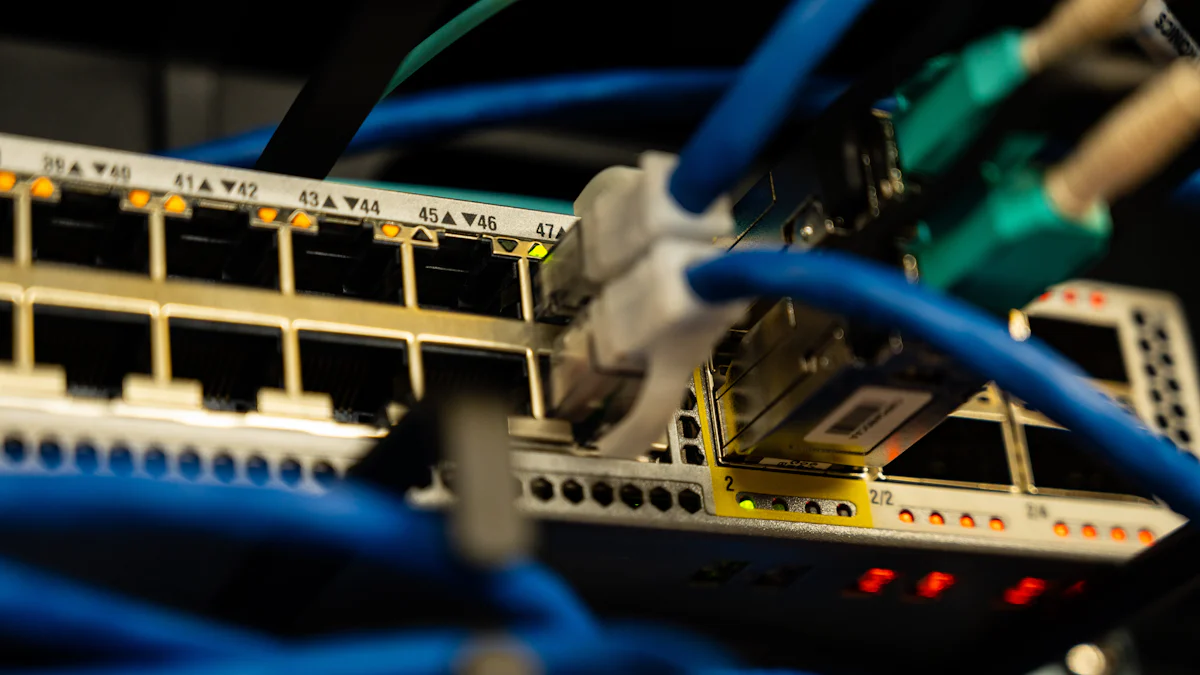

High-density systems have redefined how data centers operate, enabling them to handle unprecedented workloads. A high-density rack that supports loads of up to 50kW represents a significant leap in computational power. These racks are designed to accommodate advanced hardware, such as GPUs and CPUs, which generate substantial heat during operation. Their compact footprint lets you make the most of available floor space while achieving higher performance levels.

Modern high-density computing environments often integrate innovative cooling mechanisms to manage the thermal load effectively. Liquid cooling and immersion cooling are becoming standard solutions for maintaining optimal temperatures. Additionally, these racks require robust power distribution systems to ensure consistent energy delivery. By leveraging these features, you can enhance the efficiency and scalability of your data center architecture.

Challenges of Achieving 50kW Rack Density

Reaching a 50kW rack density presents several challenges that demand careful planning and execution. One of the primary obstacles is managing the immense heat generated by high-density systems. Traditional air cooling methods often fall short, making advanced cooling technologies essential. Without proper heat management, the risk of hardware failure increases significantly.

Power distribution is another critical challenge. High-density racks require efficient and reliable power systems to handle their energy demands. Any disruption in power delivery can lead to downtime, affecting the overall performance of your data center. Furthermore, the integration of these systems into existing data center architectures can be complex, requiring significant infrastructure upgrades.

Lastly, achieving such high rack densities necessitates precise monitoring and analytics. Real-time data on temperature, power usage, and system health is crucial for maintaining stability. Without these insights, optimizing performance and preventing failures becomes nearly impossible.

Infrastructure Requirements for High Power Density

To support a 50kW rack density, your infrastructure must meet specific requirements. Cooling systems play a pivotal role in managing the thermal load. Liquid cooling, direct-to-chip cooling, and immersion cooling are among the most effective solutions for dissipating heat in high-density configurations. These technologies ensure that your systems remain operational even under extreme conditions.

Power distribution systems must also be designed to handle the demands of high-density racks. High-efficiency power units, combined with redundancy models, provide the reliability needed for uninterrupted operations. These systems minimize energy loss and reduce the risk of power-related failures.

Additionally, your data center must incorporate advanced monitoring tools. These tools provide real-time insights into power density, heat levels, and system performance. Predictive analytics can further enhance your ability to address potential issues before they escalate. By investing in these infrastructure components, you can create a robust foundation for high-density computing environments.

Cooling Solutions for 50kW Racks

Efficient cooling is the backbone of high-density rack configurations, especially when managing 50kW power loads. Traditional air-based cooling methods often fail to meet the demands of such systems, making advanced cooling solutions essential. By adopting innovative cooling techniques, you can ensure optimal thermal management, improve system reliability, and maximize performance.

Liquid Cooling for High-Density Racks

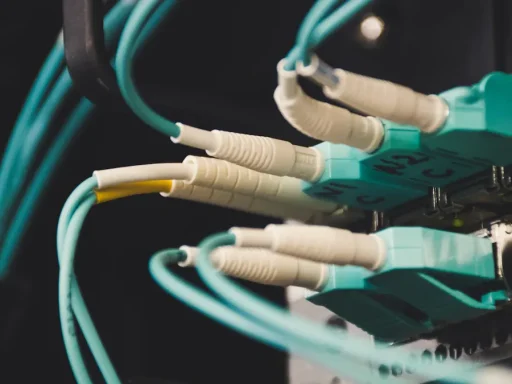

Liquid cooling has revolutionized thermal management in high-density data centers. Unlike air cooling, liquid cooling leverages the superior thermal transfer properties of liquids to dissipate heat more effectively. This method enables you to maintain stable operating temperatures even under extreme workloads.

Modern liquid cooling technologies include rear-door heat exchangers and cold plates. Rear-door heat exchangers remove heat from a rack's exhaust air before it re-enters the room, reducing the strain on your overall cooling infrastructure. Cold plates, a key component of direct-to-chip cooling, target specific heat-generating components like CPUs and GPUs, ensuring precise thermal control.

“Water is 24 times more efficient at cooling than air and can hold 3200 times more heat,” as industry experts highlight. This efficiency makes liquid cooling indispensable for high-density environments.
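To put the comparison in concrete terms, the sensible-heat balance Q = ṁ × c_p × ΔT gives the coolant flow needed to carry a full 50kW rack load. The sketch below is a back-of-the-envelope estimate, assuming textbook property values for water and air near room temperature and assumed temperature rises of 10 K for water and 12 K for air; it ignores pump and fan efficiency, pressure drop, and heat-exchanger approach temperatures.

```python
# Sensible heat balance: Q = m_dot * c_p * delta_T
# Property values are textbook approximations near room temperature (assumptions).
WATER_CP = 4186.0      # J/(kg*K)
WATER_DENSITY = 997.0  # kg/m^3
AIR_CP = 1005.0        # J/(kg*K)
AIR_DENSITY = 1.2      # kg/m^3
CFM_PER_M3_S = 2118.88  # 1 m^3/s expressed in cubic feet per minute


def mass_flow_kg_s(heat_w: float, cp: float, delta_t_k: float) -> float:
    """Mass flow needed to carry heat_w watts with a delta_t_k temperature rise."""
    return heat_w / (cp * delta_t_k)


def water_flow_l_per_min(heat_w: float, delta_t_k: float) -> float:
    """Volumetric water flow in litres per minute."""
    m3_per_s = mass_flow_kg_s(heat_w, WATER_CP, delta_t_k) / WATER_DENSITY
    return m3_per_s * 1000.0 * 60.0


def air_flow_cfm(heat_w: float, delta_t_k: float) -> float:
    """Volumetric airflow in cubic feet per minute."""
    m3_per_s = mass_flow_kg_s(heat_w, AIR_CP, delta_t_k) / AIR_DENSITY
    return m3_per_s * CFM_PER_M3_S


if __name__ == "__main__":
    rack_w = 50_000  # one fully loaded rack
    print(f"Water, 10 K rise: {water_flow_l_per_min(rack_w, 10):.0f} L/min")
    print(f"Air, 12 K rise:   {air_flow_cfm(rack_w, 12):.0f} CFM")
```

Under these assumptions, water needs roughly 72 L/min while air needs on the order of 7,300 CFM, which illustrates why air alone struggles at this density.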

Implementing liquid cooling requires careful planning. You must consider factors like space allocation, infrastructure costs, and system monitoring. However, the benefits—enhanced reliability, reduced energy consumption, and the ability to support higher power densities—far outweigh the challenges.

Direct-to-Chip Cooling for Enhanced Efficiency

Direct-to-chip cooling represents one of the most targeted and efficient cooling solutions available today. This method uses cold plates to transfer heat directly from the chip to a liquid coolant, bypassing the inefficiencies of traditional air cooling. By focusing on the primary heat sources, direct-to-chip cooling can remove up to 80% of a server’s heat.
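If direct-to-chip cooling captures up to 80% of a server's heat, the remainder still has to be removed by air. A minimal sketch of that heat budget, assuming the 80% upper bound applies to a whole 50kW rack:

```python
def split_heat_load(rack_kw: float, liquid_capture: float) -> tuple[float, float]:
    """Return (kW to the liquid loop, kW left for room air) for a capture fraction in [0, 1]."""
    if not 0.0 <= liquid_capture <= 1.0:
        raise ValueError("liquid_capture must be between 0 and 1")
    to_liquid = rack_kw * liquid_capture
    return to_liquid, rack_kw - to_liquid


# Assumption: the best-case 80% capture figure applies uniformly across the rack.
liquid_kw, air_kw = split_heat_load(50.0, 0.80)
print(f"Cold plates: {liquid_kw:.1f} kW, residual air load: {air_kw:.1f} kW")
```

Even in this best case, about 10 kW per rack remains, so the design still needs a plan for the residual air load.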

This approach is particularly beneficial for high-performance computing (HPC) and AI workloads, where heat generation is intense. Direct-to-chip cooling not only ensures thermal stability but also allows you to achieve higher computational densities without compromising system performance.

To integrate direct-to-chip cooling into your data center, you need to evaluate your existing infrastructure. Ensure compatibility with your hardware and plan for the installation of coolant distribution units (CDUs) to circulate and condition the coolant. With proper implementation, this method can significantly enhance your cooling efficiency.

Immersion Cooling for Extreme Power Density

Immersion cooling takes thermal management to the next level by submerging servers or components in a dielectric liquid. Because this liquid does not conduct electricity, it can contact live components without causing short circuits while efficiently dissipating heat. Immersion cooling is well suited to extreme power densities, making it a strong fit for 50kW racks.

This method offers several advantages. It eliminates the need for traditional air cooling systems, reduces noise levels, and minimizes energy consumption. Companies like KDDI have demonstrated the effectiveness of immersion cooling, achieving a 43% reduction in power consumption and a Power Usage Effectiveness (PUE) below 1.07.
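PUE is the ratio of total facility power to the power delivered to IT equipment, so a PUE of 1.07 means about 7% overhead on top of the IT load. The sketch below shows what that means for a single 50kW rack. The 1.5 baseline is an assumed figure for a conventional air-cooled facility, included only for comparison, and applying a facility-wide PUE to one rack is a simplification.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness = total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_kw


def overhead_kw(it_kw: float, pue_value: float) -> float:
    """Non-IT power (cooling, power conversion, lighting) implied by a given PUE."""
    return it_kw * (pue_value - 1.0)


it_load = 50.0  # one fully loaded rack, kW
for p in (1.5, 1.07):  # 1.5 is an assumed air-cooled baseline for comparison
    print(f"PUE {p}: {overhead_kw(it_load, p):.1f} kW of overhead")
```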

Immersion cooling can also ease some aspects of maintenance. The dielectric liquid protects components from dust and other contaminants, which can extend their lifespan. However, adopting this technology requires a shift in data center design and operational practices. You must invest in specialized tanks, coolants, and monitoring systems to fully leverage its benefits.

By exploring and implementing these advanced cooling solutions, you can optimize your high-density rack configurations for 50kW power loads. These methods not only address the thermal challenges of modern computing environments but also pave the way for sustainable and scalable data center operations.

Power Distribution Strategies for 50kW Rack Density

Efficient power distribution is as fundamental to high-density rack configurations as cooling. When managing 50kW rack density, you must adopt strategies that ensure consistent energy delivery while minimizing waste. By implementing advanced power systems and redundancy models, you can enhance reliability and optimize energy usage in your data centers.

High-Efficiency Power Systems for Data Centers

High-efficiency power systems are essential for supporting the energy demands of 50kW racks. These systems convert electrical input into usable output with minimal loss, reducing waste heat and improving overall efficiency. Power supplies certified under programs like 80 PLUS exemplify this approach: they draw less electricity for the same output, which directly lowers operational costs.
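The effect of power supply efficiency is easy to quantify: input power equals output power divided by efficiency, and the difference becomes heat. The efficiency values below are illustrative assumptions, not the thresholds of any particular 80 PLUS tier.

```python
def psu_input_and_loss(it_load_w: float, efficiency: float) -> tuple[float, float]:
    """Return (power drawn at the input, power lost as heat) for a given PSU efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    drawn = it_load_w / efficiency
    return drawn, drawn - it_load_w


# Efficiency values are illustrative assumptions, not figures from a specific 80 PLUS tier.
for eff in (0.90, 0.96):
    drawn, loss = psu_input_and_loss(50_000, eff)
    print(f"{eff:.0%} efficient: draws {drawn / 1000:.2f} kW, wastes {loss / 1000:.2f} kW as heat")
```

At 50kW of IT load, moving from 90% to 96% efficiency cuts conversion losses from about 5.6 kW to about 2.1 kW, heat the cooling system no longer has to remove.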

Modern data centers benefit from these systems by achieving better energy utilization. High-efficiency power units also contribute to sustainability by reducing the environmental impact of operations. For example, they lower cooling requirements because less waste heat is generated. This synergy between power efficiency and thermal management keeps your infrastructure both cost-effective and environmentally responsible.

To integrate high-efficiency power systems, you should evaluate your current setup and identify areas for improvement. Upgrading to energy-efficient hardware and optimizing power distribution pathways can significantly enhance performance. Additionally, monitoring tools can provide real-time insights into energy usage, enabling you to make informed decisions about system upgrades.

Redundancy Models to Ensure Reliability

Reliability is critical when operating high-density racks at 50kW. Any disruption in power delivery can lead to downtime, affecting the performance of your data centers. Redundancy models address this challenge by providing backup systems that ensure uninterrupted operations even during failures.

A common approach is N+1 redundancy, where one unit beyond the N units needed to carry the load is installed. If any single unit fails, the spare takes over without interrupting operations. For more robust setups, 2N redundancy fully duplicates the power path, so either side can carry the entire load on its own. While this model requires higher investment, it provides unparalleled assurance for mission-critical applications.
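The difference between N, N+1, and 2N comes down to whether the remaining capacity still covers the load after a failure. A minimal sketch, assuming a hypothetical 50kW rack fed by identical 20kW power units and ignoring derating and load-sharing effects:

```python
import math


def units_required(load_kw: float, unit_kw: float) -> int:
    """N: the minimum number of identical power units needed to carry the load."""
    return math.ceil(load_kw / unit_kw)


def survives_single_failure(load_kw: float, unit_kw: float, installed: int) -> bool:
    """True if the remaining units still carry the load after any one unit fails."""
    return (installed - 1) * unit_kw >= load_kw


load, unit = 50.0, 20.0          # hypothetical: 50 kW rack, 20 kW power units
n = units_required(load, unit)
print("N =", n)
print("N   survives a failure:", survives_single_failure(load, unit, n))
print("N+1 survives a failure:", survives_single_failure(load, unit, n + 1))
# 2N: two independent paths, each sized to carry the full load by itself.
print("2N installs", 2 * n, "units across two feeds")
```

Here N is 3, so a plain N configuration drops to 40 kW after one failure, while N+1 (4 units) still delivers 60 kW.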

Redundancy models also simplify maintenance. You can perform repairs or upgrades on one system without affecting the overall operation. This flexibility minimizes risks and enhances the long-term stability of your infrastructure. To implement redundancy effectively, you should assess your power requirements and choose a model that aligns with your operational goals.

By combining high-efficiency power systems with redundancy models, you can create a resilient and energy-efficient foundation for your high-density racks. These strategies not only optimize power usage but also ensure that your data centers remain reliable and scalable in the face of growing demands.

Energy Efficiency and Sustainability in High-Density Racks

Selecting Energy-Efficient Hardware for 50kW Racks

Choosing the right hardware is essential for achieving energy efficiency in high-density environments. Modern processors and GPUs are designed to deliver more performance per watt, even as their total power draw rises. By selecting energy-efficient hardware, you can reduce operational costs and minimize the environmental impact of your data centers.

High-efficiency power supplies play a critical role in supporting 50kW rack configurations. These systems convert electrical energy with minimal loss, ensuring that more power is directed toward operational needs. Certifications like 80 PLUS provide a benchmark for identifying hardware that meets stringent energy efficiency standards. Incorporating such components into your racks not only optimizes energy usage but also reduces heat generation, which eases the burden on cooling systems.

Additionally, advancements in server technology have introduced features like dynamic voltage and frequency scaling (DVFS) and power capping. These features let you adjust power consumption to match workload demands, further enhancing energy efficiency. For example, servers equipped with these technologies can run at lower power levels during periods of reduced activity, conserving energy while retaining full performance for peak periods.
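Power capping can be illustrated with a simple proportional policy: when total demand exceeds the rack budget, every server's cap is scaled down by the same factor. This is a hypothetical sketch; the server names and demands are invented, and production systems enforce caps through server management controllers and may use more sophisticated policies than uniform scaling.

```python
def apply_power_caps(demands_w: dict[str, float], budget_w: float) -> dict[str, float]:
    """Scale per-server power caps proportionally so their sum stays within budget_w.

    Hypothetical policy for illustration only; it does not model how caps are
    actually enforced in server firmware.
    """
    total = sum(demands_w.values())
    if total <= budget_w:
        return dict(demands_w)  # under budget: no capping needed
    scale = budget_w / total
    return {name: watts * scale for name, watts in demands_w.items()}


# Hypothetical servers with 52 kW of combined demand in a 50 kW rack.
demands = {"gpu-node-1": 12_000, "gpu-node-2": 12_000, "gpu-node-3": 12_000,
           "gpu-node-4": 12_000, "storage-1": 4_000}
caps = apply_power_caps(demands, 50_000)
print({name: round(watts) for name, watts in caps.items()})
print("capped total:", round(sum(caps.values())))
```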

When selecting hardware, consider the long-term benefits of energy-efficient options. While the initial investment may be higher, the savings in energy costs and the reduced need for extensive cooling infrastructure make it a worthwhile choice. By prioritizing energy-efficient hardware, you can create a sustainable foundation for your high-density racks.

Operational Practices to Enhance Sustainability

Sustainability in high-density data centers goes beyond hardware selection. Operational practices play a significant role in reducing energy consumption and promoting environmental responsibility. Implementing best practices can help you optimize resource utilization and minimize waste.

Start by adopting intelligent cooling strategies. Advanced cooling methods, such as liquid cooling, are more efficient than traditional air-based systems. Liquid cooling not only manages the heat generated by 50kW racks effectively but also reduces overall energy consumption. For instance, liquid cooling systems can achieve up to 30% energy savings compared to conventional methods, making them a sustainable choice for high-density environments.

Monitoring and analytics tools are indispensable for maintaining sustainability. Real-time monitoring provides insights into power usage, temperature levels, and system performance. These insights enable you to identify inefficiencies and take corrective actions promptly. Predictive analytics can further enhance sustainability by forecasting potential issues and allowing you to address them before they escalate.

Another effective practice involves consolidating workloads. High-density racks allow you to pack more computational power into a smaller space, reducing the need for additional infrastructure. This consolidation not only saves floor space but also lowers energy requirements. For example, transitioning from low-density to high-density configurations can reduce the number of racks needed, leading to significant cost and energy savings.
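The space side of consolidation is straightforward arithmetic. The sketch below compares rack counts for a hypothetical 1 MW IT load at an assumed legacy density of 8kW per rack versus 50kW per rack; actual energy savings depend on the cooling and power infrastructure and are not modeled here.

```python
import math


def racks_needed(it_load_kw: float, kw_per_rack: float) -> int:
    """Number of racks required to host a given IT load at a given per-rack density."""
    return math.ceil(it_load_kw / kw_per_rack)


it_load_kw = 1_000  # hypothetical 1 MW deployment
for density in (8, 50):  # 8 kW is an assumed legacy air-cooled density
    print(f"{density:>2} kW/rack -> {racks_needed(it_load_kw, density)} racks")
```

At these assumed densities, the same load fits in 20 racks instead of 125.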

Finally, consider renewable energy sources to power your data centers. Solar panels, wind turbines, and other renewable options can offset the environmental impact of high-density operations. By integrating renewable energy into your power supply, you can further enhance the sustainability of your infrastructure.

By combining energy-efficient hardware with sustainable operational practices, you can optimize your high-density racks for both performance and environmental responsibility. These efforts not only align with industry trends but also position your data centers as leaders in sustainability.

Monitoring and Analytics for High-Density Rack Configurations

Monitoring and analytics are essential for optimizing high-density rack configurations. They provide you with the tools to ensure operational stability, maximize power density, and maintain system health. By leveraging real-time monitoring and predictive analytics, you can proactively address potential issues and enhance the efficiency of your data centers.

Real-Time Monitoring for Power Density Optimization

Real-time monitoring allows you to track critical metrics like power density, temperature, and energy consumption. These insights help you identify inefficiencies and take immediate corrective actions. For high-density racks operating at 50kW, this level of visibility is crucial to prevent overheating and ensure consistent performance.

Monitoring platforms such as Prometheus and Dynatrace can track power density in real time when fed with metrics from PDUs and environmental sensors. Their dashboards display live data, enabling you to make informed decisions quickly. For example, if a cooling system underperforms, alerting rules can notify you before the issue escalates into a failure.
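The core of such an alert is simple threshold logic on power readings. The sketch below computes rack power from per-phase PDU readings and classifies it against the 50kW budget. The reading format, power factor values, and the 80% warning threshold are assumptions; in a real deployment this logic would typically live in the monitoring platform's alerting rules rather than in standalone code.

```python
def rack_power_kw(phases: list[tuple[float, float, float]]) -> float:
    """Total real power in kW from per-phase (line-to-neutral volts, amps, power factor) readings."""
    return sum(volts * amps * pf for volts, amps, pf in phases) / 1000.0


def classify(power_kw: float, budget_kw: float = 50.0, warn_ratio: float = 0.8) -> str:
    """Return 'ok', 'warning', or 'critical' relative to the rack power budget."""
    if power_kw >= budget_kw:
        return "critical"
    if power_kw >= warn_ratio * budget_kw:
        return "warning"
    return "ok"


# Hypothetical readings from a three-phase rack PDU.
readings = [(230.0, 65.0, 0.98), (230.0, 62.0, 0.98), (230.0, 60.0, 0.97)]
kw = rack_power_kw(readings)
print(f"{kw:.1f} kW -> {classify(kw)}")
```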

“Proactive monitoring reduces downtime and improves system availability,” says a Monitoring and Observability Specialist. This approach ensures that your data centers remain reliable even under extreme workloads.

To optimize power density, you should also monitor the performance of your cooling systems. Liquid cooling and immersion cooling, commonly used in high-density environments, require precise temperature control. Real-time data helps you maintain optimal cooling efficiency, which directly impacts the stability of your infrastructure.

Implementing real-time monitoring involves integrating sensors and software into your data center management strategy. These systems collect and analyze data continuously, giving you a comprehensive view of your operations. By adopting this technology, you can enhance the reliability and scalability of your high-density racks.

Predictive Analytics for System Health and Maintenance

Predictive analytics takes monitoring a step further by using historical data to forecast potential issues. This technology helps you anticipate failures and schedule maintenance proactively, reducing the risk of unexpected downtime. For high-density configurations, where power density reaches 50kW, predictive analytics is invaluable for maintaining system health.

Platforms like the ELK Stack can analyze trends and identify anomalies, and Elastic offers machine-learning-based anomaly detection for this purpose. Nagios, by contrast, is primarily a threshold-based monitoring and alerting tool. For instance, a gradual increase in power consumption might signal an impending hardware failure. Addressing such issues early prevents costly disruptions.
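Machine-learning anomaly detection is beyond a short example, but the simplest version of the idea, flagging a sustained upward trend in power draw, can be sketched with an ordinary least-squares slope. This is a plain linear-trend check swapped in for illustration, not the algorithm any particular platform uses; the data and the 0.005 kW/day threshold are hypothetical.

```python
def slope_per_sample(values: list[float]) -> float:
    """Ordinary least-squares slope of values against their sample index."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(values))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


def drifting_upward(values: list[float], max_slope: float) -> bool:
    """Flag a sustained upward trend steeper than max_slope units per sample."""
    return slope_per_sample(values) > max_slope


# Hypothetical daily average power draw (kW) of one server, creeping upward.
daily_kw = [1.20, 1.21, 1.21, 1.23, 1.24, 1.26, 1.27, 1.29]
print(f"slope: {slope_per_sample(daily_kw):.4f} kW/day")
print("investigate:", drifting_upward(daily_kw, max_slope=0.005))
```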

Predictive analytics also enhances the efficiency of your cooling systems. By analyzing data from sensors, these tools can predict when cooling components need maintenance or replacement. This foresight ensures that your systems operate at peak performance, even in demanding environments.

“Monitoring the monitoring tools themselves is equally important,” emphasizes the Monitoring and Observability Specialist. Ensuring the accuracy and reliability of your analytics platforms guarantees that you receive actionable insights.

To implement predictive analytics, you need to invest in robust software and train your team to interpret the data effectively. Combining this approach with real-time monitoring creates a comprehensive strategy for managing high-density racks. This dual approach not only optimizes power density but also extends the lifespan of your infrastructure.

By integrating real-time monitoring and predictive analytics into your data center management practices, you can achieve unparalleled efficiency and reliability. These technologies empower you to address challenges proactively, ensuring that your high-density racks perform optimally under any conditions.

Optimizing high-density rack configurations for 50kW power loads is essential for meeting the demands of modern data centers. By adopting innovative cooling methods, you can effectively manage heat and ensure system stability. Efficient power distribution strategies enhance reliability and reduce operational costs. Advanced monitoring tools provide real-time insights, enabling you to maintain peak performance. Proactive planning and the integration of cutting-edge solutions position your infrastructure for future scalability. These efforts not only improve operational efficiency but also support sustainability, ensuring your data centers remain competitive in a rapidly evolving industry.

FAQ

How can you support 100+ kW per rack densities?

Supporting 100+ kW per rack densities requires innovative strategies to address the challenges posed by such extreme power loads. These configurations are becoming increasingly relevant with the rise of AI workloads, which demand high computational power. Hyperscalers and colocation data centers are leading the way in accommodating these densities. To achieve this, you need to focus on advanced cooling systems, such as liquid cooling or immersion cooling, to manage the immense heat generated. Additionally, robust power distribution systems and enhanced security measures are essential for maintaining reliability and ensuring seamless interconnection with edge data centers.

Pro Tip: Start by evaluating your current infrastructure and identifying areas where upgrades are necessary to handle these extreme densities effectively.

What are the best ways to optimize power infrastructure costs for densities exceeding 35kW per rack?

To optimize power infrastructure costs while maintaining reliability and safety for densities exceeding 35kW per rack, you should consider integrating liquid cooling systems. Studies, such as those conducted by engineering teams at Schneider Electric, have shown that liquid cooling can significantly reduce power distribution costs in IT rooms. This approach minimizes energy loss and enhances efficiency, making it a cost-effective solution for high-density environments. Focus on designing your power systems to align with international standards, such as IEC, to ensure compatibility and safety.

Key Takeaway: Liquid cooling not only optimizes costs but also improves system reliability, making it a valuable investment for high-density data centers.

Why is liquid cooling preferred for high-density racks?

Liquid cooling is preferred for high-density racks because it offers superior thermal management compared to traditional air cooling. Liquids have higher thermal conductivity and heat capacity than air, allowing them to absorb and carry away heat more efficiently. This method is particularly effective for managing the heat generated by 50kW or higher power loads. By using technologies like direct-to-chip cooling or rear-door heat exchangers, you can maintain stable operating temperatures and improve system performance.

“Water is 24 times more efficient at cooling than air,” as industry experts emphasize, making liquid cooling indispensable for modern data centers.

How does immersion cooling benefit extreme power densities?

Immersion cooling benefits extreme power densities by submerging servers in a dielectric liquid, which acts as both a coolant and an insulator. This method eliminates the need for traditional air cooling systems and reduces energy consumption. Immersion cooling is ideal for configurations exceeding 50kW per rack, as it efficiently manages heat while minimizing noise and maintenance requirements. Companies adopting this technology have reported significant energy savings and improved system reliability.

Did You Know? In KDDI's tests, immersion cooling achieved a Power Usage Effectiveness (PUE) below 1.07, making it one of the most sustainable cooling solutions available.

What role does real-time monitoring play in high-density rack configurations?

Real-time monitoring plays a critical role in optimizing high-density rack configurations. It provides you with actionable insights into power usage, temperature levels, and system performance. By tracking these metrics, you can identify inefficiencies and address potential issues before they escalate. For racks operating at 50kW or higher, real-time monitoring ensures consistent performance and prevents overheating.

Expert Insight: Proactive monitoring reduces downtime and enhances system availability, making it an essential tool for managing high-density data centers.

How can predictive analytics improve system health in high-density environments?

Predictive analytics improves system health by using historical data to forecast potential failures. This technology helps you schedule maintenance proactively, reducing the risk of unexpected downtime. For high-density environments, predictive analytics can identify patterns in power consumption or cooling performance, allowing you to address issues before they impact operations. By combining predictive analytics with real-time monitoring, you can create a comprehensive strategy for maintaining system reliability.

Tip: Invest in robust analytics tools and train your team to interpret data effectively for maximum benefits.

What are the key considerations for integrating renewable energy into high-density data centers?

Integrating renewable energy into high-density data centers involves evaluating your power requirements and identifying suitable renewable sources, such as solar or wind energy. Renewable energy can offset the environmental impact of high-density operations and enhance sustainability. To maximize efficiency, you should also consider energy storage solutions, like batteries, to ensure a consistent power supply during periods of low renewable energy generation.
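A first-pass storage estimate divides the energy the load needs by the usable fraction of the battery and its discharge efficiency. The sketch below is a simplified, hypothetical sizing exercise; the 90% depth of discharge and 95% discharge efficiency defaults are assumptions, and real sizing must also account for aging, temperature, and charge availability.

```python
def battery_capacity_kwh(load_kw: float, hours: float,
                         depth_of_discharge: float = 0.9,
                         discharge_efficiency: float = 0.95) -> float:
    """Nameplate battery capacity needed to carry load_kw for the given number of hours.

    depth_of_discharge: usable fraction of nameplate capacity (assumed default).
    discharge_efficiency: fraction of stored energy delivered to the load (assumed default).
    """
    if not (0 < depth_of_discharge <= 1 and 0 < discharge_efficiency <= 1):
        raise ValueError("fractions must be in (0, 1]")
    return load_kw * hours / (depth_of_discharge * discharge_efficiency)


# Hypothetical: ride one 50 kW rack through 4 hours of low renewable output.
print(f"{battery_capacity_kwh(50.0, 4.0):.1f} kWh nameplate")
```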

Sustainability Tip: Combining renewable energy with energy-efficient hardware and cooling systems creates a greener and more cost-effective data center.

How can you ensure reliability in power distribution for 50kW racks?

Ensuring reliability in power distribution for 50kW racks requires implementing redundancy models. N+1 redundancy provides an additional power unit to support the primary system, while 2N redundancy offers complete duplication for maximum reliability. These models prevent downtime by seamlessly transferring operations to backup systems during failures. Regular maintenance and real-time monitoring further enhance the reliability of your power distribution infrastructure.

Best Practice: Choose a redundancy model that aligns with your operational goals and budget to maintain uninterrupted operations.

What are the advantages of consolidating workloads in high-density racks?

Consolidating workloads in high-density racks allows you to maximize computational power while minimizing physical space requirements. This approach reduces the number of racks needed, lowering energy consumption and operational costs. High-density configurations also simplify infrastructure management, making it easier to monitor and maintain systems. By consolidating workloads, you can achieve greater efficiency and scalability in your data center operations.

Efficiency Insight: Transitioning from low-density to high-density configurations can lead to significant cost and energy savings.

How do advanced cooling methods contribute to sustainability in data centers?

Advanced cooling methods, such as liquid cooling and immersion cooling, contribute to sustainability by reducing energy consumption and improving thermal efficiency. These systems manage the heat generated by high-density racks more effectively than traditional air cooling, resulting in lower operational costs and a smaller carbon footprint. By adopting these technologies, you can align your data center operations with environmental goals while maintaining high performance.

Green Tip: Implementing advanced cooling solutions is a step toward creating sustainable and future-ready data centers.

In the realm of artificial intelligence, understanding the financial implications of infrastructure investments has become indispensable. A well-executed TCO analysis empowers organizations to make informed decisions by evaluating the long-term costs and benefits of their AI systems. This approach ensures that businesses balance immediate expenses with sustainable returns. For instance, companies that optimize their AI cost analysis often achieve up to 3X higher ROI compared to those with fragmented strategies. By adopting a structured evaluation, organizations can unlock greater efficiency, reduce hidden costs, and maximize the value of their artificial intelligence initiatives.

Key Takeaways

Understanding Total Cost of Ownership (TCO) is essential for making informed financial decisions regarding AI infrastructure, as it encompasses all costs over the system’s lifecycle.

A structured TCO analysis can help organizations avoid hidden costs and optimize their AI investments, potentially achieving up to 3X higher ROI compared to fragmented strategies.

When evaluating deployment models, consider the unique cost structures of on-premises, cloud-based, and SaaS solutions to align with your organization’s budget and operational needs.

Regularly revisiting and updating TCO calculations ensures that businesses remain prepared for unforeseen financial demands and can adapt to changing project requirements.

Implementing cost optimization strategies, such as efficient resource allocation and leveraging hybrid models, can significantly reduce the total cost of ownership for AI systems.

Negotiating vendor contracts effectively is crucial for minimizing costs and ensuring long-term value, so businesses should focus on transparency and flexibility during negotiations.

Understanding TCO and Its Importance

What Is TCO?

Total Cost of Ownership (TCO) refers to the comprehensive assessment of all costs associated with acquiring, operating, and maintaining a system or infrastructure over its entire lifecycle. In the context of artificial intelligence, TCO encompasses expenses such as hardware procurement, software licensing, labor, data acquisition, and ongoing operational costs. This holistic approach ensures that organizations account for both visible and hidden costs when planning AI deployments.

Unlike simple upfront cost evaluations, TCO calculations provide a detailed view of long-term financial commitments. For example, deploying AI systems often involves recurring expenses like cloud service subscriptions or hardware upgrades. By calculating TCO, businesses can avoid underestimating these costs and ensure sustainable investments. Tools like TCO calculators, which evaluate deployment and running costs, help organizations estimate expenses such as cost per request and labor requirements. These tools also highlight potential areas for cost optimization.
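A basic TCO calculation adds upfront costs to recurring costs over the system's lifecycle and can then be expressed per request, as the calculators mentioned above do. The sketch below is a simplified, undiscounted model; the cost categories and all figures are hypothetical, and a fuller analysis would discount future costs and include items such as data acquisition and hardware refresh.

```python
from dataclasses import dataclass


@dataclass
class TcoInputs:
    """Hypothetical cost inputs for one AI deployment (all figures in USD)."""
    upfront: float            # hardware purchase, installation, integration
    annual_operating: float   # power, cooling, licences, cloud fees
    annual_labor: float       # engineering and operations staff time
    years: int                # planned lifecycle
    requests_per_year: float  # expected inference volume


def total_cost_of_ownership(t: TcoInputs) -> float:
    """Undiscounted TCO: upfront cost plus recurring costs over the lifecycle."""
    return t.upfront + t.years * (t.annual_operating + t.annual_labor)


def cost_per_request(t: TcoInputs) -> float:
    """Lifecycle TCO spread across all requests served during the lifecycle."""
    return total_cost_of_ownership(t) / (t.years * t.requests_per_year)


example = TcoInputs(upfront=400_000, annual_operating=120_000,
                    annual_labor=200_000, years=3, requests_per_year=50_000_000)
print(f"TCO: ${total_cost_of_ownership(example):,.0f}")
print(f"Cost per request: ${cost_per_request(example):.4f}")
```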

A Google Cloud article emphasizes the importance of modeling TCO for each AI use case to optimize costs and improve efficiency. This insight underscores the need for businesses to tailor their TCO analysis to specific AI applications, ensuring accurate financial planning.

Why Is TCO Critical for AI Infrastructure?

TCO analysis plays a pivotal role in guiding organizations toward informed decision-making for AI infrastructure. Artificial intelligence systems, particularly those involving large-scale models, demand significant resources. Without a clear understanding of total cost of ownership, businesses risk overspending or underutilizing their investments. A structured TCO analysis helps mitigate these risks by providing a roadmap for cost management.

AI cost analysis becomes especially critical when comparing deployment models such as on-premises infrastructure, cloud-based solutions, or SaaS platforms. Each model presents unique cost structures, and TCO calculations enable businesses to weigh these options effectively. For instance, cloud-based AI services may reduce upfront costs but introduce variable operational expenses. On the other hand, on-premises setups require substantial initial investments but offer greater control over long-term costs.
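The trade-off between low upfront cost with variable fees and high upfront cost with lower running costs can be framed as a break-even question. The sketch below uses hypothetical figures and assumes constant monthly costs with no discounting, hardware refresh, or change in utilization, all of which a real comparison would need to model.

```python
from typing import Optional


def break_even_month(horizon_months: int, onprem_upfront: float,
                     onprem_monthly: float, cloud_monthly: float) -> Optional[int]:
    """First month in which cumulative on-prem cost is at or below cumulative cloud cost.

    Returns None if on-prem never catches up within the horizon. Costs are
    undiscounted and constant per month (a deliberate simplification).
    """
    for month in range(1, horizon_months + 1):
        onprem_total = onprem_upfront + month * onprem_monthly
        cloud_total = month * cloud_monthly
        if onprem_total <= cloud_total:
            return month
    return None


# Hypothetical figures for one inference cluster (USD).
month = break_even_month(horizon_months=60, onprem_upfront=600_000,
                         onprem_monthly=15_000, cloud_monthly=40_000)
print("On-prem breaks even in month:", month)
```

With these figures, on-prem catches up after 24 months; if the cloud's monthly cost were below the on-prem running cost, the function would return None for any horizon.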

Hidden costs, such as workforce re-engagement or hardware maintenance, often go unnoticed during initial budgeting. By incorporating these factors into TCO analysis, organizations can identify potential financial pitfalls. This proactive approach ensures that AI deployments remain financially viable over time.

In industries with strict data sovereignty requirements, TCO analysis becomes even more critical. Businesses must balance compliance needs with cost considerations, particularly when evaluating cloud-based AI solutions. A thorough understanding of TCO allows organizations to align their financial strategies with operational goals, ensuring both efficiency and compliance.

Breaking Down the Key Cost Components

Infrastructure Costs

Infrastructure costs form the foundation of any AI system. These expenses include hardware, software, and networking components required to build and maintain AI infrastructure. High-performance computing resources, such as GPUs and TPUs, are essential for training and deploying AI models. The cost of these components can vary significantly based on the scale and complexity of the project. For instance, small-scale AI projects may require investments ranging from $50,000 to $500,000, while large-scale initiatives can exceed $5 million.

Cloud-based solutions often provide scalable infrastructure options, reducing the need for substantial upfront investments. However, they introduce recurring operational expenses, such as subscription fees and data transfer costs. On-premises setups, on the other hand, demand significant initial capital but offer greater control over long-term costs. Businesses must carefully evaluate these options through detailed TCO calculations to determine the most cost-effective approach for their specific needs.

Additionally, infrastructure maintenance plays a critical role in sustaining AI systems. Regular hardware upgrades, software updates, and system monitoring ensure optimal performance. Neglecting these aspects can lead to inefficiencies and increased operational costs over time.

Labor Costs

Labor costs represent a significant portion of the total cost of ownership for AI infrastructure. Developing, deploying, and maintaining AI systems require skilled professionals, including data scientists, machine learning engineers, and IT specialists. Salaries for these roles can vary widely depending on expertise and geographic location. For example, hiring a team of experienced AI professionals for a complex project may cost hundreds of thousands of dollars annually.

Beyond salaries, businesses must consider the cost of training and upskilling their workforce. AI technologies evolve rapidly, necessitating continuous learning to stay updated with the latest advancements. Investing in employee development ensures that teams remain proficient in managing AI systems, ultimately reducing the risk of errors and inefficiencies.

Outsourcing certain tasks, such as data annotation or model development, can help manage labor costs. However, organizations must weigh the benefits of outsourcing against potential challenges, such as quality control and data security concerns. Calculating TCO accurately requires factoring in all labor-related expenses, including hidden costs like recruitment and onboarding.

Data Costs

Data serves as the backbone of AI systems, making data-related costs a critical component of TCO analysis. These costs encompass data collection, storage, preprocessing, and management. For instance, acquiring high-quality datasets often involves expenses for data licensing, annotation, and cleaning. Complex AI projects may require vast amounts of data, driving up these costs significantly.

Storage and management of large datasets also contribute to data costs. Cloud storage solutions offer scalability but come with recurring fees based on usage. On-premises storage provides greater control but demands substantial initial investments in hardware and maintenance. Organizations must evaluate these options carefully to optimize data-related expenses.

Preprocessing and ensuring data quality are equally important. Poor-quality data can lead to inaccurate model predictions, resulting in wasted resources and additional costs for retraining. Businesses should prioritize robust data management practices to minimize these risks and enhance the efficiency of their AI systems.

“AI is not possible without massive amounts of data, leading to various data-related costs that should be factored into the potential cost of an AI system.” This insight highlights the importance of incorporating all data-related expenses into TCO calculations to ensure comprehensive financial planning.

Operational Costs

Operational costs represent a significant portion of the total cost of ownership for AI infrastructure. These expenses arise from the day-to-day functioning and upkeep of AI systems, ensuring their reliability and efficiency over time. Businesses must account for several key areas when evaluating operational costs to maintain financial sustainability.

One major contributor to operational costs is energy consumption. AI systems, particularly those involving high-performance computing, require substantial power to operate. Training large-scale models or running inference tasks can lead to high electricity bills, especially in on-premises setups. Organizations should monitor energy usage closely and explore energy-efficient hardware or cooling solutions to mitigate these expenses.
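You can make a rough estimate of the annual energy bill from three inputs: the hardware's power draw, its average utilization, and the facility's power usage effectiveness (PUE, total facility energy divided by IT energy). PUE folds in cooling and other overhead. The sketch assumes a constant average load and a flat electricity price. The function name and sample values are hypothetical.

```python
HOURS_PER_YEAR = 8760

def annual_energy_cost(it_power_kw, utilization, pue, price_per_kwh, hours=HOURS_PER_YEAR):
    """Estimate yearly electricity cost for AI hardware.

    it_power_kw   -- peak IT power of the hardware in kW
    utilization   -- average fraction of peak power actually drawn (0..1)
    pue           -- facility energy / IT energy; the excess covers cooling, power conversion, etc.
    price_per_kwh -- flat electricity tariff
    """
    it_energy_kwh = it_power_kw * utilization * hours
    facility_energy_kwh = it_energy_kwh * pue
    return facility_energy_kwh * price_per_kwh

# Hypothetical: a 40 kW rack at 70% average draw, PUE 1.5, 0.12 per kWh.
print(f"{annual_energy_cost(40, 0.7, 1.5, 0.12):,.0f}")
```

Because the bill scales linearly with PUE, more efficient cooling feeds directly into lower operational costs.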

System maintenance also plays a critical role in operational costs. Regular updates to software, firmware, and security protocols ensure that AI systems remain functional and secure. Neglecting maintenance can result in system downtime, which disrupts operations and incurs additional costs. Businesses should allocate resources for routine inspections and timely upgrades to avoid such issues.

Another factor to consider is the cost of managing data pipelines. AI systems rely on continuous data ingestion, preprocessing, and storage. Operational costs increase as data volumes grow, particularly for projects requiring real-time data processing. Cloud-based solutions offer scalability but introduce recurring fees tied to data transfer and storage. On-premises systems demand investments in physical storage and IT personnel to manage these pipelines effectively.

Organizations must also address the costs associated with monitoring and troubleshooting AI systems. Deploying AI models in production environments often requires constant oversight to ensure optimal performance. Issues such as model drift or hardware failures can arise, necessitating immediate intervention. Businesses should invest in robust monitoring tools and skilled personnel to minimize disruptions and maintain system efficiency.
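A common way to monitor model drift is to compare the distribution of a model input or score in production against a training-time baseline. One widely used metric for this is the population stability index (PSI). The sketch below computes PSI from pre-binned counts. The alert thresholds in the comments are common rules of thumb rather than a standard, and the sample counts are hypothetical.

```python
import math

def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI between a baseline and a current distribution binned the same way.

    PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%).
    Rule of thumb (a convention, not a standard): < 0.1 stable,
    0.1-0.25 moderate shift, > 0.25 significant drift worth investigating.
    """
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both distributions must use the same bins")
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    psi = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)   # floor empty bins to avoid log(0)
        a_pct = max(a / a_total, eps)
        psi += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return psi

baseline = [120, 300, 380, 200]   # hypothetical training-time counts per score bucket
current = [40, 150, 410, 400]     # hypothetical production counts, same buckets
psi = population_stability_index(baseline, current)
print(f"PSI = {psi:.3f}:", "investigate drift" if psi > 0.25 else "no action")
```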

“Operational costs often escalate due to hidden factors like energy consumption and system downtime. Proactive management of these elements ensures long-term cost efficiency.” This insight highlights the importance of a comprehensive approach to operational cost analysis.

To optimize operational costs, businesses can adopt strategies such as automating routine tasks, leveraging predictive maintenance tools, and implementing hybrid infrastructure models. These measures reduce manual intervention, enhance system reliability, and balance cost efficiency with performance.

Comparing Deployment Models for AI Infrastructure

Selecting the right deployment model for AI infrastructure is a critical decision that impacts both cost and performance. Businesses must evaluate their unique requirements, including scalability, control, and budget, to determine the most suitable option. This section explores three primary deployment models: on-premises, cloud-based, and SaaS (Software as a Service), highlighting their distinct characteristics and implications for total cost of ownership (TCO).

On-Premises AI Infrastructure

On-premises AI infrastructure provides organizations with complete control over their hardware and software resources. This model involves deploying AI systems within a company’s physical data centers, utilizing specialized hardware such as GPUs, TPUs, or ASICs. While this approach offers enhanced security and compliance, it demands significant upfront investments in equipment and facilities.

The TCO for on-premises infrastructure includes not only the initial hardware costs but also ongoing expenses for maintenance, energy consumption, and system upgrades. For instance, high-performance computing resources require regular updates to remain efficient, which can increase operational costs over time. Additionally, businesses must allocate resources for IT personnel to manage and monitor these systems effectively.

Despite the higher initial costs, on-premises infrastructure can be advantageous for organizations with strict data sovereignty requirements or those handling sensitive information. By maintaining full control over their systems, businesses can ensure compliance with industry regulations while optimizing performance for specific AI workloads.

Cloud-Based AI Infrastructure

Cloud-based AI infrastructure has gained popularity due to its flexibility and scalability. This model allows businesses to access computing resources through cloud service providers, eliminating the need for substantial upfront investments. Companies can scale their resources up or down based on demand, making it an attractive option for projects with variable workloads.

The TCO for cloud-based infrastructure primarily consists of recurring operational expenses, such as subscription fees and data transfer costs. Cloud TCO models help organizations estimate these expenses by analyzing factors like usage patterns and resource allocation. For example, inference tasks often rely on expensive GPU-based compute resources, which can drive up costs if not managed efficiently.

Cloud-based solutions also simplify AI deployment by providing preconfigured environments and tools. However, businesses must consider potential challenges, such as data security and vendor lock-in. A thorough AI cost analysis helps organizations weigh the benefits of scalability against the risks associated with cloud-based systems.

“Comprehensive financial planning is essential for cloud-based AI projects to prevent unexpected expenses and ensure accurate budgeting.” This insight underscores the importance of evaluating all cost components when adopting cloud-based infrastructure.

SaaS (Software as a Service) for AI

SaaS for AI offers a streamlined approach to deploying AI applications. This model enables businesses to access AI tools and services through subscription-based platforms, eliminating the need for extensive infrastructure investments. SaaS solutions are particularly beneficial for organizations seeking to implement AI quickly without the complexities of managing hardware and software.

The TCO for SaaS models includes subscription fees, which often cover software updates, maintenance, and support. While these costs are predictable, businesses must assess the scalability and customization options offered by SaaS providers. Limited flexibility may pose challenges for organizations with unique requirements or large-scale AI projects.

SaaS platforms excel in reducing the time and effort required for AI deployment. They provide ready-to-use solutions that cater to specific use cases, such as natural language processing or image recognition. However, businesses should evaluate the long-term costs and potential limitations of relying on third-party providers for critical AI functions.

“SaaS solutions simplify AI adoption but require careful consideration of scalability and customization needs.” This perspective highlights the importance of aligning SaaS offerings with organizational goals to maximize value.

Strategies for Cost Optimization in TCO Analysis

Optimizing Infrastructure Usage

Efficient use of infrastructure plays a pivotal role in reducing the total cost of ownership for AI systems. Organizations must evaluate their resource allocation to ensure optimal performance without unnecessary expenditure. For instance, businesses can adopt workload scheduling techniques to maximize the utilization of high-performance computing resources like GPUs and TPUs. Companies can minimize idle time and reduce energy costs in two ways: by queuing jobs to fill otherwise idle capacity, and by shifting deferrable work, such as batch training, to periods of lower demand and cheaper energy.
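The sketch below is a simplified illustration of shifting deferrable work into low-cost periods. It greedily fills the cheapest hours first, assuming each job can be split into independent one-hour GPU chunks and that hourly energy prices are known in advance. Under those assumptions, cheapest-first placement minimizes energy cost. Real schedulers also have to handle deadlines, job dependencies, and preemption. The function and the sample prices are hypothetical.

```python
def schedule_jobs(job_hours, hourly_price, slots_per_hour):
    """Greedily place deferrable GPU job-hours into the cheapest hours first.

    job_hours      -- job name -> GPU-hours needed (assumed splittable into 1-hour chunks)
    hourly_price   -- energy price for each hour of the scheduling window
    slots_per_hour -- GPU-hours the cluster can run in any one hour
    Returns (plan, cost): plan maps hour -> job chunks scheduled in that hour.
    """
    remaining = dict(job_hours)
    plan = {hour: [] for hour in range(len(hourly_price))}
    cost = 0.0
    # Visit hours from cheapest to most expensive and fill each one up.
    for hour in sorted(plan, key=lambda h: hourly_price[h]):
        free = slots_per_hour
        for job in remaining:
            while remaining[job] > 0 and free > 0:
                plan[hour].append(job)
                remaining[job] -= 1
                free -= 1
                cost += hourly_price[hour]
    if any(left > 0 for left in remaining.values()):
        raise ValueError("not enough capacity in the window for all jobs")
    return plan, cost

prices = [0.30, 0.10, 0.20, 0.05]   # hypothetical energy price per GPU-hour, hours 0-3
plan, cost = schedule_jobs({"train-a": 2, "eval-b": 1}, prices, slots_per_hour=2)
print(plan, f"cost={cost:.2f}")
```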

Another effective approach involves leveraging automation tools to monitor and manage infrastructure usage. These tools provide real-time insights into resource consumption, enabling businesses to identify inefficiencies and implement corrective measures. For example, scaling down unused virtual machines or reallocating underutilized resources can significantly lower operational costs.
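Finding candidates for scale-down can start from utilization telemetry. The sketch below flags instances whose average utilization stays under a threshold. The threshold, minimum sample count, and instance names are hypothetical. Actually stopping or resizing an instance would go through your cloud provider's or orchestrator's own API, which is not shown here.

```python
from statistics import mean

def find_underutilized(samples_by_instance, threshold=0.10, min_samples=12):
    """Return IDs of instances whose mean utilization is below `threshold`.

    samples_by_instance -- instance ID -> utilization readings in [0, 1]
    min_samples         -- ignore instances with too little history to judge
    """
    return sorted(
        instance_id
        for instance_id, samples in samples_by_instance.items()
        if len(samples) >= min_samples and mean(samples) < threshold
    )

# Hypothetical hourly GPU utilization over one day.
telemetry = {
    "gpu-node-a": [0.04] * 24,   # almost idle all day
    "gpu-node-b": [0.85] * 24,   # busy
    "gpu-node-c": [0.02] * 3,    # too little data yet
}
print(find_underutilized(telemetry))
```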

“Continuous monitoring of infrastructure usage ensures that organizations avoid overspending while maintaining system efficiency.” This proactive strategy helps businesses achieve sustainable cost management.

Additionally, organizations should consider adopting containerization and orchestration platforms, such as Kubernetes, to streamline resource management. These platforms enable efficient deployment and scaling of AI workloads, ensuring that infrastructure resources are used effectively. Through detailed TCO calculations, businesses can identify areas for improvement and implement strategies to optimize infrastructure usage.

Reducing Data Costs

Data-related expenses often constitute a significant portion of AI cost analysis. To reduce these costs, organizations must prioritize efficient data management practices. For instance, businesses can implement data deduplication techniques to eliminate redundant information, thereby reducing storage requirements. Compressing datasets before storage also reduces the usage-based storage and transfer fees charged by cloud providers.
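Both techniques are available in Python's standard library, as the minimal sketch below shows. Deduplication here compares SHA-256 content hashes, so it only catches byte-identical records. Near-duplicate detection needs other methods. The record format and counts are hypothetical.

```python
import hashlib
import zlib

def deduplicate(records):
    """Keep the first copy of each byte-identical record, using SHA-256 content hashes."""
    seen = set()
    unique = []
    for record in records:
        digest = hashlib.sha256(record).digest()
        if digest not in seen:
            seen.add(digest)
            unique.append(record)
    return unique

def compressed_size(records, level=6):
    """Bytes needed to store the newline-joined records after zlib compression."""
    return len(zlib.compress(b"\n".join(records), level))

# Hypothetical annotation records, with 200 accidental re-ingestions appended.
records = [f"img_{i:05d}.jpg,label={'cat' if i % 2 else 'dog'}".encode() for i in range(1000)]
records += records[:200]

unique = deduplicate(records)
raw_bytes = len(b"\n".join(unique))
print(f"{len(records)} -> {len(unique)} records; "
      f"{raw_bytes} raw bytes -> {compressed_size(unique)} compressed bytes")
```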

Another strategy involves selecting high-quality datasets that align with specific AI objectives. Instead of acquiring vast amounts of generic data, organizations should focus on targeted datasets that directly contribute to model accuracy and performance. This approach not only reduces acquisition costs but also minimizes preprocessing efforts.

By addressing these data-related costs strategically, businesses can enhance the financial viability of their AI initiatives.

Cloud TCO models offer valuable insights into data storage and transfer expenses. These models help organizations evaluate the cost implications of different storage options, such as on-premises systems versus cloud-based solutions. For example, businesses handling sensitive data may opt for hybrid storage models to balance cost efficiency with compliance requirements. Calculating TCO accurately ensures that all data-related expenses are accounted for, enabling informed decision-making.

Leveraging Hybrid Models

Hybrid models combine the benefits of on-premises and cloud-based AI infrastructure, offering a flexible and cost-effective solution. This approach allows organizations to allocate workloads based on specific requirements, optimizing both performance and expenses. For instance, businesses can use on-premises systems for sensitive data processing while leveraging cloud resources for scalable tasks like model training.
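The allocation rule described above can be expressed as a small placement function. This is a deliberately simplified sketch. The `Workload` fields are assumptions for illustration, and so is the rule for steady, non-sensitive workloads. Real placement decisions would also weigh cost estimates, latency, and data-transfer fees.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    name: str
    sensitive_data: bool   # subject to data-sovereignty or privacy constraints
    bursty: bool           # demand spikes, e.g. periodic large training runs

def place(workload: Workload) -> str:
    """Return "on-premises" or "cloud" for a workload (hypothetical policy)."""
    if workload.sensitive_data:
        return "on-premises"   # keep regulated data under direct control
    if workload.bursty:
        return "cloud"         # rent elastic capacity instead of buying for peak demand
    return "on-premises"       # assumption: steady load keeps owned hardware busy

workloads = [
    Workload("patient-record-inference", sensitive_data=True, bursty=False),
    Workload("quarterly-model-training", sensitive_data=False, bursty=True),
    Workload("internal-search-embeddings", sensitive_data=False, bursty=False),
]
for w in workloads:
    print(w.name, "->", place(w))
```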

The hybrid model also provides a safeguard against vendor lock-in, enabling organizations to diversify their infrastructure investments. By distributing workloads across multiple platforms, businesses can mitigate risks associated with dependency on a single provider. This strategy enhances operational resilience while maintaining cost efficiency.

“To optimize AI costs, we recommend these strategies: It is essential to select AI projects that directly address specific, measurable business objectives and contribute to the overall strategic vision.” Hybrid models align with this recommendation by enabling tailored resource allocation for diverse AI projects.

Organizations adopting hybrid models should invest in robust integration tools to ensure seamless communication between on-premises and cloud systems. These tools facilitate efficient data transfer and workload distribution, minimizing operational disruptions. Through comprehensive TCO calculations, businesses can evaluate the financial impact of hybrid models and identify opportunities for further optimization.

Negotiating Vendor Contracts

Negotiating vendor contracts plays a crucial role in optimizing the total cost of ownership (TCO) for AI infrastructure. Businesses must approach this process strategically to secure favorable terms that align with their financial and operational goals. Effective negotiation not only reduces costs but also ensures long-term value from vendor partnerships.

Key Considerations for Vendor Negotiations

Organizations should evaluate several factors when negotiating contracts with vendors. These considerations help businesses identify opportunities for cost savings and mitigate potential risks:

Pricing Models: Vendors often offer various pricing structures, such as pay-as-you-go, subscription-based, or tiered pricing. Businesses must analyze these models to determine which aligns best with their usage patterns and budget constraints.

Service Level Agreements (SLAs): SLAs define the performance standards and support levels vendors must meet. Clear and enforceable SLAs ensure accountability and minimize disruptions caused by service failures.

Hidden Costs: Contracts may include hidden fees, such as data transfer charges, maintenance costs, or penalties for exceeding usage limits. Identifying and addressing these costs during negotiations prevents unexpected expenses.

Scalability Options: AI workloads often fluctuate, requiring flexible infrastructure solutions. Vendors offering scalable resources enable businesses to adapt to changing demands without incurring excessive costs.
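One way to compare these pricing models is to compute the monthly bill each one would produce at an expected usage level. The rates, included hours, and tier boundaries below are hypothetical. Real contracts usually add the hidden charges described above, such as data transfer fees or overage penalties, which this sketch leaves out.

```python
def pay_as_you_go(hours, rate):
    """Every GPU-hour billed at the same rate."""
    return hours * rate

def subscription(hours, monthly_fee, included_hours, overage_rate):
    """Flat fee covers a quota; hours beyond it are billed at the overage rate."""
    return monthly_fee + max(0, hours - included_hours) * overage_rate

def tiered(hours, tiers):
    """Graduated pricing: tiers is [(upper_bound_hours, rate), ...] in ascending order;
    each tier's rate applies only to the hours that fall inside that tier."""
    cost, lower = 0.0, 0
    for upper, rate in tiers:
        if hours <= lower:
            break
        cost += (min(hours, upper) - lower) * rate
        lower = upper
    return cost

TIERS = [(100, 2.5), (400, 2.0), (float("inf"), 1.5)]

for hours in (100, 500, 1000):
    bills = {
        "pay-as-you-go": pay_as_you_go(hours, 2.0),
        "subscription": subscription(hours, 800, 400, 1.5),
        "tiered": tiered(hours, TIERS),
    }
    print(hours, bills, "cheapest:", min(bills, key=bills.get))
```

The cheapest option changes with usage. In this example, light usage favors pay-as-you-go and heavier usage favors the subscription. This is why usage patterns should drive the choice of pricing model.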

Strategies for Successful Negotiations

To achieve favorable outcomes, businesses should adopt a structured approach to vendor negotiations. The following strategies can enhance the effectiveness of this process:

Conduct Thorough Research

Organizations must gather detailed information about vendors, including their pricing structures, service offerings, and market reputation. Comparing multiple vendors provides leverage during negotiations and helps identify the most competitive options.

Define Clear Objectives

Businesses should establish specific goals for the negotiation process, such as reducing costs, securing flexible terms, or obtaining additional support services. Clear objectives guide discussions and ensure alignment with organizational priorities.

Leverage Competitive Bidding

Inviting multiple vendors to submit proposals fosters competition and encourages them to offer better terms. This approach enables businesses to evaluate various options and select the most cost-effective solution.

Negotiate Custom Terms

Standard contracts may not always meet the unique needs of an organization. Businesses should request customized terms that address their specific requirements, such as tailored pricing models or enhanced support services.

Focus on Long-Term Value

While upfront cost reductions are important, businesses must also consider the long-term value of vendor partnerships. Factors such as reliability, scalability, and support quality significantly impact the overall TCO.

Common Pitfalls to Avoid

During vendor negotiations, businesses should remain vigilant to avoid common pitfalls that could undermine their efforts:

Overlooking Contract Details: Failing to review contract terms thoroughly may result in unfavorable conditions or hidden costs. Organizations must scrutinize all clauses to ensure transparency and fairness.

Relying on a Single Vendor: Dependence on one vendor increases the risk of vendor lock-in and limits bargaining power. Diversifying vendor relationships enhances flexibility and reduces risks.

Neglecting Exit Clauses: Contracts should include clear exit clauses that allow businesses to terminate agreements without excessive penalties. This ensures flexibility in case the vendor fails to meet expectations.

“Negotiating vendor contracts effectively requires a balance between cost reduction and value creation. Businesses must prioritize transparency, flexibility, and long-term benefits to achieve sustainable success.”

By adopting these strategies and avoiding common pitfalls, organizations can optimize their vendor contracts and reduce the TCO of their AI infrastructure. A well-negotiated contract not only minimizes expenses but also strengthens vendor relationships, ensuring reliable support for AI initiatives.

Limitations and Considerations in TCO Analysis

Challenges in Accurate Cost Estimation

Accurately estimating the total cost of ownership (TCO) for AI infrastructure presents significant challenges. AI systems involve numerous cost components, many of which are dynamic and difficult to predict. For instance, hardware expenses, such as GPUs or TPUs, can fluctuate based on market demand and technological advancements. These variations make it challenging for businesses to forecast long-term costs with precision.

Operational costs further complicate cost estimation. Energy consumption, system maintenance, and data storage requirements often increase as AI systems scale. Businesses may underestimate these expenses during initial planning, leading to budget overruns. Additionally, hidden costs, such as those associated with workforce training or unexpected system downtime, frequently go unnoticed. These overlooked factors can significantly impact the overall financial viability of AI projects.

Another layer of complexity arises from the integration of AI into existing systems. Customizing AI solutions to align with organizational workflows often incurs additional development and deployment costs. These expenses vary widely depending on the complexity of the AI model and the compatibility of existing infrastructure. For example, integrating a simple AI model may cost $5,000, while deploying a complex solution could exceed $500,000.

“Achieving a high return on investment (ROI) poses a considerable challenge for businesses that lack effective AI cost optimization strategies.” This highlights the importance of thorough cost estimation to ensure that AI investments deliver measurable value.

To address these challenges, businesses must adopt a structured approach to AI cost analysis. Tools like TCO calculators can help organizations identify potential cost drivers and estimate expenses more accurately. Regularly revisiting and updating cost projections keeps businesses prepared for unforeseen financial demands.

Balancing Cost and Performance

Balancing cost and performance is a critical consideration in TCO analysis for AI infrastructure. Organizations often face trade-offs between minimizing expenses and achieving optimal system performance. For instance, investing in high-performance hardware may increase upfront costs but significantly enhance the speed and accuracy of AI models. Conversely, opting for lower-cost alternatives could result in slower processing times and reduced model efficiency.

The choice of deployment model also influences this balance. On-premises infrastructure offers greater control over performance but demands substantial capital investment. Cloud-based solutions provide scalability and flexibility, yet they introduce recurring operational expenses. Businesses must carefully evaluate these options to align their financial strategies with performance goals.

Data quality plays a pivotal role in this equation. High-quality datasets improve model accuracy but often come at a premium. Acquiring and preprocessing such data can drive up costs, particularly for projects requiring extensive data annotation or cleaning. However, poor-quality data can lead to inaccurate predictions, necessitating costly retraining efforts. Striking the right balance between data quality and cost is essential for maintaining both financial and operational efficiency.

“AI requires computational resources, and the overall costs for implementing and maintaining AI can vary widely depending on various factors.” This underscores the need for businesses to weigh cost considerations against performance requirements when planning AI deployments.

To achieve this balance, organizations should prioritize cost-effective strategies that do not compromise performance. For example, leveraging hybrid models allows businesses to allocate workloads based on specific needs, optimizing both cost and efficiency. Additionally, adopting energy-efficient hardware and automating routine tasks can reduce operational expenses while maintaining system reliability.

Ultimately, balancing cost and performance requires a holistic approach to TCO analysis. Businesses must consider all cost components, from hardware and labor to data and operations, while aligning these factors with their performance objectives. This ensures that AI investments deliver sustainable value without exceeding budgetary constraints.

TCO analysis remains a cornerstone of effective decision-making in AI infrastructure. By understanding the key cost components (infrastructure, labor, data, and operations), businesses can allocate resources wisely and avoid financial pitfalls. Comparing deployment models, including on-premises, cloud-based, and SaaS, further helps organizations align their strategies with operational goals. Cost optimization strategies, such as using cloud TCO models or refining AI cost analysis, improve both financial and operational efficiency. Calculating TCO should not be a one-time task but a continuous process that supports sustainable AI deployment and long-term success.

FAQ

What is TCO in the context of AI infrastructure?

Total Cost of Ownership (TCO) in AI refers to the comprehensive evaluation of all expenses involved in AI projects. These include costs for model serving, training and tuning, cloud hosting, training data storage, application layers, and operational support. TCO provides a holistic view of financial commitments, ensuring businesses account for both visible and hidden costs.

Why is understanding TCO important for AI and cloud investments?

Understanding TCO enables businesses to anticipate all associated costs, preventing unexpected financial burdens. This insight ensures accurate budgeting, promotes sustainable growth, and enhances operational efficiency. For AI and cloud investments, TCO analysis serves as a critical tool for aligning financial strategies with long-term objectives.

What are the key cost elements to consider in building AI systems?

Building AI systems involves several cost elements, including expenses for data scientists, AI engineers, software developers, project managers, and business analysts. Hardware and specialized expertise also contribute significantly. Recognizing these components helps organizations allocate resources effectively and plan budgets with precision.

Why is measuring the costs and returns of AI implementation crucial for healthcare decision-makers?

Healthcare decision-makers must measure the costs and returns of AI implementation to ensure regulatory compliance and achieve a positive ROI. Comprehensive TCO analyses and healthcare-specific ROI models guide informed decisions, leading to improved patient outcomes and enhanced operational efficiency.

“In healthcare, understanding TCO ensures that AI investments align with both financial goals and patient care priorities.”

What are the main expenses included in the total AI TCO?

The total AI TCO primarily includes infrastructure costs, such as hardware and software setups, and labor costs for engineers and specialists, along with data and ongoing operational costs. Some AI TCO calculators focus only on deployment and running costs and may exclude items such as hardware maintenance or workforce re-engagement. Those costs then need to be added separately.

What does the AI Cloud Total Cost of Ownership model analyze?

The AI Cloud TCO model evaluates various factors, including historical and future GPU rental prices, install base projections, inference and training costs, and GPU throughput optimizations. It provides a detailed analysis of financial implications, helping businesses optimize their cloud-based AI investments.
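GPU rental price and throughput combine into a unit cost that makes options easy to compare. The sketch below converts an hourly GPU price and a sustained token throughput into a cost per million tokens. It assumes a single GPU, a flat hourly price, and constant throughput. The figures are hypothetical and are not taken from the model described above.

```python
def cost_per_million_tokens(gpu_hourly_price, tokens_per_second, utilization=1.0):
    """Serving cost per 1M tokens on one rented GPU.

    tokens_per_second -- sustained throughput for the workload on that GPU
    utilization       -- fraction of paid hours spent serving traffic (0 < u <= 1)
    """
    if tokens_per_second <= 0 or not 0 < utilization <= 1:
        raise ValueError("throughput must be positive and utilization in (0, 1]")
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return gpu_hourly_price / tokens_per_hour * 1_000_000

# Hypothetical: a GPU rented at 2.00/hour.
print(round(cost_per_million_tokens(2.00, 1_000), 3))                   # baseline
print(round(cost_per_million_tokens(2.00, 2_000), 3))                   # throughput doubled
print(round(cost_per_million_tokens(2.00, 1_000, utilization=0.5), 3))  # half the paid hours idle
```

Doubling throughput halves the unit cost, and leaving paid hours idle raises it. This is why throughput optimizations and utilization appear in cloud TCO models.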

Why is calculating TCO important for organizations considering the adoption of language models?

Calculating TCO for language models offers organizations valuable insights into the financial impact of implementing these technologies. A reliable TCO calculator aids in budgeting, comparing solutions, and ensuring long-term sustainability. This analysis is essential for organizations aiming to adopt large-scale language models effectively.

What are the various costs associated with implementing AI features in a business?

The costs of implementing AI features vary based on specific use cases and requirements. Key factors driving these expenses include data acquisition, model development, infrastructure, and operational support. Understanding these variables ensures businesses can budget accurately and achieve successful AI integration.

What is the range of costs for AI applications?

AI application costs range widely, from $5,000 for basic models to over $500,000 for advanced, cutting-edge solutions. Effective budgeting requires a thorough understanding of cost components, from initial development to ongoing maintenance, ensuring informed decision-making and resource allocation.

“Investing in AI demands careful financial planning to balance innovation with affordability.”

AI workloads are transforming data centers, pushing their power density to unprecedented levels. Traditional cooling methods struggle to manage the heat generated by these high-performance systems. Cooling systems now account for nearly a third of energy consumption in data centers, highlighting their critical role. Liquid cooling has emerged as a game-changer, offering superior heat dissipation and energy efficiency. By adopting advanced cooling strategies, you can ensure optimal performance, reduce operational costs, and maintain system reliability in your data center. Effective cooling is no longer optional; it is essential for meeting the demands of modern workloads.

Key Takeaways

Liquid cooling is essential for managing the high heat output of AI workloads, significantly outperforming traditional air cooling methods.

Implementing energy-efficient cooling solutions can reduce operational costs and environmental impact, making liquid cooling a preferred choice for modern data centers.

Scalability is crucial; choose cooling systems that can adapt to increasing heat loads as your data center grows to avoid costly retrofits.

Prioritize infrastructure compatibility to ensure smooth integration of new cooling systems, minimizing installation challenges and expenses.

Invest in preventive maintenance and specialized expertise to enhance the reliability and performance of advanced cooling systems.

Utilize real-time temperature monitoring to proactively manage heat levels, preventing overheating and extending equipment lifespan.

Consider the total cost of ownership when selecting cooling systems, focusing on long-term savings through energy efficiency and reduced downtime.

Challenges of Cooling High-Density AI Deployments

Heat Density in AI Workloads

AI workloads generate significantly more heat than traditional data center workloads. This is due to the high power density of AI servers, which often ranges from 20 kW to 80 kW per rack. Such high heat density demands advanced cooling systems to maintain optimal performance. Conventional air cooling methods struggle to dissipate this level of heat effectively, so liquid cooling has become a preferred solution. It addresses the thermal challenges posed by AI deployments directly, ensuring efficient heat removal and preventing overheating. Without proper thermal management, your data center risks reduced system reliability and potential hardware failures.
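The heat a coolant stream can carry follows the energy balance Q = ṁ·c_p·ΔT: heat load equals mass flow times specific heat times temperature rise. The sketch below uses this balance to estimate the flow a rack needs and compares water with air. The property values are approximate figures for water and air near room temperature. Real coolants, such as glycol mixtures, and vendor specifications will differ.

```python
# Approximate properties near room temperature; check your coolant's datasheet for real designs.
WATER = {"cp": 4186.0, "density_kg_per_l": 0.997}   # J/(kg*K), kg/L
AIR = {"cp": 1005.0, "density_kg_per_l": 0.0012}    # J/(kg*K), kg/L (about 1.2 kg/m^3)

def required_flow_lpm(heat_load_kw, delta_t_k, fluid):
    """Volumetric flow (litres per minute) needed to remove `heat_load_kw`
    with a coolant temperature rise of `delta_t_k`, from Q = m_dot * cp * dT."""
    mass_flow_kg_s = heat_load_kw * 1000.0 / (fluid["cp"] * delta_t_k)
    return mass_flow_kg_s / fluid["density_kg_per_l"] * 60.0

for rack_kw in (20, 80):  # the per-rack range cited above
    water = required_flow_lpm(rack_kw, 10, WATER)
    air = required_flow_lpm(rack_kw, 10, AIR)
    print(f"{rack_kw} kW rack, 10 K rise: water {water:,.0f} L/min, air {air:,.0f} L/min")
```

With these property values, air needs more than three thousand times the volume of water to carry the same heat at the same temperature rise. That gap explains why air cooling struggles at AI rack densities.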

Energy Efficiency and Cost Management

Cooling accounts for a substantial portion of a data center’s energy consumption, often nearing one-third of the total usage. For AI deployments, this figure can rise even higher due to the increased cooling demands. Implementing energy-efficient solutions is essential to control operational costs and reduce environmental impact. Liquid cooling systems, such as direct-to-chip cooling, offer superior energy efficiency by targeting heat sources directly. Additionally, hybrid cooling systems combine air and liquid technologies to optimize energy use. By adopting these advanced cooling methods, you can achieve significant cost savings while maintaining high-performance standards.
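
To make "nearly one-third" concrete, the sketch below relates cooling's share of energy to Power Usage Effectiveness (PUE, total facility energy divided by IT energy). Every number in it is hypothetical: a 1 MW IT load running all year, and assumed cooling overheads for an air-cooled and a liquid-cooled scenario. It is a back-of-the-envelope model, not a measurement.

```python
from dataclasses import dataclass


@dataclass
class FacilityEnergy:
    """Annual energy use in kWh. The example values below are hypothetical."""
    it_kwh: float        # servers, storage, networking
    cooling_kwh: float   # chillers, CRAC/CRAH units, pumps, fans
    other_kwh: float     # power conversion losses, lighting, etc.

    @property
    def total_kwh(self) -> float:
        return self.it_kwh + self.cooling_kwh + self.other_kwh

    @property
    def cooling_share(self) -> float:
        """Fraction of total facility energy consumed by cooling."""
        return self.cooling_kwh / self.total_kwh

    @property
    def pue(self) -> float:
        """Power Usage Effectiveness: total facility energy divided by IT energy."""
        return self.total_kwh / self.it_kwh


def annual_savings(before: FacilityEnergy, after: FacilityEnergy, price_per_kwh: float) -> float:
    """Cost difference between two scenarios at a flat electricity price."""
    return (before.total_kwh - after.total_kwh) * price_per_kwh


if __name__ == "__main__":
    it = 1000 * 8760  # hypothetical 1 MW IT load for 8,760 hours
    air = FacilityEnergy(it_kwh=it, cooling_kwh=0.55 * it, other_kwh=0.10 * it)     # assumed
    liquid = FacilityEnergy(it_kwh=it, cooling_kwh=0.20 * it, other_kwh=0.10 * it)  # assumed
    for name, f in (("air", air), ("liquid", liquid)):
        print(f"{name}: cooling share {f.cooling_share:.0%}, PUE {f.pue:.2f}")
    print(f"savings at $0.10/kWh: ${annual_savings(air, liquid, 0.10):,.0f}")
```

With the assumed air-cooled overheads, cooling works out to one-third of total energy (PUE 1.65); the liquid scenario's lower cooling overhead is an assumption chosen for illustration.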

Environmental Impact of Cooling Systems

The environmental footprint of cooling systems is a growing concern for data centers. Traditional cooling methods rely heavily on energy-intensive processes, contributing to higher carbon emissions. AI deployments exacerbate this issue due to their elevated cooling requirements. Transitioning to energy-efficient solutions like liquid cooling can mitigate environmental impact. These systems not only reduce energy consumption but also support sustainability goals. Furthermore, innovations in green cooling technologies, such as AI-driven optimization and renewable energy integration, are paving the way for more eco-friendly data center operations. By prioritizing environmentally conscious cooling strategies, you can align your data center with global sustainability efforts.

Types of Cooling Systems for AI-Powered Data Centers

Choosing the right cooling systems for your AI-powered data center is essential to ensure efficiency and reliability. Advanced cooling technologies have been developed to address the unique challenges posed by high-density workloads. Below, you’ll find an overview of four key cooling solutions that can transform your data center operations.

Direct-to-Chip Liquid Cooling

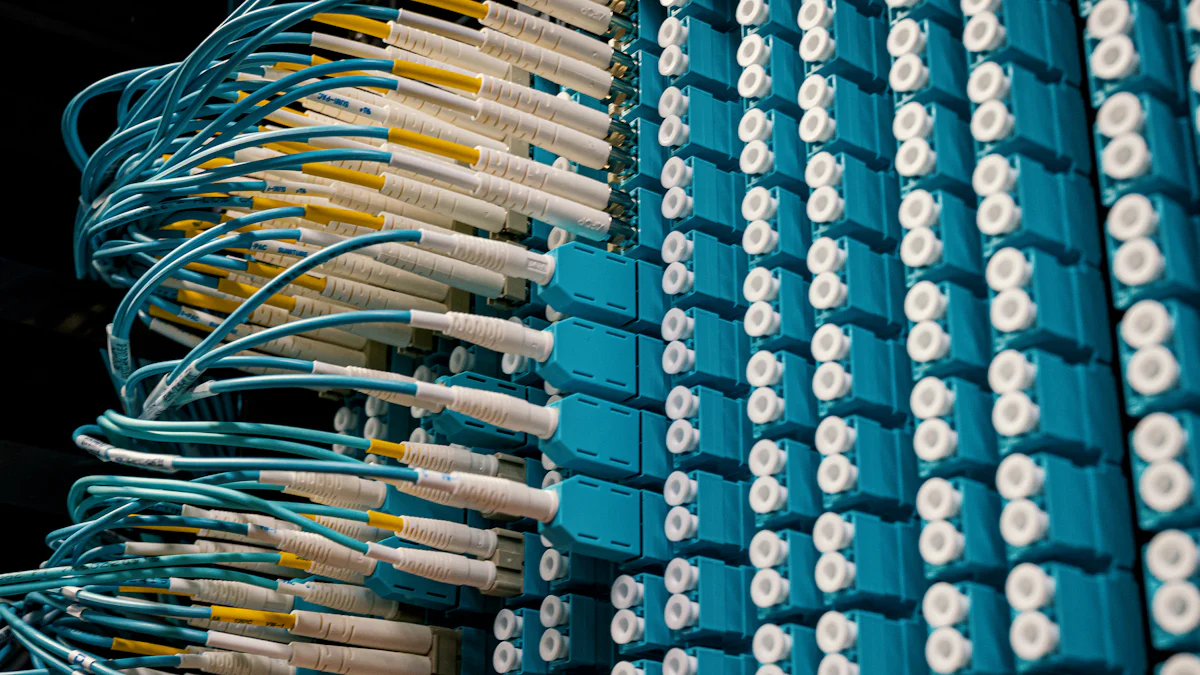

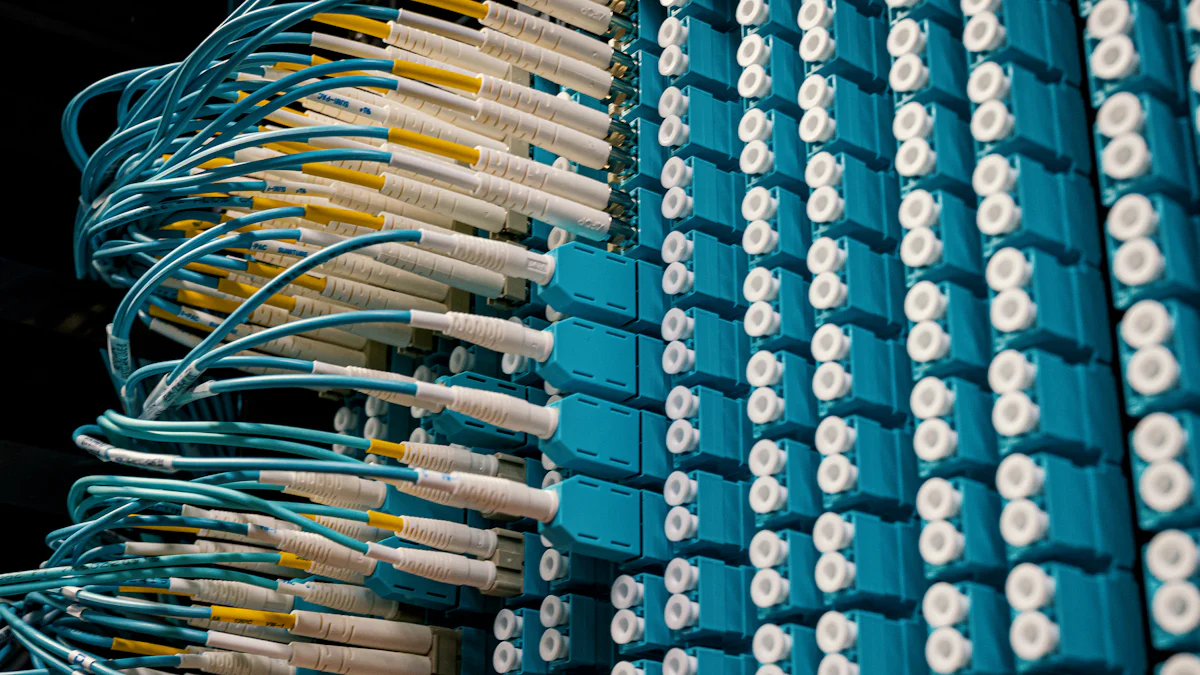

Direct-to-chip cooling is one of the most efficient methods for managing heat in AI workloads. This system uses liquid-cooled cold plates that come into direct contact with CPUs and GPUs. The liquid absorbs heat from these components and transfers it away through a coolant loop. By targeting the heat source directly, this method ensures precise thermal management and reduces the need for airflow within the server.
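
The heat a cold-plate loop carries away follows the energy balance Q = ṁ · c_p · ΔT. The sketch below solves it for the coolant flow a server would need. It assumes plain water (c_p about 4186 J/(kg·K), density about 997 kg/m³); many real loops use water-glycol mixtures with a lower specific heat, so treat the defaults as placeholders. The 8 × 700 W server is a hypothetical example.

```python
# Energy balance for a coolant loop: Q = m_dot * c_p * delta_T,
# rearranged to m_dot = Q / (c_p * delta_T).

WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), pure water near room temperature (assumption)
WATER_DENSITY = 997.0         # kg/m^3, near 25 C (assumption)


def coolant_flow_lpm(heat_load_w: float, delta_t_k: float,
                     c_p: float = WATER_SPECIFIC_HEAT,
                     density: float = WATER_DENSITY) -> float:
    """Coolant flow in litres per minute needed to absorb heat_load_w with a delta_t_k rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_load_w / (c_p * delta_t_k)
    volume_flow_m3_s = mass_flow_kg_s / density
    return volume_flow_m3_s * 1000.0 * 60.0  # m^3/s -> L/min


if __name__ == "__main__":
    gpu_heat_w = 8 * 700  # hypothetical server: 8 GPUs at 700 W each
    print(f"{coolant_flow_lpm(gpu_heat_w, delta_t_k=10.0):.1f} L/min")
```

Allowing a larger coolant temperature rise lowers the required flow proportionally, which is one of the trade-offs a cold-plate design balances against chip temperatures.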

You’ll find this solution particularly effective for AI training clusters, where processors generate immense heat. Direct-to-chip cooling improves performance while reducing energy consumption. It scales well and fits readily into modern data center liquid cooling setups, making it ideal for high-performance computing environments.

Immersion Cooling Systems

Immersion cooling takes a different approach: it submerges entire servers in a dielectric liquid. Because the liquid is electrically non-conductive, it can safely absorb heat from every component. Immersion cooling systems can handle extremely high heat loads, often exceeding 100 kW per tank, which makes them well suited to AI and HPC workloads.

This method eliminates the need for server fans and forced airflow, which reduces noise and energy usage. Immersion cooling also offers excellent thermal stability, ensuring consistent performance even under heavy workloads. If you’re looking for a cutting-edge solution to tackle the heat generated by advanced processors, immersion cooling offers exceptional efficiency and reliability.

Rear-Door Heat Exchangers

Rear-door heat exchangers (RDHx) integrate liquid cooling into the rear door of a server rack. These systems use chilled water to extract heat from the server exhaust air as it leaves the rack. RDHx is a hybrid solution that combines the benefits of liquid cooling with the simplicity of air-based systems. It’s an excellent choice for retrofitting existing data centers without requiring significant infrastructure changes.

This cooling method is highly effective in managing moderate to high heat loads. By removing heat directly at the rack level, RDHx reduces the strain on traditional air conditioning systems. If your data center needs a flexible and cost-effective cooling upgrade, rear-door heat exchangers offer a practical solution.
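
One way to see how a rear door relieves room cooling is to split each rack’s heat into the part the door removes and the residual that the CRAC/CRAH units must still handle. The sketch below models the door with a single rated capacity; `RearDoorHX`, the 35 kW rating, and the rack loads are all hypothetical values, not vendor figures, and real door performance also depends on water temperature and airflow.

```python
from dataclasses import dataclass


@dataclass
class RearDoorHX:
    """Hypothetical rear-door heat exchanger, modelled only by its rated heat removal (kW)."""
    rated_capacity_kw: float

    def split_load(self, rack_heat_kw: float) -> tuple[float, float]:
        """Return (heat removed by the door, residual heat left for room cooling), in kW."""
        removed = min(rack_heat_kw, self.rated_capacity_kw)
        return removed, rack_heat_kw - removed


def room_cooling_load_kw(racks_kw: list[float], door: RearDoorHX) -> float:
    """Total residual heat the room's air conditioning must still remove."""
    return sum(door.split_load(kw)[1] for kw in racks_kw)


if __name__ == "__main__":
    door = RearDoorHX(rated_capacity_kw=35.0)  # assumed rating
    racks = [20.0, 30.0, 45.0, 50.0]           # hypothetical row of racks
    print(f"room load without doors: {sum(racks):.0f} kW")
    print(f"room load with doors:    {room_cooling_load_kw(racks, door):.0f} kW")
```

In this toy row, racks within the door’s rating add nothing to the room load, while the 45 kW and 50 kW racks spill their excess back into the room, which matches the idea that RDHx suits moderate to high loads rather than the very highest densities.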

Hybrid Cooling Systems (Air and Liquid)

Hybrid cooling systems combine the strengths of air and liquid cooling to create a versatile solution for AI-powered data centers. These systems adapt to varying cooling demands by shifting heat load between air and liquid cooling as conditions change. This flexibility makes hybrid cooling an excellent choice for environments with fluctuating workloads or evolving infrastructure needs.

Hybrid cooling relies on air-to-water heat exchange: cool water absorbs heat from hot air, significantly increasing the system’s cooling capacity. This approach ensures efficient thermal management, even in high-density setups. By integrating air and liquid cooling, hybrid systems provide a balanced solution that optimizes energy use while maintaining reliable performance.
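
The way a hybrid system adapts to varying demand can be pictured as a simple allocation rule: send as much heat as possible to the liquid loop, let air handle the rest, and flag any load the air side cannot absorb. The sketch below is a hypothetical model, not a real controller; the `HybridCooling` class, the capacities, and the assumed 75% share of IT heat reachable by liquid-cooled components are all illustrative.

```python
from dataclasses import dataclass


@dataclass
class HybridCooling:
    """Hypothetical hybrid plant: a liquid loop plus room air, each with a capacity in kW."""
    liquid_capacity_kw: float
    air_capacity_kw: float
    liquid_capture: float = 0.75  # assumed share of IT heat reachable by liquid cooling

    def allocate(self, it_heat_kw: float) -> dict[str, float]:
        """Prefer the liquid loop (up to its capacity); the remainder goes to air."""
        to_liquid = min(it_heat_kw * self.liquid_capture, self.liquid_capacity_kw)
        to_air = it_heat_kw - to_liquid
        return {
            "liquid_kw": to_liquid,
            "air_kw": to_air,
            "air_overload_kw": max(0.0, to_air - self.air_capacity_kw),
        }


if __name__ == "__main__":
    plant = HybridCooling(liquid_capacity_kw=300.0, air_capacity_kw=120.0)
    for load_kw in (100.0, 300.0, 500.0):  # hypothetical IT heat loads
        print(load_kw, plant.allocate(load_kw))
```

At the largest load in this example the liquid loop saturates and the air side is overloaded, which is the kind of condition a capacity plan for a hybrid facility needs to catch early.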

Hybrid cooling systems are particularly advantageous for retrofitting existing data centers. They require minimal modifications to infrastructure, making them a cost-effective option for facilities transitioning to advanced cooling technologies. While moderately more expensive than traditional air-based systems, hybrid cooling offers long-term savings through improved efficiency and reduced energy consumption.

However, it’s essential to consider the water usage associated with hybrid cooling. These systems consume significant amounts of water during operation, which may impact sustainability goals. To address this, you can explore water-efficient designs or pair hybrid systems with renewable energy sources to minimize environmental impact.
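
A common way to track water consumption is Water Usage Effectiveness (WUE), defined by The Green Grid as annual site water usage in litres divided by IT equipment energy in kWh. The sketch below computes it for two hypothetical designs; the water volumes are made-up inputs used only to show the calculation.

```python
def water_usage_effectiveness(annual_water_litres: float, annual_it_kwh: float) -> float:
    """WUE = site water use (L) / IT equipment energy (kWh), in L/kWh."""
    if annual_it_kwh <= 0:
        raise ValueError("annual_it_kwh must be positive")
    return annual_water_litres / annual_it_kwh


if __name__ == "__main__":
    it_kwh = 1000 * 8760  # hypothetical 1 MW IT load for a year
    designs = {           # hypothetical annual water use per design, in litres
        "baseline hybrid design": 15_000_000,
        "water-efficient hybrid design": 2_000_000,
    }
    for name, litres in designs.items():
        print(f"{name}: WUE {water_usage_effectiveness(litres, it_kwh):.2f} L/kWh")
```

Tracking WUE alongside PUE helps show whether an energy saving is being bought with extra water consumption.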

For AI workloads, hybrid cooling systems strike a balance between performance, cost, and adaptability. They enhance the overall efficiency of data center liquid cooling setups, ensuring your facility remains future-ready without compromising on reliability.

Benefits of Liquid Cooling Technology

Enhanced Performance for AI Workloads

Liquid cooling significantly enhances the performance of AI workloads by efficiently managing the heat generated by high-density computing environments. Unlike traditional air-based systems, liquid cooling absorbs and dissipates heat much faster, ensuring that processors operate at optimal temperatures. This capability is especially critical for AI deployments, where chips often run hotter due to their intensive computational demands.

According to commonly cited figures, liquid can carry roughly 3,000 times more heat than the same volume of air, which makes liquid cooling far more effective than air at dissipating heat from server components. This efficiency helps your AI systems maintain peak performance without throttling or overheating.
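
One physical basis for figures of this size is volumetric heat capacity: density times specific heat, or how much heat a cubic metre of fluid absorbs per kelvin of temperature rise. The sketch below compares water and air using approximate room-temperature properties (assumed values). It compares the fluids only; the effectiveness of a whole cooling system also depends on flow rates, heat exchangers, and temperatures.

```python
# Volumetric heat capacity = density * specific heat, in J/(m^3*K).
FLUIDS = {
    # name: (density kg/m^3, specific heat J/(kg*K)), approximate near room temperature
    "air": (1.2, 1005.0),
    "water": (997.0, 4186.0),
}


def volumetric_heat_capacity(fluid: str) -> float:
    density, specific_heat = FLUIDS[fluid]
    return density * specific_heat


def heat_capacity_ratio(fluid_a: str, fluid_b: str) -> float:
    """How many times more heat per unit volume fluid_a absorbs than fluid_b."""
    return volumetric_heat_capacity(fluid_a) / volumetric_heat_capacity(fluid_b)


if __name__ == "__main__":
    print(f"water vs air: {heat_capacity_ratio('water', 'air'):,.0f}x")
```

With these values water absorbs roughly 3,500 times more heat per unit volume than air, consistent in order of magnitude with the figure quoted above.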

By adopting liquid cooling solutions, you can unlock the full potential of your high-performance computing infrastructure. The improved thermal management allows processors to handle more complex workloads, enabling faster training and inference for AI models. This makes liquid cooling indispensable for modern data centers aiming to support advanced AI applications.

Improved Energy Efficiency

Energy efficiency is a cornerstone of liquid cooling technology. Traditional cooling systems consume vast amounts of energy to maintain the required temperatures in data centers. Liquid cooling, however, directly targets heat sources, reducing the need for energy-intensive air circulation and cooling mechanisms.

Research highlights that liquid cooling technologies not only lower energy consumption but also align with sustainability goals by minimizing carbon emissions. This makes them a preferred choice for environmentally conscious data centers.

By integrating data center liquid cooling into your operations, you can achieve substantial energy savings. For instance, direct-to-chip cooling avoids much of the inefficiency of air-based systems by transferring heat directly from CPUs and GPUs to a coolant loop. This targeted approach reduces overall power usage, cutting operational costs while maintaining high cooling efficiency.

Increased Reliability in High-Density Environments

Reliability is a critical factor in high-density environments, where temperature excursions can quickly lead to throttling or hardware failures. Liquid cooling provides consistent and effective thermal management, keeping your systems stable under heavy workloads. This reliability is particularly vital for AI workloads, which demand uninterrupted performance.

Studies emphasize that liquid cooling is essential for AI servers, as it prevents overheating and extends the lifespan of critical components. By maintaining stable operating conditions, liquid cooling reduces the risk of downtime and costly repairs.

In addition to enhancing system reliability, liquid cooling supports scalability. As your data center grows to accommodate more powerful hardware, liquid cooling systems can adapt to meet increasing thermal demands. This scalability ensures that your infrastructure remains future-ready, capable of handling the evolving needs of AI and high-performance computing.

How to Evaluate and Choose the Right Cooling System

Selecting the right cooling system for your data center requires careful evaluation. Each decision impacts performance, energy consumption, and long-term operational costs. By focusing on key factors like scalability, infrastructure compatibility, and cost-effectiveness, you can ensure your cooling strategy aligns with your data center’s needs.

Assessing Scalability and Future Needs

Scalability is a critical factor when evaluating cooling systems. As AI workloads grow, your data center must handle increasing heat loads without compromising performance. A scalable cooling solution ensures your infrastructure adapts to future demands, saving you from costly retrofits or replacements.

For instance, liquid cooling systems excel in scalability. They can accommodate higher power densities as your compute requirements expand. This makes them ideal for businesses investing in AI or edge deployments. By planning for growth, you avoid disruptions and maintain operational efficiency.

Pro Tip: Choose a cooling system designed to scale with your data center. This approach minimizes downtime and ensures seamless integration of new technologies.
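
A simple way to test whether a cooling option will scale is to project rack heat load forward and find the year it would exceed the system’s capacity. The sketch below assumes compound annual growth; the 20 kW starting load, the 30% growth rate, and the 25 kW and 80 kW capacities are hypothetical inputs for illustration, not recommendations.

```python
from typing import Optional


def years_until_capacity(current_kw: float, capacity_kw: float,
                         annual_growth: float, horizon_years: int = 20) -> Optional[int]:
    """First whole year in which a load growing at annual_growth (0.30 = 30%/yr)
    exceeds capacity_kw. Returns 0 if it already does, None if not within the horizon."""
    load = current_kw
    for year in range(horizon_years + 1):
        if load > capacity_kw:
            return year
        load *= 1 + annual_growth
    return None


if __name__ == "__main__":
    # Hypothetical rack at 20 kW today, growing 30% per year.
    for name, capacity in (("air-cooled rack", 25.0), ("liquid-cooled rack", 80.0)):
        print(name, years_until_capacity(20.0, capacity, 0.30))
```

Under these assumptions the air-cooled rack would be over capacity within a year, while the liquid-cooled rack would last until year six, which is the kind of gap that turns into a costly retrofit if it is not planned for.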

Integration with Existing Infrastructure

Your cooling system must integrate smoothly with your current setup. Compatibility reduces installation challenges and avoids unnecessary expenses. Evaluate whether the cooling solution aligns with your data center’s layout, power distribution, and existing equipment.

Hybrid cooling systems offer flexibility in this regard. They combine air and liquid cooling, making them suitable for retrofitting older facilities. Rear-door heat exchangers are another practical option, as they require minimal modifications while enhancing cooling efficiency.

Key Insight: Prioritize solutions that complement your infrastructure. This ensures a faster deployment process and reduces the risk of operational disruptions.

Cost Considerations and ROI

Cost plays a significant role in choosing a cooling system. However, focusing solely on upfront expenses can lead to higher operational costs over time. Instead, evaluate the total cost of ownership (TCO), which includes installation, maintenance, and energy consumption.

Liquid cooling systems, while initially more expensive, often deliver better ROI due to their energy efficiency. By targeting heat sources directly, they reduce energy usage and lower utility bills. Additionally, these systems extend hardware lifespan, minimizing repair and replacement costs.

Did You Know? Cooling accounts for nearly one-third of a data center’s energy consumption. Investing in energy-efficient solutions can significantly cut operational expenses.

When assessing ROI, consider long-term benefits like improved reliability and reduced downtime. A well-chosen cooling system not only saves money but also enhances overall performance.
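
The TCO comparison described above can be sketched as upfront cost plus running costs over a chosen period, with a payback period showing when a more expensive system recovers its extra capital cost. This is a minimal, undiscounted model; a real analysis would usually discount future cash flows. `CoolingOption` and all cost figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CoolingOption:
    """Hypothetical cost inputs, all in the same currency."""
    name: str
    capex: float               # purchase and installation
    annual_energy_cost: float
    annual_maintenance: float

    def running_cost(self) -> float:
        return self.annual_energy_cost + self.annual_maintenance

    def tco(self, years: int) -> float:
        """Undiscounted total cost of ownership over `years`."""
        return self.capex + years * self.running_cost()


def payback_years(baseline: CoolingOption, candidate: CoolingOption) -> Optional[float]:
    """Years for the candidate's lower running costs to recover its extra capex."""
    extra_capex = candidate.capex - baseline.capex
    annual_saving = baseline.running_cost() - candidate.running_cost()
    if extra_capex <= 0:
        return 0.0
    if annual_saving <= 0:
        return None  # never pays back
    return extra_capex / annual_saving


if __name__ == "__main__":
    air = CoolingOption("air", capex=1_000_000, annual_energy_cost=500_000, annual_maintenance=50_000)
    liquid = CoolingOption("liquid", capex=1_600_000, annual_energy_cost=300_000, annual_maintenance=90_000)
    print(f"10-year TCO: air {air.tco(10):,.0f}, liquid {liquid.tco(10):,.0f}")
    print(f"payback: {payback_years(air, liquid)} years")
```

In this example the liquid option costs more upfront but recovers the difference in under four years; with different inputs the result can easily reverse, which is why the comparison is worth running with your own figures.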

Expertise and Maintenance Requirements

Maintaining advanced cooling systems for AI-powered data centers demands specialized expertise. These systems, particularly liquid cooling solutions like immersion cooling, require precise handling to ensure optimal performance and reliability. Without the right knowledge and skills, you risk operational inefficiencies and costly downtime.

Industry Expert Insight: “Liquid cooling systems often require specialized maintenance protocols. Relying on outdated maintenance strategies can compromise system performance.”

To manage these systems effectively, you need a team trained in modern cooling technologies. For example, immersion cooling systems require regular checks of the coolant’s integrity to prevent contamination. You must also monitor fluid levels, especially when adding or removing servers. Neglecting these tasks can lead to overheating or reduced system efficiency.
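
Routine immersion-tank checks can be automated as a comparison of each reading against limits. The sketch below is hypothetical: the `TankReading` fields (fluid level, moisture, particle count) and the limit values are placeholders chosen for illustration, and real limits should come from the fluid and tank vendor.

```python
from dataclasses import dataclass


@dataclass
class TankReading:
    level_pct: float              # fluid level as % of the tank's nominal fill line
    moisture_ppm: float           # water content of the dielectric fluid
    particle_count_per_ml: float  # particulate contamination


# Placeholder limits for illustration only; use the vendor's specifications in practice.
LIMITS = {
    "min_level_pct": 95.0,
    "max_moisture_ppm": 50.0,
    "max_particles_per_ml": 1000.0,
}


def check_tank(reading: TankReading, limits: dict[str, float] = LIMITS) -> list[str]:
    """Return human-readable alerts for any reading outside its limit."""
    alerts = []
    if reading.level_pct < limits["min_level_pct"]:
        alerts.append(f"low fluid level: {reading.level_pct:.1f}%")
    if reading.moisture_ppm > limits["max_moisture_ppm"]:
        alerts.append(f"high moisture: {reading.moisture_ppm:.0f} ppm")
    if reading.particle_count_per_ml > limits["max_particles_per_ml"]:
        alerts.append(f"particulate contamination: {reading.particle_count_per_ml:.0f}/mL")
    return alerts


if __name__ == "__main__":
    # Hypothetical reading taken after removing a server from the tank.
    print(check_tank(TankReading(level_pct=92.5, moisture_ppm=30.0, particle_count_per_ml=400.0)))
```

Running a check like this after every hardware change, not just on a fixed schedule, catches the level drops that occur when servers are removed from a tank.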

Preventive maintenance plays a crucial role in avoiding unexpected failures. Studies reveal that reactive approaches to maintenance can cost three to four times more than planned servicing. A comprehensive preventive plan should include regular analysis of all fluids, not just oil, to detect mechanical or chemical issues early.

Key Fact: “75% of the total cost of ownership (TCO) is attributed to ongoing maintenance, while only 25% goes to system implementation.”