Data encryption is the process of converting plain text or data into a coded form that can only be accessed by authorized individuals or systems. It is a crucial aspect of cybersecurity and is used to protect sensitive information from unauthorized access, theft, or manipulation. Encryption ensures that even if data is intercepted or stolen, it remains unreadable and unusable to anyone without the decryption key.

The process of data encryption involves using an algorithm and a key to convert the original data into an encrypted form. The algorithm determines how the data is transformed, while the key is a unique piece of information that is required to decrypt the encrypted data back into its original form. Without the correct key, it is virtually impossible to decrypt the data.
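The sketch below is a toy illustration of the algorithm-plus-key idea: a one-time pad in Python. The "algorithm" is a byte-wise XOR, and the "key" is a random byte string exactly as long as the message.

A one-time pad is only secure if the key is truly random, kept secret, and never reused. That makes it impractical for most systems; real deployments use vetted algorithms such as AES through an established library. The function names here are hypothetical.

```python
import secrets


def generate_key(length: int) -> bytes:
    """Generate a random key as long as the message (a one-time pad requirement)."""
    return secrets.token_bytes(length)


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """The 'algorithm': combine each data byte with the matching key byte using XOR."""
    if len(key) != len(data):
        raise ValueError("one-time pad key must be exactly as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))


# XOR is its own inverse, so encryption and decryption are the same operation
# performed with the same key (which makes this a symmetric scheme).
encrypt = xor_bytes
decrypt = xor_bytes

plaintext = b"Account: 12345678"
key = generate_key(len(plaintext))
ciphertext = encrypt(plaintext, key)

print(ciphertext.hex())                       # looks like random bytes
print(decrypt(ciphertext, key) == plaintext)  # the correct key restores the original
```

Without `key`, every plaintext of the same length is equally consistent with the ciphertext. This is why the key, not the algorithm, has to stay secret.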

There are two main types of data encryption: symmetric encryption and asymmetric encryption. Symmetric encryption uses a single key for both encryption and decryption, while asymmetric encryption uses a pair of keys – a public key for encryption and a private key for decryption. Each type has its own advantages and use cases, depending on the specific requirements of the data and the system.
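To show how an asymmetric key pair works, here is "textbook" RSA with the tiny primes commonly used in teaching (p = 61, q = 53). The public key (e, n) encrypts and the private key (d, n) decrypts. This is an insecure toy for illustration only, not a usable implementation.

```python
from typing import Tuple

# Toy "textbook" RSA with tiny primes, for illustration only. It is insecure:
# real RSA uses moduli of 2048 bits or more plus a padding scheme such as OAEP.
p, q = 61, 53               # two small primes, kept secret
n = p * q                   # modulus shared by both keys (3233)
phi = (p - 1) * (q - 1)     # Euler's totient of n (3120)
e = 17                      # public exponent, chosen coprime with phi
d = pow(e, -1, phi)         # private exponent: modular inverse of e (needs Python 3.8+)

public_key: Tuple[int, int] = (e, n)
private_key: Tuple[int, int] = (d, n)


def encrypt(message: int, key: Tuple[int, int]) -> int:
    """Anyone holding the public key can encrypt: c = m^e mod n."""
    exponent, modulus = key
    return pow(message, exponent, modulus)


def decrypt(ciphertext: int, key: Tuple[int, int]) -> int:
    """Only the holder of the private key can decrypt: m = c^d mod n."""
    exponent, modulus = key
    return pow(ciphertext, exponent, modulus)


m = 65                          # the message must be an integer smaller than n
c = encrypt(m, public_key)
print(c)                        # 2790
print(decrypt(c, private_key))  # 65
```

In practice, asymmetric encryption is usually used to exchange or protect a symmetric key, and the bulk data is then encrypted with a fast symmetric algorithm.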

Key Takeaways

- Data encryption is the process of converting plain text into a coded message to protect sensitive information.

- Data encryption is important for safeguarding data from unauthorized access, theft, and cyber attacks.

- Data encryption in transit secures data during transmission by using encryption protocols and algorithms.

- Choosing the right encryption protocol and algorithm is crucial for ensuring the security of data.

- Key management is essential for ensuring the security of encryption keys and preventing unauthorized access to data.

Why Data Encryption is Important for Safeguarding Data

Data breaches have become increasingly common in today’s digital landscape, with cybercriminals constantly seeking ways to gain unauthorized access to sensitive information. The risks associated with data breaches are significant and can have severe consequences for individuals and organizations alike.

Protecting sensitive data is of utmost importance, as it can include personal information such as Social Security numbers, financial records, health records, or intellectual property. If this information falls into the wrong hands, it can lead to identity theft, financial fraud, reputational damage, or even legal liabilities.

The legal and financial consequences of data breaches can be substantial. Organizations that fail to adequately protect sensitive data may face regulatory fines, lawsuits from affected individuals, loss of customer trust, and damage to their brand reputation. Additionally, the costs associated with investigating and remediating a data breach can be significant, including hiring cybersecurity experts, notifying affected individuals, and implementing measures to prevent future breaches.

Data Encryption in Transit: Securing Data During Transmission

Data is constantly being transmitted between systems, networks, and devices. This data is vulnerable to interception by cybercriminals who can eavesdrop on communication channels and steal sensitive information. Data encryption in transit is essential to ensure that data remains secure during transmission.

When data is transmitted, it typically travels over networks or the internet. This data can be intercepted by attackers using various techniques such as packet sniffing or man-in-the-middle attacks. Without encryption, the intercepted data can be easily read and exploited.

To secure data during transmission, protocols such as Transport Layer Security (TLS) are used. TLS is the successor to Secure Sockets Layer (SSL). All versions of SSL are now considered insecure, although the combined term “SSL/TLS” is still common. These protocols establish a secure connection between the sender and receiver by authenticating the server and encrypting the data being transmitted. This ensures that even if the data is intercepted, it remains unreadable to unauthorized individuals.
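On the client side, opening a TLS connection can be sketched with Python's standard `ssl` and `socket` modules. This is a minimal example under some assumptions:

- The host serves HTTPS on port 443 and network access is available.
- `fetch_over_tls` and the example host are hypothetical.
- Enforcing TLS 1.2 as the minimum version is an illustrative policy choice.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context with certificate and hostname verification."""
    context = ssl.create_default_context()            # trusts the system's CA certificates
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSL and early TLS versions
    return context


def fetch_over_tls(host: str, port: int = 443) -> bytes:
    """Open a TLS connection, send a minimal HTTP request, and return the start of the reply."""
    context = make_client_context()
    with socket.create_connection((host, port), timeout=10) as raw_sock:
        # wrap_socket performs the TLS handshake; server_hostname enables SNI and hostname checks.
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            print("Negotiated:", tls_sock.version())
            request = f"HEAD / HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n"
            tls_sock.sendall(request.encode("ascii"))  # encrypted on the wire
            return tls_sock.recv(1024)
```

Calling `fetch_over_tls("example.com")` would print the negotiated protocol version and return the first bytes of the server's response. An attacker sniffing packets on the path would see only encrypted records.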

Encryption Protocols and Algorithms: Choosing the Right One for Your Needs

There are various encryption protocols and algorithms available, each with its own strengths and weaknesses. When choosing encryption methods, several factors need to be considered, including the level of security required, compatibility with existing systems, performance impact, and ease of implementation.

Some popular encryption protocols include SSL/TLS, IPsec (Internet Protocol Security), and SSH (Secure Shell). SSL/TLS is commonly used for securing web traffic and is supported by most web browsers. IPsec is used for securing network communications and is often used in virtual private networks (VPNs). SSH is primarily used for secure remote access to systems.

Encryption algorithms determine how the data is transformed during encryption and decryption. Commonly used algorithms include the following:

- Advanced Encryption Standard (AES), a symmetric algorithm.
- RSA (Rivest-Shamir-Adleman), an asymmetric algorithm.
- Triple DES (3DES), an older symmetric algorithm built on the Data Encryption Standard (DES). It is now considered legacy and has been deprecated by NIST.

AES is widely regarded as one of the most secure encryption algorithms and is used by governments and organizations worldwide.

Data Encryption at Rest: Protecting Data on Storage Devices

Data encryption at rest refers to the process of encrypting data that is stored on storage devices such as hard drives, solid-state drives, or cloud storage. This ensures that even if the storage device is lost, stolen, or accessed by unauthorized individuals, the data remains protected.

Theft or unauthorized access to storage devices is a significant risk for organizations. If sensitive data is stored in plain text, it can be easily accessed and exploited. Encrypting data at rest adds an additional layer of security by rendering the data unreadable without the decryption key.

There are several methods of encrypting data at rest, including full disk encryption (FDE), file-level encryption, and database encryption. FDE encrypts the entire storage device, ensuring that all data stored on it is protected. File-level encryption allows for selective encryption of specific files or folders. Database encryption encrypts specific databases or tables within a database.

Best practices for securing data on storage devices include using strong encryption algorithms, implementing access controls to limit who can access the encrypted data, regularly updating encryption keys, and securely managing and disposing of storage devices when they are no longer needed.

Key Management: Ensuring the Security of Encryption Keys

Encryption keys are a critical component of data encryption. They are used to encrypt and decrypt data and play a crucial role in ensuring the security of encrypted information. Proper key management is essential to prevent unauthorized access to encrypted data.

Encryption keys need to be securely generated, stored, and managed to prevent theft or loss. If an encryption key falls into the wrong hands, it can be used to decrypt encrypted data and gain unauthorized access to sensitive information.

Best practices for key management include the following:

- Generate keys with a cryptographically secure random number generator.
- Store keys in hardware security modules (HSMs) or dedicated software-based key management systems.
- Rotate encryption keys regularly.
- Implement access controls to limit who can access the keys.
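Two of these practices can be illustrated in a minimal sketch:

- **Generation:** keys come from Python's `secrets` module, a cryptographically secure random number generator.
- **Rotation:** each rotation creates a new versioned key. Older versions stay available for decryption until the data they protect has been re-encrypted.

The `KeyRing` class is hypothetical. Real systems keep keys in an HSM or key management service rather than in process memory.

```python
import secrets
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class KeyRing:
    """Hypothetical in-memory key ring illustrating key generation and rotation."""
    keys: Dict[int, bytes] = field(default_factory=dict)
    current_version: int = 0

    def rotate(self) -> int:
        """Create a new 256-bit key from a CSPRNG and make it the active key."""
        self.current_version += 1
        self.keys[self.current_version] = secrets.token_bytes(32)
        return self.current_version

    def active_key(self) -> Tuple[int, bytes]:
        """Version and key to use for new encryptions (call rotate() at least once first)."""
        return self.current_version, self.keys[self.current_version]

    def key_for(self, version: int) -> bytes:
        """Older keys remain available so data encrypted before a rotation can be decrypted."""
        return self.keys[version]

    def retire(self, version: int) -> None:
        """Destroy a key once all data encrypted under it has been re-encrypted."""
        if version == self.current_version:
            raise ValueError("cannot retire the active key")
        del self.keys[version]


ring = KeyRing()
ring.rotate()             # version 1
ring.rotate()             # version 2 becomes active
version, key = ring.active_key()
print(version, len(key))  # 2 32
```

Storing the key version alongside each ciphertext is what lets the system pick the right key for decryption after a rotation.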

Best Practices for Implementing Data Encryption

Implementing data encryption requires a comprehensive strategy that covers all aspects of data protection. Encrypting data only in transit or only at rest is not enough. Organizations need a holistic approach to data encryption to ensure the security of sensitive information.

A comprehensive encryption strategy should include the following steps:

1. Identify sensitive data: Determine what data needs to be protected and classify it based on its sensitivity level.

2. Assess encryption requirements: Evaluate the specific encryption requirements for different types of data and systems.

3. Select appropriate encryption methods: Choose the encryption protocols, algorithms, and key management practices that best meet the organization’s needs.

4. Implement encryption controls: Deploy encryption solutions across all relevant systems and devices to ensure that data is protected at all times.

5. Train employees: Educate employees on the importance of data encryption and provide training on how to properly handle encrypted data.

6. Regularly update encryption practices: Stay up-to-date with the latest encryption technologies and best practices and regularly review and update encryption policies and procedures.
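Steps 1 to 3 often end up as a policy table that maps each sensitivity level to the controls it requires. The sketch below assumes a hypothetical four-level scheme; the levels, requirements, and rotation periods are illustrative only and not a recommendation.

```python
from enum import Enum
from typing import Dict, NamedTuple


class Sensitivity(Enum):
    """Hypothetical classification levels (step 1)."""
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3
    RESTRICTED = 4


class EncryptionRequirement(NamedTuple):
    """Controls required for a level (steps 2 and 3)."""
    in_transit: bool
    at_rest: bool
    key_rotation_days: int  # 0 means no rotation schedule is required


# Illustrative policy table; the values are assumptions, not a standard.
POLICY: Dict[Sensitivity, EncryptionRequirement] = {
    Sensitivity.PUBLIC: EncryptionRequirement(in_transit=True, at_rest=False, key_rotation_days=0),
    Sensitivity.INTERNAL: EncryptionRequirement(in_transit=True, at_rest=True, key_rotation_days=365),
    Sensitivity.CONFIDENTIAL: EncryptionRequirement(in_transit=True, at_rest=True, key_rotation_days=180),
    Sensitivity.RESTRICTED: EncryptionRequirement(in_transit=True, at_rest=True, key_rotation_days=90),
}


def requirements_for(datasets: Dict[str, Sensitivity]) -> Dict[str, EncryptionRequirement]:
    """Map each classified dataset to the controls it needs (input to step 4)."""
    return {name: POLICY[level] for name, level in datasets.items()}


inventory = {
    "marketing_site": Sensitivity.PUBLIC,
    "customer_records": Sensitivity.CONFIDENTIAL,
    "payment_cards": Sensitivity.RESTRICTED,
}
for name, requirement in requirements_for(inventory).items():
    print(name, requirement)
```

Keeping the policy in one table makes step 6 easier: tightening a requirement means changing a single entry rather than every system configuration.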

Common Challenges in Data Encryption and How to Overcome Them

While data encryption is essential for protecting sensitive information, there are several challenges that organizations may face when implementing encryption solutions. These challenges include key management issues, compatibility issues with legacy systems, and user adoption challenges.

Key management is a critical aspect of data encryption, and organizations need to ensure that encryption keys are securely generated, stored, and managed. However, managing a large number of keys can be complex and time-consuming. Implementing a centralized key management system can help streamline key management processes and ensure the security of encryption keys.

Compatibility issues with legacy systems can also pose challenges when implementing data encryption. Older systems may not support modern encryption protocols or algorithms, making it difficult to encrypt data without impacting system performance. In such cases, organizations may need to upgrade or replace legacy systems to ensure compatibility with encryption solutions.

User adoption is another common challenge in data encryption. Employees may resist using encryption tools or may not fully understand how to properly handle encrypted data. Providing comprehensive training and education on the importance of data encryption and how to use encryption tools effectively can help overcome user adoption challenges.

Compliance and Regulatory Requirements for Data Encryption

Data protection regulations have become increasingly stringent in recent years, with many countries implementing laws to protect the privacy and security of personal information. Organizations need to comply with these regulations and ensure that sensitive data is adequately protected through encryption.

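Different industries have specific compliance requirements when it comes to data encryption. In the United States, for example, the healthcare industry is subject to the Health Insurance Portability and Accountability Act (HIPAA). Its Security Rule treats encryption of electronic protected health information (ePHI) as an “addressable” safeguard: covered entities must implement it where reasonable and appropriate, or document why not and adopt an equivalent measure. Organizations that store, process, or transmit payment card data must follow the Payment Card Industry Data Security Standard (PCI DSS). This industry standard requires stored card data to be rendered unreadable (for example, with strong cryptography) and cardholder data to be encrypted when sent over open, public networks.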

To meet compliance requirements, organizations need to implement encryption solutions that align with the specific regulations applicable to their industry. They also need to regularly review and update their encryption practices to ensure ongoing compliance.

Future Trends in Data Encryption: What to Expect in the Coming Years

The field of data encryption is constantly evolving, driven by advancements in technology and emerging cybersecurity threats. Several trends are expected to shape the future of data encryption in the coming years.

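Advancements in encryption technology are likely to lead to more secure and efficient encryption algorithms and protocols. Quantum computing is a key driver. A sufficiently large quantum computer could break widely used public-key algorithms such as RSA and elliptic-curve cryptography, which is spurring the development of post-quantum cryptography designed to withstand attacks from quantum computers. Symmetric algorithms such as AES are considered less exposed, although longer keys are recommended.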

Emerging techniques such as homomorphic encryption, which allows computations to be performed on encrypted data without decrypting it first, are likely to gain traction. This could enable secure data processing in cloud environments. There, data would remain encrypted not only in transit and at rest but also while it is being processed.
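Fully homomorphic encryption schemes are complex, but the core idea appears in a simpler, partially homomorphic case. Unpadded "textbook" RSA is multiplicatively homomorphic: multiplying two ciphertexts gives an encryption of the product of the plaintexts.

The sketch uses tiny, insecure classroom parameters purely for illustration. Padded RSA as used in practice does not have this property, and practical homomorphic schemes work differently.

```python
# Toy textbook-RSA key pair (tiny classroom parameters; insecure).
p, q = 61, 53
n = p * q                          # 3233
e = 17
d = pow(e, -1, (p - 1) * (q - 1))  # 2753 (needs Python 3.8+)


def encrypt(m: int) -> int:
    return pow(m, e, n)


def decrypt(c: int) -> int:
    return pow(c, d, n)


a, b = 5, 7
ca, cb = encrypt(a), encrypt(b)

# An untrusted server multiplies the ciphertexts without ever seeing a or b,
# because (a^e)(b^e) = (a*b)^e (mod n).
c_product = (ca * cb) % n

print(decrypt(c_product))  # 35, i.e. a * b (valid while a * b < n)
```

Only the key holder can read the result, yet the computation itself happened entirely on encrypted values.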

The future of data encryption is also likely to be influenced by the increasing adoption of artificial intelligence (AI) and machine learning (ML) technologies. AI and ML can be used to enhance encryption algorithms, detect anomalies in encrypted data, and improve key management practices.

Data encryption is a critical aspect of cybersecurity and is essential for protecting sensitive information from unauthorized access, theft, or manipulation. The risks associated with data breaches are significant, and organizations need to implement robust encryption solutions to safeguard their data.

Implementing data encryption requires a comprehensive strategy that covers all aspects of data protection, including encryption in transit and at rest, key management, and compliance with regulatory requirements. Organizations also need to overcome common challenges such as key management issues, compatibility issues with legacy systems, and user adoption challenges.

The future of data encryption is likely to be shaped by advancements in technology, emerging techniques such as homomorphic encryption, and the increasing adoption of AI and ML. As the digital landscape continues to evolve, organizations must stay up to date with the latest encryption technologies and best practices to keep their sensitive information secure.


FAQs

What is data encryption?

Data encryption is the process of converting plain text or data into a coded language to prevent unauthorized access to the information.

What is data encryption in transit?

Data encryption in transit refers to the process of encrypting data while it is being transmitted over a network or the internet to prevent interception and unauthorized access.

What is data encryption at rest?

Data encryption at rest refers to the process of encrypting data that is stored on a device or server to prevent unauthorized access in case the device or server is lost, stolen, or hacked.

What are the benefits of data encryption?

Data encryption provides an additional layer of security to protect sensitive information from unauthorized access, theft, or interception. It also helps organizations comply with data protection regulations and avoid costly data breaches.

What are the common encryption algorithms used for data encryption?

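Common encryption algorithms include the Advanced Encryption Standard (AES) and Rivest-Shamir-Adleman (RSA). The older Data Encryption Standard (DES) and Triple DES (3DES) still appear in legacy systems. However, DES is considered insecure and 3DES has been deprecated, so neither should be chosen for new systems.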

What are the best practices for data encryption?

Best practices for data encryption include using strong encryption algorithms, implementing secure key management, regularly updating encryption protocols, and limiting access to encrypted data to authorized personnel only.

What are the challenges of data encryption?

Challenges of data encryption include the potential impact on system performance, the complexity of managing encryption keys, and the risk of data loss if encryption keys are lost or compromised.

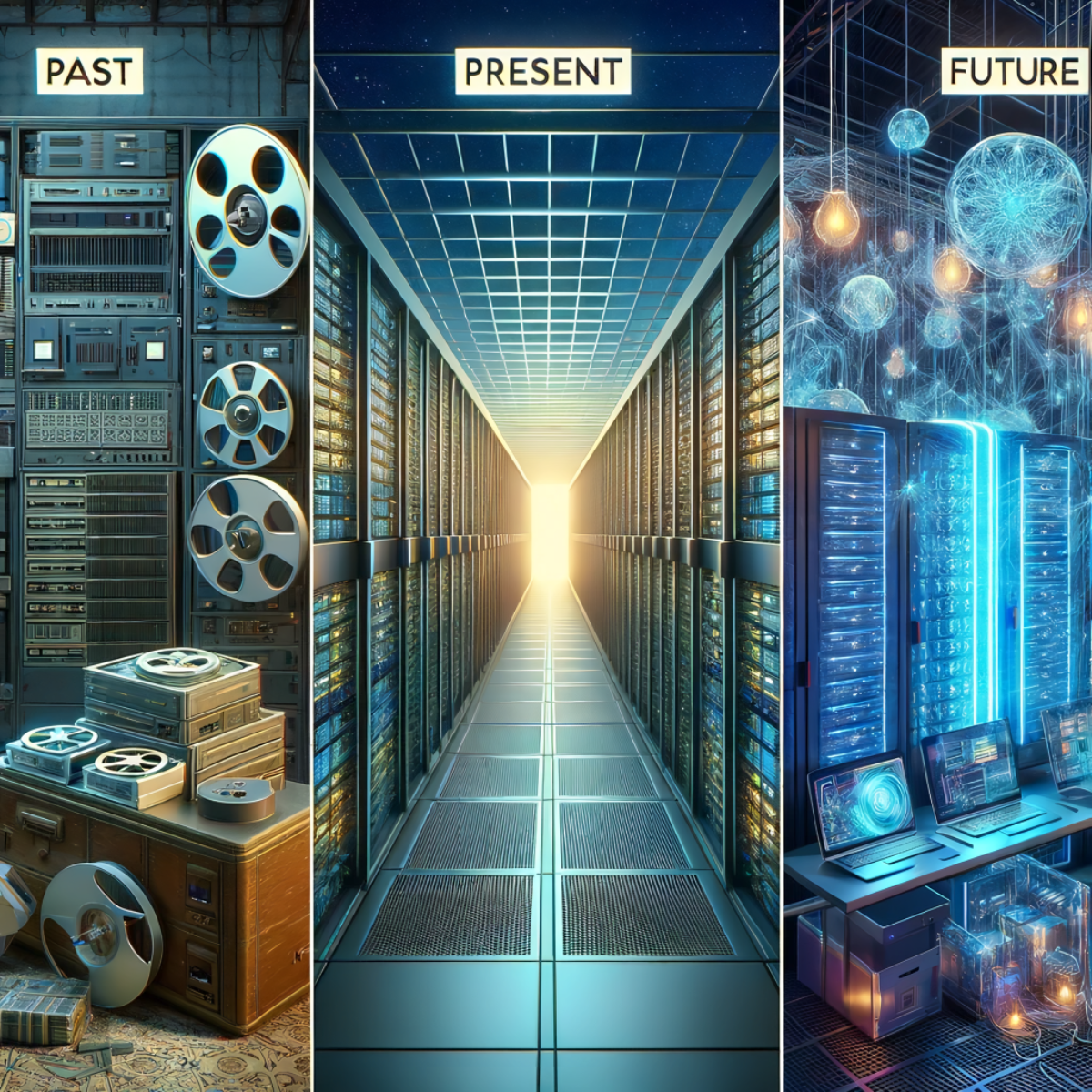

Data centers have come a long way since their inception and play a crucial role in today’s ever-expanding digital age. From humble beginnings to the advanced facilities of today, they have evolved and adapted to meet the growing demands of technology. This article traces the journey of data centers from their early stages, highlights how they have transformed our world, and explores the advances that will shape their future. Let’s look at how data centers became an integral part of modern society.

The Early Days: A Glimpse into the Origins of Data Centers

In the early days, data centers were little more than small rooms filled with mainframe computers and stacks of punch cards. These rooms were often housed in large corporate buildings or universities, where they served as the central hub for storing and processing data.

As technology advanced, so too did data centers. In the 1970s and 1980s, companies began to realize the importance of centralized IT infrastructure and started building larger dedicated facilities to house their growing computer systems. These facilities featured raised floors for cable management, cooling systems to prevent overheating, and backup power supplies to ensure continuous operation.

Despite these advancements, data centers still faced numerous challenges in their early days. They had limited storage capacity compared to today’s standards and were prone to frequent outages due to hardware failures or power disruptions. However, these challenges would pave the way for future innovations in data center design and serve as the foundation for what we now know as modern-day data centers.

The Birth of Mainframes: The Foundation of Modern Data Centers

Mainframes, the early pioneers of data processing, marked a turning point in the evolution of data centers. Developed in the 1950s and 1960s, these colossal machines revolutionized computing capabilities by enabling large-scale data handling and storage. With their immense size and power, mainframes became the foundation upon which modern data centers were built.

Mainframe computers offered features that set them apart from earlier machines: extensive memory capacity, reliable performance, and robust security measures. Security was an essential requirement for businesses handling sensitive information. These powerful machines played a critical role in banking, insurance, and government, where vast amounts of data needed to be processed rapidly.

Mainframe technology brought organizations closer to centralized control over their growing volumes of critical information. By deploying mainframes in controlled environments known as “data centers,” companies could streamline operations while maximizing uptime. That priority has endured throughout the history of data center evolution.

From Punch Cards to Magnetic Tapes: Data Storage in the Early Years

Early Data Storage Methods

In the early years of data storage, punch cards were commonly used to store and process information. These cards had holes punched in specific positions to represent data, allowing for automated processing by computers. However, punch cards were limited in their storage capacity and required manual handling.

To address these limitations, magnetic tape technology was introduced. Magnetic tapes allowed for larger amounts of data to be stored and retrieved more efficiently. They consisted of a thin plastic strip coated with a magnetic recording material, enabling them to be read or written using tape drives. Magnetic tapes revolutionized data storage, as they offered greater capacity and faster access times compared to punch cards.

Challenges Faced

Though magnetic tape technology brought significant improvements over punch cards, it also presented challenges. The physical nature of tapes made them vulnerable to damage from environmental factors such as humidity or heat. Additionally, accessing specific parts of the data stored on a tape could be time-consuming due to the linear nature of reading the information sequentially.

Despite these challenges, magnetic tape remained an essential storage medium throughout the early development of data centers. It paved the way for the digital storage technologies we rely on today.

The Rise of Minicomputers: Smaller, Faster, and More Efficient

Minicomputers, which appeared in the 1960s and spread widely in the 1970s, changed the way data centers operated. Much smaller and less expensive than mainframes, they still offered substantial processing power and became a common fixture in computer rooms.

- Compact Size: Unlike mainframes, minicomputers took up far less physical space, allowing more efficient use of floor and rack space in data centers.

- Processing Power: Despite their smaller size, minicomputers offered capable processing and could handle complex workloads and multiple tasks at once.

- Energy Efficiency: Minicomputers drew less power than mainframes while still delivering useful performance, which translated into lower energy consumption and operating costs.

In short, the rise of minicomputers marked a significant advance in the evolution of data centers. Their compact size, processing power, and energy efficiency made computing accessible to many more organizations and paved the way for the smaller, distributed systems that followed.

The Advent of Local Area Networks: Connecting Computers for Better Communication

Local Area Networks (LANs) revolutionized the way computers communicate with each other, enhancing collaboration and data sharing within organizations. LANs allow multiple computers in close proximity to connect and share resources, such as files, printers, and applications.

- LAN technology emerged in the 1970s, enabling organizations to establish a private network within their premises.

- The introduction of LANs eliminated the need for individual connections between every computer and peripheral device.

- Centralized servers provided shared resources, such as files, printers, and applications, to the computers on a LAN.

This marked a significant shift in data center design. Instead of relying solely on mainframes or stand-alone machines, organizations could now harness the power of interconnected systems to improve productivity and streamline operations.

The Emergence of Client-Server Architecture: Distributing Workload for Enhanced Performance

Client-server architecture emerged as a solution to the increasing demand for faster and more efficient data processing. In this model, tasks are divided between two roles, which improves performance:

- Clients: the devices or programs, typically on users’ machines, that request services.
- Servers: the machines that provide those services.

- Enhanced Efficiency: In client-server architecture, the workload is divided between multiple computers, with each server handling specific tasks. This distribution allows for increased efficiency as servers can specialize in certain functions or processes.

- Improved Scalability: By using client-server architecture, organizations have the flexibility to add or remove servers based on their needs without disrupting operations. This scalability ensures that resources can be allocated efficiently, even during periods of high demand.

- Centralized Data Management: In a client-server architecture, servers store and manage data centrally. This makes access control easier and allows stronger security measures than decentralized systems, where data may be scattered across many devices.

Overall, the emergence of client-server architecture played a significant role in enhancing performance within data centers by distributing workloads across different machines while providing centralized storage and management capabilities.
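The request/response split can be sketched with Python's standard `socket` and `threading` modules. One thread plays the server, which performs a specialized task; the main thread plays a client that sends a request and waits for the result.

Everything runs on localhost. The upper-casing "service" and the function names are hypothetical stand-ins for real server work.

```python
import socket
import threading


def recv_until_closed(sock: socket.socket) -> bytes:
    """Read from a socket until the peer signals it has finished sending."""
    chunks = []
    while True:
        chunk = sock.recv(1024)
        if not chunk:
            return b"".join(chunks)
        chunks.append(chunk)


def serve_one_request(server_sock: socket.socket) -> None:
    """Server: accept one client, perform its specialized task, and send the result back."""
    conn, _addr = server_sock.accept()
    with conn:
        request = recv_until_closed(conn).decode("utf-8")
        conn.sendall(request.upper().encode("utf-8"))  # hypothetical "service": upper-casing text


def send_request(port: int, text: str) -> str:
    """Client: send a request to the server and wait for its response."""
    with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
        client.sendall(text.encode("utf-8"))
        client.shutdown(socket.SHUT_WR)  # tell the server the request is complete
        return recv_until_closed(client).decode("utf-8")


# Port 0 asks the operating system for any free port on localhost.
server = socket.create_server(("127.0.0.1", 0))
port = server.getsockname()[1]
worker = threading.Thread(target=serve_one_request, args=(server,))
worker.start()

reply = send_request(port, "hello from the client")
worker.join()
server.close()
print(reply)  # HELLO FROM THE CLIENT
```

The client knows nothing about how the work is done; it only knows the server's address. This separation is what lets organizations add, replace, or specialize servers without changing the clients.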

The Internet Revolution: Data Centers in the Age of Connectivity

With the advent of the internet, data centers have become an integral part of our daily lives. These large facilities house numerous servers and networking equipment that enable us to access information and services instantaneously.

- Efficiency and Scale:

  - Data centers are designed for efficiency and scale, with advanced cooling systems and power management solutions.

  - They can handle enormous volumes of data simultaneously, providing reliable connectivity for users worldwide.

- Global Connectivity:

  - Today’s data centers form a vast network that spans continents, connecting people across borders in ways never before imagined.

  - This global connectivity has enabled seamless communication, collaborative work environments, efficient cloud computing services, and much more.

- The Rise of Cloud Computing:

  - Data centers play a crucial role in cloud computing by providing the storage and processing power required to host applications remotely.

  - This shift toward cloud-based solutions has changed how businesses operate by offering scalability, flexibility, reliability, and cost-effectiveness.

As technology continues to advance, data centers will remain at the forefront of innovation, supporting our ever-growing reliance on digital connectivity.

Virtualization: Maximizing Efficiency and Utilization of Resources

Virtualization has proven to be a game-changer in the world of data centers. By separating physical infrastructure from software applications, virtualization allows for better utilization of resources and increased efficiency.

- With virtualization, multiple operating systems can run on a single server, eliminating the need for individual machines for each application.

- This consolidation reduces power consumption, space requirements, and cooling needs.

- Through virtual machines (VMs), organizations experience improved agility as they are able to quickly deploy new software environments without the constraints of physical hardware.
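Consolidating many workloads onto fewer servers is essentially a bin-packing problem. The sketch below assumes each VM is characterized only by its CPU demand and uses the first-fit decreasing heuristic. That heuristic is a swapped-in illustrative technique; production schedulers also weigh memory, storage, and network and can live-migrate VMs. The workload figures are hypothetical.

```python
from typing import List


def consolidate(vm_demands: List[int], host_capacity: int) -> List[List[int]]:
    """Place VMs onto as few hosts as possible using first-fit decreasing."""
    hosts: List[List[int]] = []
    for demand in sorted(vm_demands, reverse=True):  # place the largest VMs first
        if demand > host_capacity:
            raise ValueError(f"VM needing {demand} cores cannot fit on any host")
        for host in hosts:
            if sum(host) + demand <= host_capacity:  # first host with room wins
                host.append(demand)
                break
        else:
            hosts.append([demand])                   # no room anywhere: add another host
    return hosts


# Eight workloads (CPU cores) that would otherwise each need their own physical server.
workloads = [4, 2, 6, 1, 3, 2, 5, 1]
placement = consolidate(workloads, host_capacity=8)
print(len(workloads), "->", len(placement), "hosts")  # 8 -> 3 hosts
for i, host in enumerate(placement, start=1):
    print(f"host {i}: {host} ({sum(host)}/8 cores)")
```

Five fewer physical servers means correspondingly less power, floor space, and cooling, which is the saving the list above describes.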

Virtualization also offers benefits such as:

- Enhanced disaster recovery capabilities by enabling easy migration of VMs between different servers or even data centers.

- Increased security through isolation of sensitive applications within dedicated VMs.

- Simplified management with centralized control over multiple VMs.

Overall, virtualization plays a crucial role in maximizing the efficiency and utilization of resources within modern-day data centers. Its flexibility facilitates seamless scalability while reducing the costs of hardware procurement and maintenance. As technology continues to advance, virtualization is likely to keep evolving alongside it.

The Cloud Computing Era: Data Centers in the Digital Age

With the advent of cloud computing, data centers have taken on a crucial role in shaping our digital future. These centralized hubs now store vast amounts of data and provide access to it over the internet.

- Data centers have become essential for businesses and individuals alike, as they offer scalable storage solutions that can be accessed anytime, anywhere.

- As more applications and services move to the cloud, data centers have had to evolve to meet increasing demands. They now utilize advanced technologies like virtualization and containerization to maximize efficiency and optimize resource allocation.

- Furthermore, the rise of edge computing has led to smaller data centers being deployed closer to end-users. This reduces latency and improves performance for time-sensitive applications such as autonomous vehicles or real-time gaming.

In this digital age, data centers are truly the backbone of our connected world. Their ongoing evolution ensures that we can continue to harness technology’s potential far into the future.

Green Data Centers: Sustainability and Energy Efficiency in the Modern Era

In the modern era, the focus of data center design has shifted toward sustainability and energy efficiency, leading to the emergence of green data centers. These eco-friendly facilities are designed to minimize their environmental impact while maximizing efficiency.

- Energy-efficient infrastructure: Green data centers employ innovative technologies like virtualization and advanced cooling systems to reduce energy consumption. By optimizing server utilization and airflow management, these facilities can significantly lower their carbon footprint.

- Renewable energy sources: Another key feature of green data centers is their reliance on renewable energy sources such as solar or wind power. By harnessing clean energy, these facilities not only help combat climate change but also reduce operating costs.

- Waste reduction and recycling: Green data centers prioritize waste reduction through efficient equipment disposal practices and recycling programs for materials like e-waste. This commitment to responsible waste management contributes to a more sustainable future for the industry.
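One common way to quantify the energy efficiency described above is Power Usage Effectiveness (PUE). It is the ratio of total facility energy to the energy used by IT equipment; a value closer to 1.0 means less energy goes to cooling, power conversion, and other overhead. The readings below are hypothetical.

```python
def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """PUE = total facility energy / energy delivered to IT equipment (ideal value: 1.0)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Energy spent on cooling, power conversion, lighting, etc. rather than computing."""
    return total_facility_kwh - it_equipment_kwh


# Hypothetical monthly readings before and after a cooling upgrade.
before = power_usage_effectiveness(total_facility_kwh=1_800_000, it_equipment_kwh=1_000_000)
after = power_usage_effectiveness(total_facility_kwh=1_300_000, it_equipment_kwh=1_000_000)
saved = overhead_kwh(1_800_000, 1_000_000) - overhead_kwh(1_300_000, 1_000_000)

print(f"PUE before: {before:.2f}, after: {after:.2f}")  # PUE before: 1.80, after: 1.30
print(f"Overhead energy saved: {saved:,.0f} kWh")        # Overhead energy saved: 500,000 kWh
```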

The rise of green data centers represents a significant shift in how we approach technology infrastructure. As society becomes increasingly aware of our environmental impact, these sustainable solutions pave the way for a brighter future where digital innovation coexists harmoniously with ecological responsibility.

The Rise of Hyperscale Data Centers: Meeting the Demands of Big Data

As data continues to grow exponentially, traditional data centers are struggling to keep up with the demand. Enter hyperscale data centers – massive facilities designed specifically for handling big data. By leveraging economies of scale and advanced technologies, these centers can store and process vast amounts of information efficiently.

Hyperscale data centers use innovative design techniques to maximize efficiency. They employ modular construction, allowing for easy scalability as demands increase. Additionally, these centers utilize virtualization and software-defined networking to optimize resource utilization and decrease energy consumption.

With their immense storage capacity and processing power, hyperscale data centers have become a vital part of our digital infrastructure. They enable businesses to analyze large volumes of data in real time, powering artificial intelligence applications and facilitating breakthroughs in industries such as healthcare and finance.

Benefits of Hyperscale Data Centers:

- Scalability: These facilities can easily accommodate ever-growing storage needs without significant disruptions or delays.

- Cost-efficiency: With their efficient design and optimized use of resources, hyperscale data centers offer cost savings compared to traditional alternatives.

- Enhanced performance: The advanced infrastructure allows for faster processing speeds, reducing latency in accessing critical information.

- Reliability: Hyperscale data centers have redundant systems that ensure high availability and minimize the risk of downtime.
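The reliability gain from redundant systems can be estimated with a standard availability calculation. Assume components fail independently (in practice, shared power or software faults can break this assumption). Then a set of redundant components, any one of which is sufficient, is unavailable only when all of them are down: A = 1 - ∏(1 - aᵢ). The 99% component availability below is a hypothetical figure.

```python
from typing import List


def parallel_availability(component_availabilities: List[float]) -> float:
    """Availability of redundant components where any single one is enough.

    Assumes independent failures: the system is down only if every component is down.
    """
    probability_all_down = 1.0
    for a in component_availabilities:
        probability_all_down *= 1.0 - a
    return 1.0 - probability_all_down


def downtime_hours_per_year(availability: float) -> float:
    return (1.0 - availability) * 365 * 24


single = 0.99
redundant_pair = parallel_availability([0.99, 0.99])
print(f"single: {single:.4%}, about {downtime_hours_per_year(single):.1f} h/yr down")
print(f"pair:   {redundant_pair:.4%}, about {downtime_hours_per_year(redundant_pair):.2f} h/yr down")
```

Under these assumptions, duplicating a component cuts expected downtime from roughly 87.6 hours a year to under one hour.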

In conclusion, hyperscale data centers represent an evolution in the way we manage big data. With their ability to store, process, and analyze vast amounts of information at high speed, they are shaping today’s digital landscape and paving the way for even greater possibilities.

Edge Computing: Bringing Data Centers Closer to the User

Edge computing is a revolutionary concept in the world of data centers. It aims to bring data processing and storage closer to the source of its generation, reducing latency and enhancing user experience.

- The traditional model of centralized data centers located in remote areas has limitations when it comes to real-time applications.

- Edge computing proposes installing smaller, localized data centers at or near the point where data is being generated.

- This allows for faster processing and analysis, as well as improved response times for critical applications such as autonomous vehicles or industrial IoT devices.
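A back-of-the-envelope calculation shows why proximity matters. Light travels through optical fiber at roughly 200,000 km/s, about two-thirds of its speed in a vacuum, so distance alone sets a floor on round-trip time. The sketch ignores routing detours, queuing, and processing, which add more in practice; the distances are hypothetical.

```python
SPEED_IN_FIBER_KM_PER_S = 200_000  # approximate propagation speed of light in optical fiber


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone, in milliseconds."""
    return 2 * distance_km / SPEED_IN_FIBER_KM_PER_S * 1000


for label, km in [("distant cloud region", 2000), ("regional data center", 300), ("edge site", 10)]:
    print(f"{label:>22}: at least {min_round_trip_ms(km):.1f} ms")
```

For an application that must react within a few milliseconds, a 2,000 km round trip (at least 20 ms) is too slow before any processing even starts. A nearby edge site (about 0.1 ms) leaves almost the whole budget for computation.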

Modular Data Centers: Scalability and Flexibility for Rapid Deployment

Modular data centers offer a scalable and flexible solution for companies in need of rapid deployment. These data centers are designed with interchangeable modules that can be easily added or removed, allowing businesses to quickly expand their capacity as needed. With the ability to scale up or down on demand, companies can avoid the unnecessary costs associated with traditional data center construction or leasing additional space.

One key benefit of modular data centers is their flexibility. Organizations have the freedom to customize their infrastructure based on specific needs by selecting modules that are tailored to fit their requirements. Additionally, these modules can be easily moved or relocated as business demands change, providing a level of agility that traditional data centers cannot match.

Benefits of Modular Data Centers:

- Scalability: The modular nature of these data centers allows for seamless scalability, meaning businesses can increase capacity without disrupting operations.

- Rapid Deployment: Compared to conventional data centers, modular ones minimize construction time and enable quick deployment, reducing downtime and saving valuable resources.

- Cost Efficiency: Because components can be added only when they are needed, organizations reduce upfront capital expenditure and avoid powering and cooling unused capacity, which lowers operating costs.

- Design Flexibility: Various configurations allow customization based on unique business requirements, enabling organizations to adapt swiftly to evolving technology trends.

- Mobility: Unlike fixed brick-and-mortar facilities, modular centers can be relocated, which is especially useful for industries whose project sites change frequently.
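The "add only necessary components" idea comes down to simple capacity arithmetic. The following is a minimal sketch under three hypothetical assumptions: each module supplies a fixed amount of IT power in kW, the operator keeps a 20% headroom margin, and the module size and loads shown are illustrative.

```python
import math

def modules_needed(it_load_kw: float, module_capacity_kw: float,
                   headroom: float = 0.2) -> int:
    """Smallest number of identical modules covering the load plus a headroom margin."""
    if module_capacity_kw <= 0:
        raise ValueError("module capacity must be positive")
    required_kw = it_load_kw * (1 + headroom)
    return math.ceil(required_kw / module_capacity_kw)

# Hypothetical figures: 500 kW modules, IT load growing from 900 kW to 1,400 kW.
print(modules_needed(900, 500))   # 900 * 1.2 = 1080 kW -> 3
print(modules_needed(1400, 500))  # 1400 * 1.2 = 1680 kW -> 4
```

In this example, the load growth is absorbed by adding a single module rather than constructing or leasing a new facility.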

In summary, as computing demands grow and organizations need more efficient and agile ways to scale, modular data centers have proven to be valuable assets for meeting those demands quickly.

Data Center Infrastructure Management: Ensuring Optimal Performance and Reliability

Data center infrastructure management (DCIM) plays a vital role in ensuring the smooth functioning of modern data centers.

- DCIM offers an integrated view of all critical components, including servers, cooling systems, power distribution units, and network equipment.

- Real-time monitoring tools enable administrators to identify potential issues promptly and take proactive measures to avoid downtime or performance degradation.

- Using historical data analysis, DCIM helps optimize resource utilization by identifying inefficiencies and suggesting improvements.
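The monitoring and analysis described above can be illustrated with a small sketch. It compares each device's latest reading against an alert threshold, and it uses the historical average to spot under-used capacity. The device names, metric names, and thresholds are hypothetical. A real DCIM product gathers this telemetry through its own agents and protocols.

```python
from statistics import mean

# Hypothetical alert thresholds per metric.
THRESHOLDS = {"inlet_temp_c": 27.0, "pdu_load_pct": 80.0}

Readings = dict[str, dict[str, list[float]]]

def check_readings(readings: Readings) -> list[str]:
    """Return an alert for each device whose latest reading exceeds its threshold."""
    alerts = []
    for device, metrics in readings.items():
        for metric, history in metrics.items():
            limit = THRESHOLDS.get(metric)
            if limit is not None and history and history[-1] > limit:
                alerts.append(f"{device}: {metric}={history[-1]} exceeds {limit}")
    return alerts

def underused(readings: Readings, metric: str, below: float) -> list[str]:
    """Devices whose historical average for `metric` stays below `below`."""
    return [device for device, metrics in readings.items()
            if metrics.get(metric) and mean(metrics[metric]) < below]

readings = {
    "rack-a1": {"inlet_temp_c": [24.0, 25.5, 28.1], "pdu_load_pct": [70, 72, 75]},
    "rack-b4": {"inlet_temp_c": [22.0, 22.4, 22.1], "pdu_load_pct": [18, 20, 19]},
}
print(check_readings(readings))                   # ['rack-a1: inlet_temp_c=28.1 exceeds 27.0']
print(underused(readings, "pdu_load_pct", 30.0))  # ['rack-b4']
```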

As technology continues to advance at a rapid pace, data centers must evolve to meet increasing demands for computing power and storage. Implementing robust DCIM solutions is crucial for addressing these evolving needs while maintaining optimal performance and reliability.

Artificial Intelligence in Data Centers: Optimizing Operations and Security

Artificial intelligence (AI) is increasingly used to optimize data center operations and strengthen security. AI systems can analyze large volumes of telemetry in real time, enabling proactive troubleshooting and preventive maintenance. This helps prevent costly downtime and keeps critical services available.

- Real-time monitoring: AI-powered systems continuously monitor the health and performance of hardware, software, and network infrastructure. They can identify potential issues before they cause any disruption or damage.

- Predictive analytics: Advanced algorithms allow data centers to anticipate failures or capacity bottlenecks based on historical patterns, improving resource allocation and reducing operational costs.

- Enhanced cybersecurity: AI-driven security solutions detect anomalies in network traffic patterns quickly, identifying potential cyber threats before they can infiltrate the system.
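Anomaly detection in traffic or sensor data can be illustrated with a simple statistical baseline. This sketch uses a z-score test rather than any particular AI model: it flags new values that lie more than a chosen number of standard deviations from the historical mean. The traffic figures and the threshold of 3 are hypothetical.

```python
from statistics import mean, stdev

def find_anomalies(baseline: list[float], new_values: list[float],
                   z_threshold: float = 3.0) -> list[float]:
    """Flag values more than z_threshold standard deviations from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs at least 2 points
    if sigma == 0:
        return [v for v in new_values if v != mu]
    return [v for v in new_values if abs(v - mu) / sigma > z_threshold]

# Hypothetical requests per second observed during normal operation.
baseline = [980, 1010, 995, 1005, 990, 1020, 1000]
print(find_anomalies(baseline, [1015, 4800, 970]))  # [4800]
```

Production systems usually model recurring patterns such as time of day and day of week, which a single mean and standard deviation cannot capture.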

By leveraging AI capabilities within data centers, businesses can achieve higher operational efficiency while safeguarding vital assets from cyber attacks.

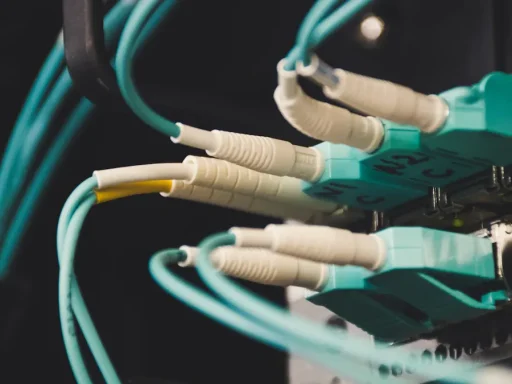

Data Center Interconnectivity: Enabling Seamless Data Transfer and Collaboration

Data center interconnectivity has emerged as a crucial factor in today’s digital landscape. It allows for seamless data transfer between different data centers, enabling organizations to collaborate effectively and exchange information effortlessly.

- Improved Efficiency: Interconnected data centers can share and replicate data across locations, so requests can be served from the site closest to the user. This reduces how often large amounts of data must travel long distances, lowering latency and improving overall operational efficiency.

- Enhanced Collaboration: With interconnected data centers, organizations can easily collaborate with partners or branches in different geographical locations. Interconnection enables real-time access to applications and resources hosted at various sites, fostering better teamwork and decision-making and ultimately boosting productivity.

- Disaster Recovery: Interconnected data centers play a vital role in disaster recovery strategies by providing redundant backup systems that support business continuity during unexpected events such as natural disasters or system failures. If one site fails, another connected site can take over with minimal interruption to critical business operations.

- Cost Savings: Sharing resources among interconnected data centers helps optimize infrastructure costs by eliminating the need for duplicate services or equipment at every location independently.
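At its simplest, the failover behavior described under Disaster Recovery is a priority-ordered health check. The following is a minimal sketch with hypothetical site names. It takes a health map as input; in practice, health would come from monitoring probes, and switching traffic would involve DNS, routing, or load-balancer changes.

```python
def choose_active_site(priority: list[str], healthy: dict[str, bool]) -> str:
    """Return the highest-priority site that is currently reported healthy."""
    for site in priority:
        if healthy.get(site, False):
            return site
    raise RuntimeError("no healthy site available")

# Hypothetical sites in order of preference.
priority = ["frankfurt", "amsterdam", "london"]
print(choose_active_site(priority, {"frankfurt": True, "amsterdam": True}))   # frankfurt
print(choose_active_site(priority, {"frankfurt": False, "amsterdam": True}))  # amsterdam
```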

Connecting these geographically dispersed hubs through high-speed networks creates a unified ecosystem in which people can collaborate regardless of physical distance. Advances in networking technology let businesses gain these benefits while avoiding the cumbersome manual processes associated with traditional remote work.

As more enterprises recognize the importance of uninterrupted connectivity across their distributed infrastructure, bandwidth-hungry workloads such as large IoT deployments are pushing network operators toward solutions like software-defined networking (SDN) and virtual private clouds (VPCs).

These developments show how today’s networking practices are laying the groundwork for tomorrow’s data center capabilities.

Security Challenges: Safeguarding Data Centers in an Evolving Threat Landscape

As technology advances, data centers face increasingly sophisticated security challenges.

- Cyber attacks have become more frequent and devastating, targeting critical infrastructure with ransomware, malware, and DDoS attacks.

- To safeguard sensitive data, organizations must implement robust network firewalls and intrusion detection systems.

- Regular vulnerability scans and patch management are crucial for identifying and addressing potential weaknesses in the system.

- Physical security measures such as biometric authentication, surveillance cameras, and access controls should be implemented to protect against unauthorized entry.

- Training employees on cybersecurity best practices is essential, as human error is often an exploitable vulnerability.

- Encrypted connections using TLS (the successor to the now-deprecated SSL protocols) should be enforced for secure communication between servers.

- Strong access controls that assign roles and privileges to users prevent unauthorized access to, or modification of, information within a data center.
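Enforcing encrypted connections usually comes down to client and server configuration. This sketch uses Python's standard `ssl` module to build a client context that verifies server certificates, checks hostnames, and refuses any protocol version older than TLS 1.2. Treating TLS 1.2 as the minimum is an assumption; organizations may require TLS 1.3.

```python
import ssl

def make_client_context(minimum: ssl.TLSVersion = ssl.TLSVersion.TLSv1_2) -> ssl.SSLContext:
    """Client-side TLS context that verifies certificates and enforces a minimum version."""
    # create_default_context() loads the system CA store, requires a valid
    # certificate, and checks that it matches the server's hostname.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    ctx.minimum_version = minimum
    return ctx

ctx = make_client_context()
print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
```

The resulting context can be passed to `ctx.wrap_socket(sock, server_hostname="...")` or to any library that accepts an `ssl.SSLContext`. Connections that would negotiate an older protocol, or that present an invalid certificate, then fail instead of silently downgrading.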

The Role of Data Centers in Disaster Recovery and Business Continuity

Data centers play a crucial role in ensuring disaster recovery and business continuity for organizations.

1. Reliable data storage and backup capabilities

With increasing reliance on digital information, it is essential to have a secure place to store and protect critical data. Data centers provide the infrastructure necessary for companies to securely store their data off-site.

2. Redundancy and resilience

Data centers are designed with redundancy measures such as power backups, cooling systems, and multiple network connections to ensure continuous operations even during natural disasters or other disruptions.

3. Short recovery time objective (RTO)

In the event of a disaster or system failure, efficient backup and restore processes help data centers bring services back within the recovery time objective, the maximum downtime the business has agreed to tolerate.

4. Geographic diversity

Having multiple geographically dispersed data center locations ensures that organizations can restore operations quickly even if one site is affected by a regional disaster.
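Simple arithmetic can check whether a recovery plan meets its RTO. This sketch makes three hypothetical assumptions. Restore time is dominated by copying the backup across the network at full link speed plus a fixed startup overhead. The data size, link speed, and overhead are illustrative. The 4-hour RTO is also illustrative.

```python
def restore_hours(data_tb: float, throughput_gbps: float, overhead_hours: float) -> float:
    """Estimated restore time: bulk transfer at full link speed plus fixed overhead."""
    data_gigabits = data_tb * 8_000  # 1 TB = 8,000 gigabits (decimal units)
    transfer_seconds = data_gigabits / throughput_gbps
    return transfer_seconds / 3600 + overhead_hours

def meets_rto(estimate_hours: float, rto_hours: float) -> bool:
    """True if the estimated restore time fits within the recovery time objective."""
    return estimate_hours <= rto_hours

# Hypothetical: 20 TB over a 10 Gbit/s link, plus 1 hour to bring services up.
estimate = restore_hours(20, 10, 1.0)
print(f"{estimate:.2f} h, meets 4 h RTO: {meets_rto(estimate, 4.0)}")  # 5.44 h, meets 4 h RTO: False
```

In this example, the plan misses a 4-hour RTO. This shows why geographically diverse sites typically keep replicas already in place rather than restoring everything over the network after a failure.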

Overall, data centers are an integral part of modern business strategies for mitigating the risks of disasters and other disruptive events while keeping operations running.

Data Centers and the Internet of Things: Supporting a Connected World

As technology continues to advance, data centers have become essential for supporting the interconnected world of the Internet of Things (IoT).

- Data Processing Power: With billions of devices connected to the internet, data centers play a vital role in processing and analyzing vast amounts of information. They ensure that data from IoT devices is collected, stored, and processed efficiently.

- Reliability and Security: As more critical processes rely on IoT, it becomes crucial for data centers to provide reliable operations. These facilities employ robust security measures to protect sensitive data against cyber threats.

- Reduced Latency: By hosting edge computing capabilities closer to end-users or IoT devices, data centers reduce latency issues associated with sending large amounts of data back and forth. This enables faster response times for real-time applications such as smart homes or autonomous vehicles.
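Edge sites can reduce the data sent back to a central data center by summarizing raw sensor readings locally and forwarding only the summary. The following is a minimal sketch of that idea. The reading format, the per-window summary fields, and the sample values are hypothetical.

```python
from statistics import mean

def summarize_window(readings: list[tuple[str, float]]) -> dict[str, dict[str, float]]:
    """Collapse raw (sensor_id, value) readings into per-sensor min/max/mean/count."""
    by_sensor: dict[str, list[float]] = {}
    for sensor_id, value in readings:
        by_sensor.setdefault(sensor_id, []).append(value)
    return {
        sid: {"min": min(vals), "max": max(vals), "mean": mean(vals), "count": len(vals)}
        for sid, vals in by_sensor.items()
    }

# One hypothetical window of raw readings from two temperature sensors.
raw = [("t1", 21.0), ("t2", 19.5), ("t1", 21.4), ("t2", 19.7), ("t1", 21.2)]
summary = summarize_window(raw)
print(summary["t1"])
```

Here five readings become two summaries, and the reduction grows with the sampling rate. The trade-off is that upstream systems no longer see the raw detail.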

With the continuous growth of IoT devices globally, data center infrastructure must continue evolving in order to meet the increasing demands for connectivity and processing power.

Emerging Technologies: Shaping the Future of Data Centers

- Artificial Intelligence (AI) to Optimize Efficiency: AI has become increasingly integral in data center operations, helping organizations streamline processes and improve efficiency. By using machine learning algorithms, AI can analyze massive amounts of data to identify patterns and optimize server utilization, cooling efficiency, and energy consumption.

- Edge Computing for Real-Time Applications: Traditional centralized data centers have limitations when it comes to handling real-time applications such as IoT devices or autonomous vehicles. Edge computing brings processing power closer to the point of data generation, reducing latency and enhancing performance. With smaller edge nodes strategically deployed at various locations, organizations can provide faster response times while minimizing network congestion.

- Hardware Innovations Driving Performance: As demand for computational power grows alongside big data analytics and high-performance workloads such as artificial intelligence and machine learning, industry leaders are developing advanced hardware technologies, such as neuromorphic chips and quantum computers, that promise unprecedented speed and processing capabilities.

In today’s rapidly evolving digital landscape, businesses around the globe recognize the critical importance of robust and reliable technology infrastructure. Digital Realty is a leading provider of data storage and tech infrastructure solutions. This article explores its range of services designed to meet businesses’ growing needs for efficient data management, seamless connectivity, and strong performance. From its data centers to its interconnection strategies, we look at how Digital Realty is changing the way organizations store and use their digital assets, and how its offerings continue to shape technology infrastructure worldwide.

The Evolution of Tech Infrastructure: A Look into Digital Realty’s Impact

As technology continues to advance at an exponential rate, the need for robust and scalable tech infrastructure has become paramount. Enter Digital Realty, a global leader in data center solutions.

A game-changer in data storage: Digital Realty has changed the way companies store their valuable data. With its approach to digital real estate, it offers secure and reliable storage solutions that can accommodate even the most demanding needs. By providing flexible options for businesses to scale up or down as necessary, it has reshaped how organizations manage their digital assets.

Building a resilient foundation: In today’s interconnected world, downtime is simply not an option. Thanks to Digital Realty’s state-of-the-art facilities and expert team of professionals, enterprises can enjoy uninterrupted access to their critical systems. By investing in redundant power supplies and advanced cooling technologies, this pioneering company ensures maximum uptime for its clients’ operations.

Digital Realty stands at the forefront of shaping our digital landscape by enabling businesses to thrive in an increasingly technology-driven era. Whether it’s through redefining data storage or building resilient foundations for uninterrupted operations, this industry leader continues to make a profound impact on tech infrastructure worldwide.

The Power of Data Storage: Exploring Digital Realty’s Solutions


Data storage is a critical aspect of any organization’s digital infrastructure. It enables businesses to securely store, manage, and access vast amounts of information with ease. Digital Realty offers innovative solutions that address the ever-growing need for efficient data storage.

- Scalable Infrastructure: Digital Realty provides scalable storage solutions that can adapt to an organization’s changing needs. Whether a business requires a small or large amount of storage space, its flexible infrastructure allows for seamless expansion without compromising performance.

- Reliability and Security: With the increasing prevalence of cyber threats, ensuring the security and reliability of data is paramount. Digital Realty employs robust security protocols and utilizes cutting-edge technology to safeguard valuable information from unauthorized access or potential breaches.

- Reduced Costs: Traditional on-site data storage can be expensive to maintain and upgrade. By leveraging Digital Realty’s state-of-the-art facilities, businesses can minimize costs associated with equipment maintenance, power consumption, cooling systems, and physical space requirements.

Digital Realty’s comprehensive range of data storage solutions empowers organizations to efficiently manage their ever-increasing amounts of information while providing peace of mind in terms of reliability and security.

Unleashing the Potential of Cloud Computing with Digital Realty

Cloud computing has transformed the way businesses operate, allowing them to tap into vast amounts of computing power and storage space without investing in expensive on-premises infrastructure. With companies increasingly relying on cloud services, having reliable data centers is crucial. This is where Digital Realty comes in.

Digital Realty is a leading provider of data center solutions that enable organizations to harness the full potential of cloud computing. Their state-of-the-art facilities offer scalable and secure environments for storing and managing vast amounts of digital information. By partnering with Digital Realty, businesses can leverage their expertise and robust infrastructure to ensure uninterrupted access to their critical applications and data.

The Advanced Capabilities of Digital Realty’s Data Centers

Digital Realty’s data centers are designed with cutting-edge technologies to meet the complex demands of modern businesses. These facilities feature high-density power configurations, redundant cooling systems, and advanced security measures – all aimed at ensuring 24/7 availability, optimal performance, and protection against cyber threats.

Additionally, the strategic locations of these data centers allow for low-latency connections across major global markets. This facilitates faster delivery speeds for cloud-based applications while optimizing network performance for end-users worldwide.

By choosing Digital Realty as a trusted partner for their tech infrastructure needs, companies can make fuller use of cloud computing while gaining reliability, scalability, and security, which can give them an edge over competitors in today’s rapidly evolving digital landscape.

Redefining Connectivity: Digital Realty’s Network Services

Digital Realty is revolutionizing connectivity with its cutting-edge network services.

- Seamless global reach: With data centers strategically located across the world, Digital Realty offers unparalleled global connectivity. Its extensive network enables businesses to efficiently connect and exchange data with partners, customers, and employees worldwide.

- Diverse interconnection options: Digital Realty provides a diverse range of interconnection options to meet the unique needs of each business. Whether it’s direct connections with leading cloud providers or access to an ecosystem of network service providers, companies can choose the connectivity solution that suits their requirements best.

- Robust security measures: Recognizing the importance of secure connections, Digital Realty protects its network services against cyber threats. Because it uses state-of-the-art security protocols and encryption techniques, businesses can trust in the reliability and confidentiality of their data transmissions.

In today’s globally connected marketplace, reliable and flexible network services are crucial for organizations seeking success in the digital age. Digital Realty addresses this need through seamless global reach, diverse interconnection options, and robust security measures.

The Role of Colocation in Modern Tech Infrastructure: Digital Realty’s Approach

Colocation plays a crucial role in modern tech infrastructure by providing businesses with the physical space and power they need to house their servers and networking equipment. Digital Realty understands this importance and offers colocation services that cater to the diverse needs of its clients.

Benefits of Colocation

- Cost savings: By opting for colocation, businesses can eliminate the significant costs associated with building and maintaining their own data centers.

- Reliability: Digital Realty’s colocation facilities are built to withstand extreme weather conditions, ensuring uptime even during unforeseen events.

- Scalability: With Digital Realty’s colocation services, businesses have the flexibility to scale their server infrastructure as needed without building or expanding data center facilities of their own.

- Enhanced security: Physical security measures like 24/7 monitoring, biometric access controls, and video surveillance ensure that clients’ critical data is protected from unauthorized access.

Why Choose Digital Realty?

- Global presence: With over 280 locations worldwide, including major metropolitan areas such as London, Singapore, and Sydney, Digital Realty provides businesses with a vast network of interconnected data centers.

- Industry expertise: As a trusted leader in data center solutions for over two decades, Digital Realty has unparalleled experience in managing complex IT infrastructures across various industries.

- Robust connectivity options: Digital Realty offers direct access to leading cloud providers such as AWS and Microsoft Azure through its ecosystem of carrier-neutral interconnectivity options.

Digital Realty’s approach to colocation goes beyond merely providing physical space. It combines reliable infrastructure support, room to scale, and enhanced security while keeping costs in check, and together these factors make it a strong choice for businesses seeking state-of-the-art tech infrastructure.

Ensuring Data Security: Digital Realty’s Robust Solutions

Robust Data Security Measures

Digital Realty takes data security seriously and has implemented robust solutions to protect their clients’ valuable information.

- They employ state-of-the-art encryption techniques to safeguard data both at rest and in transit.

- Physical security is also a top priority, with strict access controls in place to prevent unauthorized entry to their facilities.

Industry Best Practices

Digital Realty follows industry best practices when it comes to data storage and protection.

- They use a multi-layered approach, so that if one layer of protection fails, the remaining layers continue to protect the data.

- Regular audits and assessments are conducted by third-party experts to ensure compliance with industry standards such as ISO 27001.

Disaster Recovery Solutions

In the event of a disaster or system failure, Digital Realty has comprehensive disaster recovery solutions in place.

- Their data centers are strategically located across different regions for redundancy purposes.

- Automated backup processes help minimize downtime and shorten recovery times, allowing businesses to resume operations quickly.

Green Initiatives: Digital Realty’s Commitment to Sustainable Tech Infrastructure

Digital Realty has taken significant steps towards creating a sustainable future with its commitment to green initiatives. By implementing innovative strategies, the company is reducing energy consumption and minimizing its carbon footprint.

Energy Efficiency Measures

To ensure efficient use of resources, Digital Realty utilizes advanced cooling technologies in their data centers. These systems optimize airflow and temperature control, reducing energy usage while maintaining high performance levels. Additionally, they have implemented virtualization techniques that consolidate servers and minimize power requirements.
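Server consolidation through virtualization is essentially a bin-packing problem. The goal is to place virtual machines on as few physical hosts as possible, so that idle hosts can be powered down. This sketch applies the first-fit decreasing heuristic to a single resource, CPU cores. It is not presented as Digital Realty’s method. The VM sizes and the 32-core host capacity are hypothetical, and real placement also weighs memory, I/O, and redundancy.

```python
def first_fit_decreasing(vm_cores: dict[str, int], host_capacity: int) -> list[list[str]]:
    """Assign VMs to hosts with first-fit decreasing; returns the VM names per host."""
    hosts: list[list[str]] = []  # VM names placed on each host
    free: list[int] = []         # remaining cores on each host
    # Placing the largest VMs first tends to leave fewer stranded gaps.
    for name, cores in sorted(vm_cores.items(), key=lambda kv: kv[1], reverse=True):
        if cores > host_capacity:
            raise ValueError(f"{name} does not fit on any host")
        for i, remaining in enumerate(free):
            if cores <= remaining:
                hosts[i].append(name)
                free[i] -= cores
                break
        else:
            # No existing host has room, so open a new one.
            hosts.append([name])
            free.append(host_capacity - cores)
    return hosts

vms = {"db": 16, "web1": 8, "web2": 8, "cache": 12, "batch": 20, "mon": 4}
placement = first_fit_decreasing(vms, host_capacity=32)
print(len(placement), placement)
```

With these figures, six workloads fit on three hosts instead of six dedicated servers. Three hosts is also the minimum possible here, since 68 cores of demand cannot fit on two 32-core hosts.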

Renewable Energy Sources

Digital Realty actively supports the adoption of renewable energy sources for powering their facilities. They have formed partnerships with utility companies to procure wind and solar power for their data centers. By transitioning towards clean energy alternatives, the company aims to reduce reliance on fossil fuels and contribute to a greener environment.

In conclusion, Digital Realty’s dedication to sustainability sets them apart in the tech infrastructure industry. Through measures such as improved energy efficiency and utilization of renewable resources, they are making substantial progress in creating environmentally-friendly data storage solutions.

Exploring Digital Realty’s Data Center Design and Construction Methods

Building an efficient and reliable data center requires careful planning and meticulous attention to detail. Digital Realty understands this mandate, which is why they employ cutting-edge design and construction methods to meet the demands of their clients. From innovation in cooling systems to maximizing energy efficiency, here are some key aspects of their approach:

- Environmentally-friendly infrastructure: Digital Realty prioritizes sustainability by utilizing green building techniques and materials that minimize environmental impact. The company designs their data centers with features such as advanced cooling systems that significantly reduce energy consumption while maintaining optimal server performance.

- Modular architecture for scalability: One of the distinguishing factors of Digital Realty’s construction methods is using modular architectural designs. This allows for easy expansion and modification as technology demands grow or change over time. By employing prefabricated components, the construction process becomes streamlined, reducing both downtime during expansion projects and overall costs.

- Rigorous testing protocols: To ensure reliability, every aspect of a data center’s design goes through rigorous testing before deployment. This includes simulating various environmental conditions like extreme temperatures or sudden power outages to identify vulnerabilities early on and implement necessary measures accordingly.

By investing in modern infrastructure advancements like these, Digital Realty stays at the forefront of data center design and continues to deliver high-quality services tailored to meet evolving industry needs while ensuring minimal environmental impact along the way.

The Future of Edge Computing: Digital Realty’s Edge Data Centers

Edge computing is a rapidly advancing technology that is poised to revolutionize the way data is processed and stored. With traditional cloud-based architectures facing challenges in latency, security, and bandwidth consumption, edge computing offers a decentralized solution that brings processing closer to the source of data generation.

Digital Realty’s edge data centers play a crucial role in enabling this transformation. By placing these facilities close to densely populated areas or major network nodes, Digital Realty ensures low-latency connections for end-users while reducing reliance on centralized cloud infrastructure.

These edge data centers provide enhanced reliability and performance for critical applications such as Internet of Things (IoT), artificial intelligence (AI), and autonomous driving. By bringing computational power closer to where it’s needed most – at the “edge” – organizations can unlock new possibilities for faster decision-making, improved responsiveness, and reduced dependencies on centralized resources. As demand for real-time processing increases across industries from healthcare to smart cities, Digital Realty continues to lead in shaping the future of edge computing with its cutting-edge facilities and expertise.

Unlocking the Potential of Hybrid IT with Digital Realty

Hybrid IT: Maximizing Potential

Hybrid IT is revolutionizing the tech industry by harnessing the power of both on-premises and cloud-based solutions. Digital Realty, a renowned leader in data center infrastructure, understands the immense potential that lies within this hybrid approach.

Data Storage Solutions for the Modern World

As businesses navigate their digital transformation journeys, they require reliable and secure data storage solutions that can keep up with their evolving needs. Digital Realty’s state-of-the-art facilities offer scalable storage options to meet any organization’s demands.

Their innovative connectivity solutions ensure low latency and high-speed access to stored data, enhancing productivity across industries such as finance, healthcare, and e-commerce.

Digital Realty also prioritizes security through strategic partnerships with leading cybersecurity providers. As a result, businesses gain peace of mind knowing that their critical information is safeguarded against cyber threats.

Digital Realty’s Interconnection Solutions: Enabling Seamless Communication

Digital Realty offers a range of interconnection solutions that facilitate smooth and efficient communication between businesses.

- Direct Connect: Through their Direct Connect service, Digital Realty enables direct and private connectivity between customers and major cloud providers like AWS, Microsoft Azure, and Google Cloud. This eliminates the need for data to travel through public networks, resulting in lower latency and improved security.

- Internet Exchange: With Digital Realty’s Internet Exchange platform, customers can connect to multiple internet service providers (ISPs) via a single physical connection. This allows for faster data exchange and redundancy, ensuring reliable network performance.

- Service Exchange: The Service Exchange feature enables enterprises to establish private connections with various service providers such as managed hosting services or content delivery networks (CDNs). By bypassing the public internet, organizations benefit from better bandwidth availability and reduced latency.

Digital Realty recognizes the importance of seamless communication in today’s interconnected world. Their interconnection solutions provide businesses with the infrastructure needed to enhance performance, capitalize on cloud services efficiently, and improve overall productivity.

The Importance of Disaster Recovery: Digital Realty’s Strategies


In today’s technology-driven world, disaster recovery strategies are essential for businesses to protect their data and ensure uninterrupted operations. Digital Realty understands the significance of disaster recovery and has implemented robust measures to address potential risks.

- Data Loss Prevention: Digital Realty employs advanced technologies that continuously monitor, backup, and replicate critical data across multiple locations. This redundancy minimizes the risk of data loss in case of system failures or disasters.

- Business Continuity Planning: To mitigate disruptions caused by unforeseen events such as power outages or natural disasters, Digital Realty maintains redundant power feeds, backup generators, and alternative connectivity options. These measures ensure seamless operations even during emergencies.

- Testing and Validation: Regular testing is crucial to validate the effectiveness of disaster recovery plans. Digital Realty conducts periodic system-level tests to identify vulnerabilities and refine its strategies continually.

By prioritizing disaster recovery efforts through comprehensive planning, continuous monitoring, and rigorous testing procedures, Digital Realty provides customers with a robust tech infrastructure that ensures secure storage solutions while minimizing downtime risks.

Exploring Digital Realty’s Global Footprint: A World-Class Network

Digital Realty’s Global Footprint

- With data centers located across the globe, Digital Realty has established a truly world-class network.

- Their global footprint includes over 275 facilities in various strategic markets worldwide.

- These locations are chosen for their proximity to key business hubs and major metropolitan areas, ensuring optimal connectivity and accessibility.

Unmatched Connectivity

- One of Digital Realty’s main advantages is its extensive interconnection capabilities.

- Through their Data Hub Connect™ platform, customers can have direct access to a wide range of networks and cloud service providers.

- This ensures seamless connectivity and enhances performance for businesses relying on digital infrastructure.

Commitment to Sustainability

- In addition to their vast network, Digital Realty demonstrates a strong commitment to sustainability practices.

- They prioritize energy efficiency in their data center operations, utilizing innovative technologies like airside economization and low-power servers.

- This not only reduces environmental impact but also helps businesses save costs by minimizing energy consumption.
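Airside economization means cooling with outside air whenever conditions allow, rather than running mechanical chillers. The following is a simplified mode selector built on hypothetical placeholders. It assumes a 24 °C supply-air setpoint, a 35 °C return-air temperature, and a 15 °C dew-point limit. Real controllers use site- and manufacturer-specific logic.

```python
def cooling_mode(outdoor_temp_c: float, outdoor_dew_point_c: float,
                 supply_setpoint_c: float = 24.0, return_air_c: float = 35.0,
                 max_dew_point_c: float = 15.0) -> str:
    """Choose a cooling mode from outdoor conditions (simplified illustration)."""
    if outdoor_dew_point_c > max_dew_point_c:
        # Too humid: bringing outside air in directly risks condensation.
        return "mechanical"
    if outdoor_temp_c <= supply_setpoint_c:
        # Outside air, mixed with warm return air as needed, can meet the setpoint alone.
        return "economizer"
    if outdoor_temp_c < return_air_c:
        # Outside air is still cooler than the return air, so it reduces chiller load.
        return "partial"
    return "mechanical"

for temp, dew in [(10, 5), (30, 10), (38, 10), (20, 18)]:
    print(temp, dew, cooling_mode(temp, dew))
```

The energy savings come from the hours spent in the "economizer" and "partial" modes, so the benefit depends heavily on local climate.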

Cloud Connectivity Solutions: Digital Realty’s Direct Access Offerings

Digital Realty offers a range of cloud connectivity solutions that provide direct access to leading cloud service providers. This means that businesses can bypass the public internet and establish faster, more reliable connections to their preferred cloud platforms. With direct access, companies can enjoy improved performance, lower latency, and increased security for their data transfers.

By leveraging Digital Realty’s interconnection services, organizations can easily connect to top-tier cloud providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). These network solutions enable seamless integration between on-premises infrastructure and the chosen cloud platform. Whether for private or hybrid cloud deployments, Digital Realty provides efficient communication channels with minimal impact on bandwidth capacity.

With these direct access offerings, businesses can gain an edge in a rapidly evolving digital landscape. By avoiding the congestion and variability of public internet paths and using high-speed networking inside secure data center environments, companies can improve operational efficiency and the overall user experience.

Scaling for Success: Digital Realty’s Scalable Infrastructure Solutions

Digital Realty understands the importance of scalability in today’s fast-paced digital landscape. With their scalable infrastructure solutions, businesses can easily adapt and grow to meet increasing demands.

Their data center facilities are designed to accommodate changing needs, allowing businesses to scale up or down as required. This flexibility ensures that companies have the necessary resources to support their operations without unnecessary costs or inefficiencies.

Digital Realty also offers a range of connectivity options, including access to major network providers and internet exchanges. This enables businesses to connect with partners, customers, and suppliers around the world over reliable, high-performance links.

With Digital Realty’s scalable infrastructure solutions, businesses can focus on what matters most – their core operations – while leaving the complexities of IT infrastructure management in capable hands. Whether a company is starting small or aiming for global reach, Digital Realty has the expertise and technology needed for success in the digital era.

The Role of Artificial Intelligence in Digital Realty’s Tech Infrastructure

Artificial Intelligence (AI) plays a crucial role in Digital Realty’s tech infrastructure, enhancing efficiency and enabling advanced data management.

- AI algorithms analyze vast amounts of data to identify patterns and make predictions, allowing for more informed decision-making.

- Machine learning techniques enable the development of self-learning systems that continuously improve their performance over time without the need for explicit programming.

- AI-powered automation streamlines processes and optimizes resource allocation, resulting in cost savings and improved operational efficiencies.

By applying AI across its infrastructure, Digital Realty aims to meet the evolving demands of a rapidly changing digital landscape, giving businesses greater agility and scalability while making more efficient use of resources.

Harnessing the Power of Big Data: Digital Realty’s Analytics Solutions

Digital Realty uses advanced analytics platforms to work with big data. These platforms can analyze large volumes of structured and unstructured data in real time, providing valuable insights for its clients.

Using state-of-the-art machine learning algorithms and deep learning techniques, Digital Realty is able to uncover patterns, trends, and correlations within massive datasets. This helps businesses make informed decisions and predict future outcomes with greater accuracy.

By harnessing big data through their analytics solutions, Digital Realty offers a competitive advantage to businesses across various industries. Whether it’s optimizing operations, improving customer experiences, or enhancing cybersecurity measures, their powerful analytics tools provide actionable insights that drive business growth.

The Impact of Digital Realty’s Technology on Industry Verticals

Digital Realty’s technology supports a wide range of industry verticals. Here are a few key ways its solutions have made an impact:

- Healthcare: Digital Realty’s technology supports the healthcare industry by enabling secure and efficient storage of patient data. With advanced data centers and robust security measures, hospitals and medical facilities can store, analyze, and share sensitive information in real time, which can help improve patient care, diagnostic accuracy, and overall operational efficiency.

- Finance: In the financial sector, Digital Realty’s technology has paved the way for enhanced security and faster transactions. By leveraging advanced networking infrastructure, financial institutions can handle massive amounts of data securely while maintaining high-speed connectivity for trading platforms. This enables seamless global transactions, reducing latency and minimizing risks associated with cyber threats.

- E-commerce: Digital Realty’s tech infrastructure plays a critical role in supporting the booming e-commerce industry. Their highly scalable data centers ensure uninterrupted website performance even during peak times of online shopping events like Black Friday or Cyber Monday. Furthermore, seamless integration with cloud providers allows e-commerce businesses to effectively manage inventory levels, process orders efficiently, and provide personalized shopping experiences to customers.

Digital Realty continues to shape various industries through its innovative technological solutions that enable reliable digital operations across diverse sectors such as healthcare, finance, e-commerce, and beyond.

Digital Realty’s Commitment to Customer Support and Service Excellence

Digital Realty understands the importance of providing exceptional customer support and service.

- Dedicated Support Team: Digital Realty’s support team is available 24/7 to help customers with technical issues or concerns. Team members are trained to resolve problems quickly and effectively, minimizing disruption to clients’ operations.

- Proactive Monitoring: The company uses advanced monitoring tools to identify potential problems before they escalate into major issues, helping keep clients’ digital infrastructure performing at its best.

- Regular Performance Reviews: Digital Realty conducts regular performance reviews with its customers. These reviews allow it to address areas of concern and tailor its solutions to each customer’s specific needs.

- Continuous Learning: To keep pace with a rapidly evolving technology landscape, Digital Realty invests in ongoing training for its customer support team, so staff stay current with industry trends and can offer up-to-date solutions.

Digital Realty’s commitment to customer support and service excellence is a key part of what sets it apart from other providers. Because reliable infrastructure is crucial for businesses today, the company aims to make sure clients receive prompt, capable assistance whenever they need it.

The Future of Tech Infrastructure: A Look Ahead with Digital Realty

As artificial intelligence, cloud computing, and big data analytics advance, demand for robust and scalable tech infrastructure is expected to keep growing. This is where Digital Realty comes into play.

Digital Realty provides data center infrastructure that helps businesses operate in this fast-paced digital era. Its data centers are equipped with advanced security measures to protect sensitive information, and they are designed to handle large volumes of data with high-speed connectivity, enabling companies to process and analyze complex datasets more efficiently.

As more industries rely on data-driven decision-making, Digital Realty aims to stay ahead of that demand. By investing in technologies such as edge computing and the Internet of Things (IoT), it aims to build an ecosystem that supports real-time connectivity and intelligent processing. In the coming years, organizations can expect greater reliability, speed, and efficiency from their tech infrastructure as Digital Realty continues to develop these capabilities.

Welcome to our guide to Jira Software Data Center, Atlassian’s self-managed edition of Jira for organizations that need scale and high availability. In this article, we explore its capabilities as a project management tool. Whether you are new to Jira or looking to improve your team’s productivity, this introduction aims to give you a solid foundation for getting the most out of Jira Software Data Center and its role in your organization’s project management processes.

Understanding Jira Software Data Center: An Overview

Jira Software Data Center is a powerful tool that allows teams to collaborate and track their projects efficiently.

- Improved Performance: Jira Software Data Center is designed to keep response times fast even as the number of users and issues grows.

- High Availability: Data Center keeps your projects accessible with automatic failover; if one node goes down, users are routed to the remaining nodes.

- Scalability: As your team grows, Data Center can scale horizontally by adding more nodes to the cluster, supporting collaboration across large teams.

Using Jira Software Data Center enables organizations to optimize their project management processes and improve productivity.

Key Features and Benefits of Jira Software Data Center

Increased scalability: Jira Software Data Center offers increased scalability, allowing organizations to accommodate growing teams and projects. With the ability to distribute load across multiple nodes, it can handle large volumes of data and users without compromising performance or productivity.

Improved reliability: By providing high availability with active-active clustering, Jira Software Data Center ensures that your team will experience minimal downtime. In case one node fails, another takes over seamlessly, reducing disruptions to your workflow.
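To make the multi-node behavior described above more concrete, here is a hypothetical Python sketch of the job a load balancer does in front of a Jira Data Center cluster. Atlassian requires that load balancer to support session affinity ("sticky sessions"), so the sketch keeps each user session on the same node, spreads new sessions across healthy nodes, and moves a session to a surviving node when its node is marked down. The class name, node names, and hash-based assignment are illustrative assumptions; a real deployment uses a dedicated load balancer rather than code like this.

```python
import hashlib
from typing import Dict, List, Set


class StickyBalancer:
    """Hypothetical sketch: pin each session to one node, fail over when that node is down."""

    def __init__(self, nodes: List[str]) -> None:
        self.nodes = list(nodes)
        self.healthy: Set[str] = set(nodes)
        self.sessions: Dict[str, str] = {}  # session id -> node it is pinned to

    def mark_down(self, node: str) -> None:
        # A health check would call this when a node stops responding.
        self.healthy.discard(node)

    def mark_up(self, node: str) -> None:
        if node in self.nodes:
            self.healthy.add(node)

    def add_node(self, node: str) -> None:
        # Horizontal scaling: a new node joins the pool and can receive new sessions.
        self.nodes.append(node)
        self.healthy.add(node)

    def route(self, session_id: str) -> str:
        pinned = self.sessions.get(session_id)
        if pinned in self.healthy:
            return pinned  # session affinity: same node as the previous request
        candidates = [n for n in self.nodes if n in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy nodes available")
        # New or orphaned session: pick a healthy node deterministically from the session id.
        digest = hashlib.sha256(session_id.encode("utf-8")).digest()
        node = candidates[int.from_bytes(digest[:4], "big") % len(candidates)]
        self.sessions[session_id] = node
        return node


lb = StickyBalancer(["node1", "node2", "node3"])
first = lb.route("alice")
lb.mark_down(first)          # simulate a node failure
second = lb.route("alice")   # the session moves to a surviving node
```

The hash only decides where a new or orphaned session lands; after that, the `sessions` mapping is what keeps a user on one node until that node fails.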

Enhanced performance: With its advanced caching capabilities and distributed architecture, Jira Software Data Center optimizes performance even during peak usage times. This means faster response times for your teams and improved overall efficiency.

Extensive customization options: Jira Software Data Center can be customized through Marketplace apps (add-ons) and its REST APIs. Tailor dashboards, workflows, reports, and more to your organization’s unique requirements.
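As one illustration of customizing and automating Jira through its APIs, the Python sketch below builds (but does not send) a request to the Jira REST API v2 "create issue" endpoint, `POST /rest/api/2/issue`. The base URL, project key, and token are placeholders, and the sketch assumes your Jira version supports personal access tokens sent as a Bearer token; older setups may need a different authentication method.

```python
import json
import urllib.request


def build_create_issue_request(base_url: str, token: str, project_key: str,
                               summary: str, issue_type: str = "Task") -> urllib.request.Request:
    """Build a POST request that asks Jira to create one issue (not sent here)."""
    payload = {
        "fields": {
            "project": {"key": project_key},
            "summary": summary,
            "issuetype": {"name": issue_type},
        }
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/rest/api/2/issue",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: personal access token authentication is enabled on this instance.
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


# Placeholder values for illustration only.
req = build_create_issue_request("https://jira.example.com", "YOUR_TOKEN",
                                 "PROJ", "Fix login bug")
```

Passing the request to `urllib.request.urlopen(req)` would send it; on success Jira responds with JSON containing the new issue's `id` and `key`.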

Enterprise-level security features: Protect valuable project data by serving Jira over HTTPS (encryption in transit) and by encrypting the database and file storage it runs on (encryption at rest). You can also control access with SAML single sign-on (SSO) integration.
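On the client side, encryption in transit comes down to always calling Jira over HTTPS with certificate checks left on. The Python sketch below shows one way a script might enforce that, assuming a hypothetical base URL; the helper names are illustrative, and the TLS 1.2 floor is an example policy rather than an Atlassian requirement.

```python
import ssl
from urllib.parse import urlparse


def require_https(base_url: str) -> str:
    """Refuse a Jira base URL that would send data unencrypted."""
    if urlparse(base_url).scheme != "https":
        raise ValueError(f"refusing non-HTTPS Jira URL: {base_url}")
    return base_url


def strict_tls_context() -> ssl.SSLContext:
    """Client TLS settings: verify certificates and hostnames, allow TLS 1.2 or newer only."""
    ctx = ssl.create_default_context()  # enables CERT_REQUIRED and hostname checking
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # example policy, adjust to your own
    return ctx


# Usage with a placeholder URL:
# urllib.request.urlopen(require_https("https://jira.example.com/status"),
#                        context=strict_tls_context())
```

Using `ssl.create_default_context()` rather than building a context by hand keeps Python's secure defaults, so the sketch only tightens the minimum protocol version.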

Seamless integrations with other tools: Jira integrates with other Atlassian development tools such as Confluence and Bitbucket. Connecting these tools brings related work into one place and improves collaboration within your team.

Getting Started with Jira Software Data Center: Installation and Setup

Installing Jira Software Data Center is a straightforward process. Here are the steps you need to follow:

- System Requirements: Before installing Jira Software Data Center, confirm that each server meets the minimum requirements for memory, disk space, and operating system compatibility, and that you have a supported database.

- Download and Install: Visit Atlassian’s website to download the installer for your operating system. Once downloaded, run the installer and follow the on-screen instructions to complete the installation.

- Database Configuration: Create an empty database and a database user for Jira before running the setup wizard, so Jira can connect to it during setup.

- Application Setup Wizard: When you first open Jira in a browser, a setup wizard guides you through connecting to the database and configuring settings such as language, application title, base URL, the administrator account, and email notifications.

- License Activation: During setup, enter your Data Center license key to activate the software.

- Finally, you’re all set up with Jira Software Data Center and ready to go.

Following these steps will give you a working Jira Software Data Center installation, ready for your team to start using.
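The system-requirement and database steps above can be partly checked by script. The following is a hypothetical Python preflight sketch to run on each intended Jira node: it measures free disk space, physical memory, and whether the database host accepts TCP connections, then compares the results with thresholds you supply. The thresholds, path, and host in the example comment are placeholders, not Atlassian's published requirements; take the real figures from Atlassian's supported-platforms documentation for your Jira version.

```python
import os
import shutil
import socket
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Requirements:
    # Placeholder thresholds: replace with the values Atlassian documents for your version.
    min_free_disk_gb: float
    min_memory_gb: float


def evaluate(free_disk_gb: float, memory_gb: float, db_reachable: bool,
             req: Requirements) -> List[str]:
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if free_disk_gb < req.min_free_disk_gb:
        problems.append(f"free disk {free_disk_gb:.1f} GB < {req.min_free_disk_gb} GB")
    if memory_gb < req.min_memory_gb:
        problems.append(f"memory {memory_gb:.1f} GB < {req.min_memory_gb} GB")
    if not db_reachable:
        problems.append("database host not reachable")
    return problems


def measure(path: str, db_host: str, db_port: int) -> Tuple[float, float, bool]:
    """Collect the raw numbers from this machine (POSIX systems only)."""
    free_gb = shutil.disk_usage(path).free / 1024 ** 3
    # os.sysconf names are POSIX-specific; this sketch assumes a Linux/Unix node.
    mem_gb = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1024 ** 3
    try:
        with socket.create_connection((db_host, db_port), timeout=3):
            db_ok = True
    except OSError:
        db_ok = False
    return free_gb, mem_gb, db_ok


# Example (placeholder path, host, port, and thresholds):
# problems = evaluate(*measure("/var/atlassian", "db.example.internal", 5432),
#                     Requirements(min_free_disk_gb=10, min_memory_gb=8))
```

Keeping `evaluate` separate from `measure` lets the decision logic be tested without touching the machine or the network.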

Navigating the Jira Software Data Center Interface

Once you have accessed Jira Software Data Center, navigating the interface is simple and intuitive. The user-friendly dashboard provides an overview of your projects, issues, and team’s progress.

Here are some key features to help you navigate effectively:

- Project Navigation: Use the project sidebar to quickly switch between your different projects. It provides easy access to project-specific boards, backlog items, and reports.

- Search Bar: Locate specific issues or projects with the search bar at the top of every page. Enter keywords or an issue key (such as PROJ-123) to see results immediately.