Data center architecture refers to the design and layout of a data center facility, including the physical infrastructure, equipment, and systems that support the storage, processing, and management of data. It is crucial for organizations to implement best practices in data center architecture to ensure efficiency, reliability, and scalability.

Efficiency is a key consideration in data center design as it directly impacts the operational costs and environmental footprint of the facility. By implementing best practices for data center architecture, organizations can optimize energy usage, reduce cooling requirements, and improve overall performance.

There are several best practices that organizations can follow when designing and managing their data center architecture. These include selecting the right location and site for the facility, designing an efficient layout, choosing appropriate equipment, implementing effective cooling systems, optimizing power usage, designing for scalability and flexibility, leveraging virtualization technologies, implementing monitoring and management tools, and selecting the right hardware and software.

Key Takeaways

- Data center architecture best practices are essential for efficient and effective operations.

- Efficiency is a critical factor in data center design, impacting both cost and performance.

- Key considerations for data center efficiency include power usage, cooling systems, scalability, and virtualization.

- Best practices for cooling systems include using hot and cold aisles, optimizing airflow, and using efficient cooling technologies.

- Optimizing power usage involves using energy-efficient hardware and software, implementing power management tools, and using renewable energy sources.

Understanding the Importance of Efficiency in Data Center Design

Efficiency in data center design refers to the ability of a facility to maximize performance while minimizing energy consumption and operational costs. It involves tuning each part of the architecture, from power and cooling to equipment layout, so that resources are not wasted.

Efficient data center design offers several benefits. Firstly, it reduces energy consumption, resulting in lower utility bills and reduced environmental impact. By implementing energy-efficient technologies and practices, organizations can significantly reduce their carbon footprint.

Secondly, efficient data center design improves reliability and uptime. By optimizing cooling systems, power distribution, and equipment placement, organizations can minimize the risk of equipment failure and downtime. This is crucial for businesses that rely on their data centers to deliver critical services.

Lastly, efficient data center design helps organizations save costs. By reducing energy consumption and improving overall performance, organizations can lower their operational expenses. This includes savings on electricity bills, maintenance costs, and equipment replacement.

Key Considerations for Data Center Efficiency

When designing a data center, there are several key considerations that organizations should keep in mind to ensure efficiency.

Location and site selection is an important consideration. The location of the data center can impact its energy efficiency and reliability. It is important to select a site that is not prone to natural disasters, has access to reliable power and network infrastructure, and is in close proximity to the organization’s users or customers.

Layout and design also play a crucial role in data center efficiency. The layout should be designed to minimize the distance between equipment, reduce cable lengths, and optimize airflow. This can be achieved through the use of hot and cold aisle containment, raised floors, and efficient equipment placement.

Equipment selection is another important consideration. Organizations should choose energy-efficient servers, storage systems, networking equipment, and other hardware components. It is also important to consider the scalability and flexibility of the equipment to accommodate future growth and changes in technology.

Maintenance and management practices are also critical for data center efficiency. Regular maintenance and monitoring of equipment can help identify and address issues before they become major problems. Implementing effective management tools and processes can also help optimize resource allocation, track energy usage, and improve overall performance.

Best Practices for Cooling Systems in Data Centers

Cooling systems are a critical component of data center architecture as they help maintain optimal operating temperatures for the equipment. There are several types of cooling systems that organizations can choose from, including air-based cooling, liquid-based cooling, and hybrid cooling systems.

When selecting a cooling system, organizations should consider factors such as the heat load of the data center, the available space, energy efficiency ratings, maintenance requirements, and scalability. It is important to choose a system that can effectively remove heat from the facility while minimizing energy consumption.
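To make the heat load factor concrete, the following is a rough sizing sketch. It relies on the standard conversions of 1 watt to about 3.412 BTU per hour and 12,000 BTU per hour to one ton of cooling. The function name `cooling_load` and the `overhead_fraction` allowance for non-IT heat are illustrative assumptions, not values from any particular design guide.

```python
# Standard engineering conversion factors.
BTU_PER_HOUR_PER_WATT = 3.412
BTU_PER_HOUR_PER_TON = 12_000


def cooling_load(it_load_kw: float, overhead_fraction: float = 0.0) -> dict:
    """Estimate the cooling needed to remove the heat from a given IT load.

    overhead_fraction is a hypothetical allowance for heat that does not come
    from IT equipment (lighting, people, power conversion losses).
    """
    if it_load_kw < 0 or overhead_fraction < 0:
        raise ValueError("load and overhead must be non-negative")
    total_watts = it_load_kw * 1000 * (1 + overhead_fraction)
    btu_per_hour = total_watts * BTU_PER_HOUR_PER_WATT
    return {
        "btu_per_hour": btu_per_hour,
        "tons": btu_per_hour / BTU_PER_HOUR_PER_TON,
    }


if __name__ == "__main__":
    # Example figures are made up: 100 kW of IT load plus 10% other heat.
    load = cooling_load(100.0, overhead_fraction=0.10)
    print(f"{load['btu_per_hour']:.0f} BTU/h, {load['tons']:.1f} tons of cooling")
```

A real sizing exercise would also account for redundancy and growth headroom, which this sketch leaves out.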

There are several best practices that organizations can follow when designing and maintaining their cooling systems. Firstly, it is important to implement hot and cold aisle containment, which keeps the cold supply air and the hot exhaust air in separate streams. Preventing the two from mixing reduces energy consumption and improves cooling efficiency.

Secondly, organizations should optimize airflow management within the data center. This can be achieved through the use of raised floors, perforated tiles, and efficient equipment placement. By ensuring proper airflow, organizations can improve cooling efficiency and reduce the risk of hot spots.

Regular maintenance and cleaning of cooling systems is also crucial for efficiency. Dust and debris can accumulate on cooling equipment, reducing its effectiveness. Regular inspections and cleaning can help ensure that the cooling systems are operating at optimal levels.

Optimizing Power Usage in Data Center Design

Power usage optimization is another important aspect of data center efficiency. By reducing power consumption, organizations can lower their operational costs and minimize their environmental impact.

There are several strategies that organizations can implement to reduce power usage in their data center design. Firstly, it is important to choose energy-efficient servers, storage systems, and networking equipment. Energy-efficient hardware components consume less power while delivering the same level of performance.

Secondly, organizations should adopt power distribution and management strategies to optimize energy usage. This includes using power distribution units (PDUs) with energy monitoring capabilities, deploying power management software to control and monitor power usage, and consolidating workloads through virtualization to reduce the number of physical servers.

Efficient power distribution is also crucial for data center efficiency. By implementing redundant power supplies, uninterruptible power supply (UPS) systems, and efficient PDUs, organizations can ensure that power is delivered reliably and efficiently to the equipment.
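One common way to quantify how efficiently power reaches the equipment is Power Usage Effectiveness (PUE): total facility power divided by the power drawn by IT equipment. This article does not name a metric, so treating PUE as the yardstick is an assumption here. The sketch below also estimates an annual energy bill; the load figures and electricity price are made-up inputs.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def annual_energy_cost(total_facility_kw: float, price_per_kwh: float) -> float:
    """Yearly energy cost, assuming the facility draws a constant load."""
    return total_facility_kw * HOURS_PER_YEAR * price_per_kwh


if __name__ == "__main__":
    # Hypothetical facility: 150 kW total draw, of which 100 kW is IT load.
    print(f"PUE: {pue(150.0, 100.0):.2f}")
    print(f"Annual cost: {annual_energy_cost(150.0, 0.10):,.0f}")
```

A value closer to 1.0 means less of the power goes to overhead such as cooling and power conversion.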

Designing for Scalability and Flexibility in Data Centers

Scalability and flexibility are important considerations in data center design as they allow organizations to accommodate future growth and changes in technology.

Scalability refers to the ability of a data center to expand its capacity as demand increases. This can be achieved through the use of modular designs, where additional capacity can be added as needed. It is important to design the data center with scalability in mind to avoid costly and disruptive expansions in the future.

Flexibility refers to the ability of a data center to adapt to changes in technology and business requirements. This can be achieved through the use of standardized and modular components, virtualization technologies, and flexible cabling infrastructure. By designing for flexibility, organizations can easily upgrade or replace equipment without major disruptions to operations.

There are several best practices that organizations can follow when designing for scalability and flexibility. Firstly, it is important to use standardized and modular components that can be easily replaced or upgraded. This includes using standardized server racks, cabling infrastructure, and power distribution units.

Secondly, organizations should leverage virtualization technologies to consolidate workloads and improve resource utilization. Virtualization allows organizations to run multiple virtual machines on a single physical server, reducing the number of physical servers required and improving overall efficiency.

Lastly, organizations should design their data centers with flexible cabling infrastructure. This includes using structured cabling systems that can easily accommodate changes in technology and equipment placement. By implementing a flexible cabling infrastructure, organizations can reduce the cost and complexity of future upgrades or reconfigurations.

The Role of Virtualization in Data Center Efficiency

Virtualization is a technology that allows organizations to run multiple virtual machines on a single physical server. It plays a crucial role in data center efficiency by improving resource utilization, reducing power consumption, and simplifying management.

By consolidating workloads onto fewer physical servers, organizations can reduce the number of servers required, resulting in lower power consumption and reduced cooling requirements. This not only saves costs but also improves overall energy efficiency.

Virtualization also allows for better resource allocation and utilization. By dynamically allocating resources based on demand, organizations can optimize resource usage and improve performance. This helps prevent underutilization of resources and reduces the need for additional hardware.
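The consolidation idea can be sketched as a packing problem: place virtual machines onto as few hosts as possible. The example below uses the first-fit decreasing heuristic on a single resource (for instance, memory in GB). Real placement engines weigh several resources and constraints, so this is a simplified illustration; the VM names, sizes, and the `consolidate` function are hypothetical.

```python
def consolidate(vm_demands: dict[str, int], host_capacity: int) -> list[dict]:
    """Pack VMs onto hosts using first-fit decreasing on one resource."""
    hosts: list[dict] = []  # each host tracks remaining capacity and its VMs
    # Placing the largest VMs first tends to leave fewer unusable gaps.
    ordered = sorted(vm_demands.items(), key=lambda item: item[1], reverse=True)
    for name, demand in ordered:
        if demand > host_capacity:
            raise ValueError(f"{name} does not fit on any host")
        for host in hosts:
            if host["free"] >= demand:
                host["free"] -= demand
                host["vms"].append(name)
                break
        else:
            # No existing host had room, so bring another host into use.
            hosts.append({"free": host_capacity - demand, "vms": [name]})
    return hosts


if __name__ == "__main__":
    # Hypothetical workloads, sized in GB of memory.
    vms = {"web1": 8, "web2": 8, "db1": 24, "cache": 16, "batch": 12, "ci": 20}
    for index, host in enumerate(consolidate(vms, host_capacity=32)):
        print(f"host{index}: {host['vms']} (free: {host['free']} GB)")
```

Here six workloads that might otherwise occupy six physical servers fit on three hosts of 32 GB each.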

Furthermore, virtualization simplifies management and maintenance of the data center. By centralizing the management of virtual machines, organizations can streamline operations, reduce administrative overhead, and improve overall efficiency.

There are several best practices that organizations can follow when implementing virtualization in their data center design. Firstly, it is important to carefully plan and design the virtualization infrastructure to ensure optimal performance and scalability. This includes selecting the right hypervisor, storage systems, and networking infrastructure.

Secondly, organizations should implement effective monitoring and management tools to ensure the performance and availability of virtual machines. This includes implementing virtual machine monitoring software, performance monitoring tools, and capacity planning tools.

Lastly, organizations should regularly assess and optimize their virtualization infrastructure to ensure that it is meeting their needs. This includes regularly reviewing resource allocation, optimizing virtual machine placement, and implementing performance tuning techniques.

Implementing Monitoring and Management Tools for Efficiency

Monitoring and management tools play a crucial role in data center efficiency by providing real-time visibility into the performance and health of the infrastructure. These tools help organizations identify bottlenecks, optimize resource allocation, and proactively address issues before they impact operations.

There are several types of monitoring and management tools that organizations can implement in their data center architecture. These include environmental monitoring tools, power monitoring tools, performance monitoring tools, capacity planning tools, and configuration management tools.

Environmental monitoring tools help organizations monitor temperature, humidity, and other environmental factors in the data center. This helps identify potential cooling issues or equipment failures that could impact performance.
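A minimal sketch of what such a check might look like is shown below. The `Reading` type, the sensor name, and the limits in `LIMITS` are illustrative assumptions; actual thresholds should come from the equipment vendor or the facility's own operating policy.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor: str
    temperature_c: float
    humidity_pct: float


# Illustrative limits only, expressed as (low, high) per measured field.
LIMITS = {
    "temperature_c": (18.0, 27.0),
    "humidity_pct": (20.0, 80.0),
}


def check(reading: Reading) -> list[str]:
    """Return one alert message for each value outside its allowed range."""
    alerts = []
    for field, (low, high) in LIMITS.items():
        value = getattr(reading, field)
        if not low <= value <= high:
            alerts.append(f"{reading.sensor}: {field}={value} outside {low}-{high}")
    return alerts


if __name__ == "__main__":
    for message in check(Reading("rack-a1-inlet", 31.5, 45.0)):
        print(message)
```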

Power monitoring tools provide real-time visibility into power usage and help organizations identify areas of high power consumption or inefficiency. This allows organizations to optimize power distribution and reduce energy consumption.

Performance monitoring tools help organizations monitor the performance of servers, storage systems, networking equipment, and other components. This helps identify bottlenecks or performance issues that could impact the overall efficiency of the data center.

Capacity planning tools help organizations forecast future resource requirements and optimize resource allocation. By analyzing historical data and trends, organizations can ensure that they have the right amount of resources to meet current and future demands.
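As a simplified illustration of trend analysis, the sketch below fits a straight line to past usage with ordinary least squares and extrapolates it forward. A linear trend is an assumption that will not suit every workload, and the monthly usage figures are invented for the example.

```python
def linear_forecast(history: list[float], periods_ahead: int) -> float:
    """Fit a least-squares line to evenly spaced samples and extrapolate."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two data points")
    mean_x = (n - 1) / 2  # x values are the sample indexes 0..n-1
    mean_y = sum(history) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(history))
    variance = sum((x - mean_x) ** 2 for x in range(n))
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    # The last observed sample sits at index n - 1.
    return intercept + slope * (n - 1 + periods_ahead)


if __name__ == "__main__":
    # Hypothetical storage usage in TB for the past six months.
    usage_tb = [40, 44, 48, 52, 56, 60]
    print(f"Projected usage in 6 months: {linear_forecast(usage_tb, 6):.1f} TB")
```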

Configuration management tools help organizations manage and track changes to the data center infrastructure. This includes tracking hardware and software configurations, managing firmware updates, and ensuring compliance with industry standards and best practices.

When implementing monitoring and management tools, it is important to consider factors such as scalability, ease of use, integration with existing systems, and cost. It is also important to regularly review and update the tools to ensure that they are meeting the organization’s needs.

Choosing the Right Hardware and Software for Data Center Efficiency

Choosing the right hardware and software is crucial for data center efficiency as it directly impacts performance, reliability, and energy consumption.

When selecting hardware components such as servers, storage systems, and networking equipment, organizations should consider factors such as energy efficiency ratings, performance benchmarks, scalability, and reliability. It is important to choose hardware components that are designed for data center environments and can deliver high performance while minimizing power consumption.

Software selection is also important for data center efficiency. Organizations should choose software solutions that are optimized for performance, scalability, and energy efficiency. This includes operating systems, virtualization software, management tools, and other software applications.

When selecting software solutions, organizations should consider factors such as compatibility with existing systems, ease of use, scalability, and support. It is important to choose software solutions that can integrate seamlessly with the existing infrastructure and provide the necessary features and functionality.

Regularly reviewing and updating hardware and software is also crucial for data center efficiency. This includes upgrading hardware components to take advantage of new technologies or improved energy efficiency ratings. It also includes updating software applications to ensure that they are running on the latest versions with the latest security patches and performance improvements.

Future Trends and Innovations in Data Center Architecture for Efficiency

The field of data center architecture is constantly evolving, with new trends and innovations emerging to improve efficiency, performance, and scalability.

One emerging trend is the use of modular data center designs. Modular data centers are pre-fabricated units that can be quickly deployed and easily scaled. They offer flexibility, scalability, and reduced construction costs compared to traditional data center designs.

Another trend is the use of renewable energy sources to power data centers. With the increasing focus on sustainability and reducing carbon footprints, organizations are exploring the use of solar, wind, and other renewable energy sources to power their data centers. This reduces environmental impact and, depending on local energy prices and supply contracts, can also lower operational costs.

Edge computing is another emerging trend in data center architecture. Edge computing involves moving computing resources closer to the source of data generation, reducing latency and improving performance. This is particularly important for applications that require real-time processing or low latency, such as Internet of Things (IoT) devices and autonomous vehicles.

Artificial intelligence (AI) and machine learning (ML) are also playing a role in data center efficiency. AI and ML algorithms can analyze large amounts of data to identify patterns, optimize resource allocation, and predict failures or performance issues. This helps organizations improve efficiency, reduce downtime, and optimize resource usage.

To stay up-to-date with emerging trends and innovations in data center architecture, organizations should regularly attend industry conferences and events, participate in industry forums and communities, and engage with technology vendors and experts. It is also important to regularly review industry publications, research papers, and case studies to learn about new technologies and best practices.

In conclusion, implementing best practices in data center architecture is crucial for efficiency, reliability, and scalability. The practices covered here span location and site selection, layout and design, equipment selection, maintenance and management, cooling, power usage, scalability and flexibility, virtualization, monitoring and management tools, and hardware and software selection. Applied together, they help organizations optimize energy usage, reduce costs, and improve overall performance. Staying up-to-date with emerging trends and innovations allows organizations to keep improving efficiency and stay ahead of the competition.


Scalable data center architecture refers to the design and implementation of a data center that can easily accommodate growth and expansion. It is a crucial aspect of modern data centers, as businesses are constantly generating and storing more data than ever before. Scalability allows organizations to meet the increasing demands for storage, processing power, and network bandwidth without disrupting operations or incurring significant costs.

In today’s digital age, data is the lifeblood of businesses. From customer information to transaction records, companies rely on data to make informed decisions and drive growth. As the volume of data continues to grow exponentially, it is essential for organizations to have a scalable data center architecture in place. Without scalability, businesses may face numerous challenges such as limited storage capacity, slow processing speeds, and network congestion.

Key Takeaways

- Scalable data center architecture is essential for businesses to accommodate growth and changing needs.

- Planning for growth is crucial to ensure that the data center can handle increased demand and traffic.

- Key components of scalable data center architecture include modular design, virtualization, and automation.

- Best practices for designing a scalable data center include using standardized hardware, implementing redundancy, and optimizing cooling and power usage.

- Capacity planning is necessary to ensure that the data center can handle future growth and avoid downtime.

Understanding the Importance of Planning for Growth

Not planning for growth can have severe consequences for businesses. One of the risks is running out of storage capacity. As data continues to accumulate, organizations may find themselves struggling to store and manage their data effectively. This can lead to delays in accessing critical information and hinder decision-making processes.

Another risk is inadequate processing power. As businesses grow, they require more computing resources to handle complex tasks and analyze large datasets. Without a scalable data center architecture, organizations may experience slow processing speeds and performance bottlenecks, which can impact productivity and customer satisfaction.

On the other hand, planning for growth brings several benefits. Firstly, it allows businesses to stay ahead of the competition by ensuring they have the necessary infrastructure to support their expanding operations. Scalable data center architecture enables organizations to scale up their resources seamlessly as demand increases, ensuring they can meet customer needs efficiently.

Additionally, planning for growth helps businesses optimize their IT investments. By anticipating future requirements and designing a scalable infrastructure, organizations can avoid unnecessary expenses on hardware or software that may become obsolete or insufficient in the long run. This strategic approach to scalability ensures that businesses can adapt to changing technology trends and market demands without incurring significant costs.

Key Components of Scalable Data Center Architecture

1. Modular design: A modular design allows for the easy addition or removal of components as needed. It involves breaking down the data center into smaller, self-contained units that can be scaled independently. This modular approach enables organizations to add more storage, computing power, or network capacity without disrupting the entire data center.

2. Virtualization: Virtualization is a key component of scalable data center architecture as it allows for the efficient utilization of resources. By abstracting physical hardware and creating virtual machines, organizations can consolidate their infrastructure and allocate resources dynamically based on demand. This flexibility enables businesses to scale their computing resources up or down as needed, optimizing efficiency and reducing costs.

3. Automation: Automation plays a crucial role in scalable data center architecture by streamlining operations and reducing manual intervention. By automating routine tasks such as provisioning, configuration, and monitoring, organizations can free up IT staff to focus on more strategic initiatives. Automation also enables faster response times and improves overall efficiency, ensuring that the data center can scale seamlessly.

4. High-density computing: High-density computing refers to the ability to pack more computing power into a smaller physical footprint. This is achieved through technologies such as blade servers, which allow for higher processing capacity in a compact form factor. High-density computing is essential for scalability as it enables organizations to maximize their resources and accommodate more servers within limited space.

5. Energy efficiency: Energy efficiency is a critical consideration in scalable data center architecture due to the increasing power demands of modern IT infrastructure. By implementing energy-efficient technologies such as server virtualization, efficient cooling systems, and power management tools, organizations can reduce their energy consumption and lower operational costs. Energy efficiency also contributes to sustainability efforts and reduces the environmental impact of data centers.

Best Practices for Designing a Scalable Data Center

1. Conducting a thorough needs assessment: Before designing a scalable data center, it is essential to conduct a comprehensive needs assessment to understand the current and future requirements of the organization. This assessment should include factors such as storage capacity, processing power, network bandwidth, and anticipated growth. By gathering this information, businesses can design a data center that meets their specific needs and allows for future scalability.

2. Choosing the right hardware and software: Selecting the right hardware and software is crucial for building a scalable data center. It is important to choose components that are compatible with each other and can be easily integrated into the existing infrastructure. Additionally, organizations should consider factors such as performance, reliability, and scalability when selecting hardware and software solutions.

3. Implementing a modular design: As mentioned earlier, a modular design allows for easy scalability by breaking down the data center into smaller units. When implementing a modular design, organizations should ensure that each module is self-contained and can be scaled independently. This approach enables businesses to add or remove components without disrupting the entire data center.

4. Building in redundancy and resiliency: Redundancy and resiliency are crucial for ensuring uninterrupted operations in a scalable data center. Organizations should implement redundant components such as power supplies, network switches, and storage devices to minimize the risk of single points of failure. Additionally, backup and disaster recovery solutions should be in place to protect against data loss and ensure business continuity.

5. Planning for future growth: Scalable data center architecture should not only address current needs but also anticipate future growth. Organizations should consider factors such as projected data growth, technological advancements, and market trends when designing their data center. By planning for future growth, businesses can avoid costly upgrades or migrations down the line and ensure that their infrastructure can support their long-term objectives.

Capacity Planning for Future Growth

Capacity planning is a critical aspect of scalable data center architecture as it involves assessing current and future resource requirements. By understanding the capacity needs of the organization, businesses can ensure that their data center can accommodate growth without compromising performance or availability.

To conduct a capacity assessment, organizations should start by analyzing their current resource utilization. This includes factors such as storage capacity, CPU utilization, network bandwidth, and memory usage. By gathering this data, businesses can identify any bottlenecks or areas of inefficiency that may hinder scalability.

Once the current utilization is assessed, organizations should project future resource requirements based on anticipated growth. This involves considering factors such as data growth rates, new applications or services, and changes in user demand. By forecasting future needs, businesses can plan for additional resources and design a data center that can scale accordingly.
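The projection step can be sketched with a simple compound growth model, which estimates how long existing capacity will last. A constant monthly growth rate is an assumption made for illustration, as are the capacity figures and the `months_until_full` helper.

```python
import math
from typing import Optional


def months_until_full(
    used_tb: float, capacity_tb: float, monthly_growth_rate: float
) -> Optional[int]:
    """Whole months until usage reaches capacity under compound growth.

    Returns 0 if capacity is already exhausted and None if usage is not growing.
    """
    if used_tb <= 0 or capacity_tb <= 0:
        raise ValueError("usage and capacity must be positive")
    if used_tb >= capacity_tb:
        return 0
    if monthly_growth_rate <= 0:
        return None
    # Solve used * (1 + rate) ** months >= capacity for months.
    months = math.log(capacity_tb / used_tb) / math.log(1 + monthly_growth_rate)
    return math.ceil(months)


if __name__ == "__main__":
    # Hypothetical figures: 60 TB used of 100 TB, growing 3% per month.
    print(months_until_full(60.0, 100.0, 0.03))
```

Rerunning the calculation whenever the growth rate changes keeps the estimate in step with actual demand.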

It is important to note that capacity planning is an ongoing process and should be revisited regularly to ensure that the data center remains scalable. As business needs evolve and technology advances, organizations should reassess their capacity requirements and make necessary adjustments to their infrastructure.

Building Redundancy and Resiliency into Your Data Center

Redundancy and resiliency are crucial for ensuring the availability and reliability of a scalable data center. Redundancy refers to the duplication of critical components to minimize the risk of single points of failure. Resiliency, on the other hand, refers to the ability of the data center to recover quickly from disruptions or failures.

Building in redundancy involves implementing redundant components such as power supplies, network switches, storage devices, and cooling systems. This ensures that if one component fails, there is a backup in place to maintain operations. Redundancy can be achieved through technologies such as RAID (Redundant Array of Independent Disks) for storage redundancy or clustering for server redundancy.
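Storage redundancy has a capacity cost, which the following sketch estimates for common RAID levels using their standard usable-capacity rules. It assumes identical disks and ignores hot spares and filesystem overhead; the function name `raid_usable_tb` and the disk sizes are illustrative.

```python
def raid_usable_tb(level: str, disks: int, disk_tb: float) -> float:
    """Usable capacity for common RAID levels built from identical disks."""
    # Each entry: (minimum disk count, function giving data-bearing disks).
    rules = {
        "0": (2, lambda n: n),        # striping, no redundancy
        "1": (2, lambda n: 1),        # every disk mirrors the same data
        "5": (3, lambda n: n - 1),    # one disk's worth of parity
        "6": (4, lambda n: n - 2),    # two disks' worth of parity
        "10": (4, lambda n: n // 2),  # striped mirrored pairs
    }
    if level not in rules:
        raise ValueError(f"unsupported RAID level: {level}")
    min_disks, data_disks = rules[level]
    if disks < min_disks:
        raise ValueError(f"RAID {level} needs at least {min_disks} disks")
    if level == "10" and disks % 2:
        raise ValueError("RAID 10 needs an even number of disks")
    return data_disks(disks) * disk_tb


if __name__ == "__main__":
    for level in ("0", "5", "6", "10"):
        print(f"RAID {level}: {raid_usable_tb(level, 8, 4.0):.0f} TB usable")
```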

Resiliency is achieved through measures such as backup and disaster recovery solutions. Organizations should have regular backup processes in place to protect against data loss and ensure that critical information can be restored in the event of a failure. Additionally, disaster recovery plans should be developed to outline the steps to be taken in the event of a major disruption, such as a natural disaster or cyberattack.

It is important to regularly test and maintain redundancy and resiliency measures to ensure their effectiveness. This includes conducting regular backups, testing disaster recovery plans, and performing routine maintenance on redundant components. By proactively addressing potential vulnerabilities, organizations can minimize downtime and ensure the continuous availability of their data center.
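To put a rough number on the effect of redundancy, the sketch below computes the availability of several identical components operating in parallel. It assumes failures are independent, which real systems often violate (shared power feeds, common software bugs), so the result is an optimistic upper bound. The availability figures are made up.

```python
HOURS_PER_YEAR = 8760


def parallel_availability(component_availability: float, copies: int) -> float:
    """Availability when any one of `copies` identical components suffices."""
    if not 0 <= component_availability <= 1 or copies < 1:
        raise ValueError("availability must be in [0, 1] and copies >= 1")
    # The group is down only if every copy is down at the same time.
    return 1 - (1 - component_availability) ** copies


def downtime_hours_per_year(availability: float) -> float:
    """Expected hours of downtime per year at a given availability."""
    return (1 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    for copies in (1, 2):
        availability = parallel_availability(0.99, copies)
        hours = downtime_hours_per_year(availability)
        print(f"{copies} unit(s): {availability:.4%} available, {hours:.2f} h/year down")
```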

Network Design Considerations for a Scalable Data Center

Network design is a critical consideration in scalable data center architecture as it determines the connectivity and bandwidth available to applications and services. A well-designed network architecture ensures that data can flow efficiently between servers, storage devices, and end-users, enabling seamless scalability.

When choosing a network architecture for a scalable data center, organizations should consider factors such as performance, reliability, scalability, and security. It is important to select networking equipment that can handle high volumes of traffic and provide sufficient bandwidth for current and future needs.

Building in redundancy is also crucial for network design. Organizations should implement redundant network switches or routers to minimize the risk of network outages. Additionally, load balancing technologies can be used to distribute network traffic across multiple paths, ensuring optimal performance and availability.
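The combination of redundant paths and load balancing can be illustrated with a small round-robin selector that skips paths marked as down. Production load balancers are considerably more sophisticated, so this is only a sketch; the class name and the path names are hypothetical.

```python
class RoundRobinBalancer:
    """Rotate through paths in order, skipping any that are marked down."""

    def __init__(self, paths):
        self._paths = list(paths)
        if not self._paths:
            raise ValueError("at least one path is required")
        self._healthy = set(self._paths)
        self._next = 0

    def mark_down(self, path):
        self._healthy.discard(path)

    def mark_up(self, path):
        if path in self._paths:
            self._healthy.add(path)

    def pick(self):
        # Try each path at most once, starting from the rotation point.
        for _ in range(len(self._paths)):
            path = self._paths[self._next]
            self._next = (self._next + 1) % len(self._paths)
            if path in self._healthy:
                return path
        raise RuntimeError("no healthy paths available")


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["switch-a", "switch-b", "switch-c"])
    print([balancer.pick() for _ in range(4)])
    balancer.mark_down("switch-b")  # simulate a failed switch
    print([balancer.pick() for _ in range(4)])
```

Traffic keeps flowing over the remaining paths when one is marked down, which is the behavior redundancy is meant to provide.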

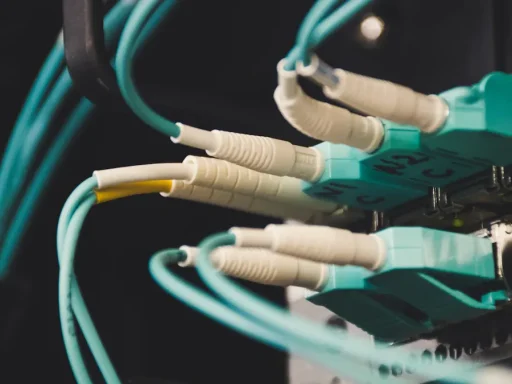

Planning for future growth is another important consideration in network design. Organizations should anticipate increasing network demands and design their infrastructure accordingly. This may involve implementing technologies such as fiber optic cables or upgrading network switches to support higher bandwidth requirements.

Storage Architecture for Scalability and Flexibility

Storage architecture plays a crucial role in scalable data center architecture as it determines how data is stored, accessed, and managed. A well-designed storage architecture enables organizations to scale their storage capacity seamlessly while ensuring high performance and data availability.

When choosing a storage architecture for scalability, organizations should consider factors such as capacity, performance, reliability, and flexibility. There are several options available, including direct-attached storage (DAS), network-attached storage (NAS), and storage area networks (SAN).

DAS involves connecting storage devices directly to servers, providing high performance and low latency. However, it may not be suitable for organizations that require shared storage or centralized management.

NAS, on the other hand, provides shared storage over a network, allowing multiple servers to access the same data. This enables organizations to scale their storage capacity easily and provides flexibility in managing data.

SAN is a more advanced storage architecture that provides high-performance shared storage over a dedicated network. It offers features such as block-level access and advanced data management capabilities. SAN is suitable for organizations with high-performance requirements and complex storage needs.

When designing a storage architecture for scalability, organizations should also consider redundancy and resiliency. Implementing technologies such as RAID or distributed file systems can provide redundancy and protect against data loss. Additionally, backup and disaster recovery solutions should be in place to ensure business continuity in the event of a failure.

Planning for future growth is essential in storage architecture design. Organizations should anticipate increasing data volumes and design their infrastructure to accommodate future storage needs. This may involve implementing technologies such as tiered storage or cloud integration to optimize cost and performance.
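A tiering policy can be as simple as placing data according to how recently it was accessed. The following is a minimal sketch of that idea; the 30-day and 180-day boundaries and the tier names are hypothetical and would need tuning for a real workload.

```python
from datetime import date

# Hypothetical tier boundaries, in days since last access.
HOT_MAX_DAYS = 30
WARM_MAX_DAYS = 180


def choose_tier(last_accessed: date, today: date) -> str:
    """Pick a storage tier from the age of the last access."""
    age_days = (today - last_accessed).days
    if age_days <= HOT_MAX_DAYS:
        return "hot"    # e.g. fast flash storage
    if age_days <= WARM_MAX_DAYS:
        return "warm"   # e.g. high-capacity disk
    return "cold"       # e.g. archive or cloud object storage


print(choose_tier(date(2024, 1, 1), date(2024, 1, 15)))
```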

Cloud Integration and Hybrid Cloud Solutions for Scalable Data Centers

Cloud integration and hybrid cloud solutions are becoming increasingly popular in scalable data center architecture. Cloud integration refers to the seamless integration of on-premises infrastructure with cloud services, while hybrid cloud solutions involve a combination of on-premises and cloud resources.

Cloud integration offers several benefits for scalability, including the ability to quickly scale up or down resources based on demand. By leveraging cloud services, organizations can offload some of their computing or storage needs to the cloud, reducing the strain on their on-premises infrastructure.

Hybrid cloud solutions provide even greater flexibility and scalability. By combining on-premises resources with cloud services, organizations can leverage the benefits of both environments. This allows for seamless scalability, as businesses can scale their on-premises infrastructure when needed and utilize the cloud for additional capacity or specialized services.

When choosing cloud integration and hybrid cloud solutions, organizations should consider factors such as data security, compliance requirements, and cost. It is important to select a cloud provider that meets the organization’s specific needs and offers the necessary scalability and reliability.

Building in redundancy and resiliency is also crucial when integrating cloud services into a scalable data center. Organizations should ensure that data is backed up and replicated across multiple locations to protect against data loss. Additionally, disaster recovery plans should include provisions for cloud resources to ensure business continuity in the event of a major disruption.

Planning for future growth is essential in cloud integration and hybrid cloud solutions. Organizations should anticipate increasing cloud usage and design their infrastructure to accommodate future needs. This may involve implementing technologies such as cloud bursting, where on-premises resources are supplemented with cloud resources during peak demand periods.
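To make the cloud bursting idea concrete, here is a small sketch of one possible placement policy: keep work on-premises until a utilization threshold is reached, then send the overflow to the cloud. The 80% threshold, the abstract "units" of demand, and the function name are assumptions for illustration, not a description of any provider's feature.

```python
def plan_burst(demand_units: int, on_prem_capacity: int,
               burst_threshold_pct: int = 80) -> tuple[int, int]:
    """Split demand into (on_prem, cloud) units.

    On-premises resources are filled up to burst_threshold_pct of their
    capacity; anything beyond that is placed in the cloud.
    """
    on_prem_limit = on_prem_capacity * burst_threshold_pct // 100
    on_prem = min(demand_units, on_prem_limit)
    cloud = demand_units - on_prem
    return on_prem, cloud


print(plan_burst(60, 100))    # normal load stays on-premises
print(plan_burst(130, 100))   # peak load overflows to the cloud
```

Keeping some headroom below full on-premises capacity is a design choice: it leaves room for short spikes while cloud resources are still starting up.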

Monitoring and Management of a Scalable Data Center for Efficient Operations

Monitoring and management are critical aspects of scalable data center architecture as they ensure efficient operations and proactive maintenance. By implementing the right monitoring and management tools, organizations can identify potential issues before they become critical and take necessary actions to maintain performance and availability.

Monitoring involves collecting data on various aspects of the data center, including server performance, network traffic, storage utilization, and environmental conditions. This data is then analyzed to identify trends, anomalies, or potential bottlenecks. By monitoring key metrics, organizations can proactively address issues and optimize resource utilization.
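One common way to turn raw metrics into actionable alerts is to flag only sustained threshold breaches, so that a single brief spike does not page anyone. The sketch below shows that approach with hypothetical CPU readings; the threshold and the three-sample window are assumptions.

```python
def sustained_breaches(samples, threshold, min_consecutive=3):
    """Return start indexes of runs that stay above threshold
    for at least min_consecutive readings in a row."""
    alerts = []
    run_start = None
    for i, value in enumerate(samples):
        if value > threshold:
            if run_start is None:
                run_start = i
            # Report each run once, at the moment it becomes long enough.
            if i - run_start + 1 == min_consecutive:
                alerts.append(run_start)
        else:
            run_start = None
    return alerts


cpu_percent = [40, 92, 50, 91, 93, 95, 97, 60]  # hypothetical readings
print(sustained_breaches(cpu_percent, threshold=90))
```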

Choosing the right monitoring tools is essential for scalable data center architecture. There are numerous options available, ranging from basic monitoring software to advanced analytics platforms. Organizations should select tools that provide real-time visibility into their infrastructure and offer features such as alerting, reporting, and capacity planning.
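Capacity planning often starts with a simple trend projection. As a sketch, the function below fits a straight line to daily storage usage with ordinary least squares and estimates how many days remain before capacity is reached. It assumes roughly linear growth, which real workloads may not follow, and the sample figures are invented.

```python
def days_until_full(daily_used_tb, capacity_tb):
    """Estimate days until capacity is reached from a linear usage trend.

    Returns None when usage is flat or shrinking.
    """
    n = len(daily_used_tb)
    if n < 2:
        raise ValueError("need at least two samples")
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(daily_used_tb) / n
    # Least-squares slope: growth in TB per day.
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, daily_used_tb))
             / sum((x - mean_x) ** 2 for x in xs))
    if slope <= 0:
        return None
    remaining_tb = capacity_tb - daily_used_tb[-1]
    return remaining_tb / slope


print(days_until_full([10, 11, 12, 13, 14], capacity_tb=20))
```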

Management involves the configuration, provisioning, and maintenance of the data center infrastructure. By implementing automation and centralized management tools, organizations can streamline operations and reduce manual intervention. This enables faster response times, reduces human errors, and improves overall efficiency.

Choosing the right management tools is crucial for scalable data center architecture. Organizations should select tools that provide a unified view of the entire infrastructure and offer features such as configuration management, provisioning, and performance optimization.

Building in redundancy and resiliency is also important in monitoring and management. Organizations should implement redundant monitoring systems to ensure continuous visibility into the data center. Additionally, backup and disaster recovery plans should include provisions for monitoring and management tools to ensure business continuity in the event of a failure.

Planning for future growth is essential in monitoring and management. Organizations should anticipate increasing monitoring and management needs as their infrastructure scales. This may involve implementing advanced analytics platforms or upgrading monitoring tools to support higher volumes of data.

Scalable data center architecture is crucial for businesses in today’s digital age. It allows organizations to meet the increasing demands for storage, processing power, and network bandwidth without disrupting operations or incurring significant costs. By understanding the importance of planning for growth, organizations can avoid risks such as limited storage capacity or inadequate processing power.

Key components of scalable data center architecture include modular design, virtualization, automation, high-density computing, and energy efficiency. Best practices for designing a scalable data center involve conducting a thorough needs assessment, choosing the right hardware and software, implementing a redundant infrastructure, and regularly monitoring and optimizing performance.

A needs assessment is crucial in understanding the current and future requirements of the data center. This involves evaluating factors such as expected growth, workload demands, and specific business needs. By conducting a thorough needs assessment, organizations can ensure that their data center architecture is designed to meet their unique requirements.

Choosing the right hardware and software is another important aspect of designing a scalable data center. This includes selecting servers, storage systems, networking equipment, and virtualization platforms that can handle the anticipated workload and provide the necessary scalability. It is also important to consider factors such as reliability, performance, and compatibility with existing systems.

Implementing a redundant infrastructure is essential for ensuring high availability and minimizing downtime. This involves deploying redundant power supplies, network connections, and storage systems to eliminate single points of failure. Redundancy can be achieved through techniques such as clustering, load balancing, and data replication.
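The effect of redundancy on availability can be estimated with basic probability. The sketch below assumes component failures are independent, which is a simplification (shared power or cooling faults can take out several components at once), and the availability figures are illustrative.

```python
import math


def parallel_availability(component_availability: float, copies: int) -> float:
    """Availability of redundant copies where any one copy suffices."""
    return 1 - (1 - component_availability) ** copies


def series_availability(availabilities) -> float:
    """Availability of a chain where every component must be up."""
    return math.prod(availabilities)


# Two redundant power feeds, each assumed to be 99% available.
power = parallel_availability(0.99, 2)
# The service needs power AND a network path assumed to be 99.9% available.
print(series_availability([power, 0.999]))
```

Duplicating a component multiplies its failure probabilities together, while chaining components multiplies their availabilities, so the weakest non-redundant link tends to dominate the result.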

Regular monitoring and optimization are critical for maintaining optimal performance in a scalable data center. This involves continuously monitoring key metrics such as CPU utilization, network traffic, and storage capacity to identify potential bottlenecks or performance issues. By proactively addressing these issues, organizations can ensure that their data center remains scalable and efficient.

In conclusion, a scalable data center combines modular design, virtualization, automation, high-density computing, and energy efficiency with disciplined practice: a thorough needs assessment, well-chosen hardware and software, redundant infrastructure, and regular monitoring and optimization. Together, these allow the architecture to scale as the organization’s needs evolve.

Managed hosting services refer to the outsourcing of IT infrastructure and management to a third-party provider, typically housed in a data center. These services allow businesses to focus on their core competencies while leaving the management and maintenance of their hosting environment to experts. Data centers play a crucial role in providing managed hosting services by offering secure and reliable infrastructure, as well as round-the-clock support.

Data centers are facilities that house servers, storage systems, networking equipment, and other critical IT infrastructure. They are designed to provide a controlled environment with redundant power supplies, cooling systems, and physical security measures. Data centers offer a range of services, including colocation hosting, dedicated hosting, cloud hosting, and managed services. These services are essential for businesses that require high-performance and secure hosting solutions but lack the resources or expertise to manage them in-house.

Key Takeaways

- Managed hosting services provide businesses with a comprehensive solution for their hosting needs in data centers.

- Benefits of managed hosting services include improved security, reduced downtime, and access to expert support.

- Managed hosting services can meet a variety of hosting needs, from basic web hosting to complex cloud infrastructure.

- Data centers play a crucial role in providing reliable and secure managed hosting services.

- Types of managed hosting services offered by data centers include dedicated hosting, cloud hosting, and colocation services.

Understanding the Benefits of Managed Hosting Services

One of the primary benefits of managed hosting services is cost savings. By outsourcing their hosting infrastructure, businesses can avoid the upfront costs associated with purchasing and maintaining hardware and software. Instead, they pay a predictable monthly fee for the services they need. Managed hosting providers also have economies of scale, allowing them to offer cost-effective solutions that would be difficult for businesses to achieve on their own.
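The cost trade-off can be framed as a break-even calculation. The sketch below compares a self-hosted setup (upfront purchase plus a lower monthly running cost) with a managed plan (no upfront cost, higher monthly fee). All figures are invented for illustration, and the model ignores hardware refresh cycles, staffing, and financing.

```python
import math


def break_even_month(upfront_cost, self_hosted_monthly, managed_monthly):
    """First month at which cumulative self-hosting cost is no higher
    than cumulative managed hosting cost, or None if that never happens."""
    monthly_saving = managed_monthly - self_hosted_monthly
    if monthly_saving <= 0:
        return None  # managed hosting is never more expensive per month
    return math.ceil(upfront_cost / monthly_saving)


# Hypothetical: 60,000 upfront and 1,000/month self-hosted vs 3,500/month managed.
print(break_even_month(60_000, 1_000, 3_500))
```

A business that expects to change its infrastructure before the break-even month would, under these assumptions, pay less with the managed plan.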

Another advantage of managed hosting services is increased reliability and uptime. Data centers are designed with redundant power supplies, backup generators, and multiple internet connections to ensure uninterrupted service. They also have skilled technicians who monitor the infrastructure 24/7 and can quickly respond to any issues that may arise. This level of reliability is crucial for businesses that rely on their websites or applications to generate revenue.

Access to expert support is another key benefit of managed hosting services. Data centers employ highly trained professionals who specialize in managing and maintaining IT infrastructure. These experts can provide assistance with server configuration, software updates, security patches, and troubleshooting. Having access to this level of support can save businesses time and resources, allowing them to focus on their core business objectives.

Improved security and compliance are also significant advantages of managed hosting services. Data centers have robust protective measures in place, including physical security, fire suppression systems, and advanced network security protocols. They also have expertise in compliance requirements, such as HIPAA or PCI DSS, and can help businesses meet these standards. This level of security and compliance is essential for businesses that handle sensitive customer data or operate in regulated industries.

How Managed Hosting Services Can Meet Your Hosting Needs

Managed hosting services offer customizable solutions that can meet the unique hosting needs of businesses. Providers work closely with their clients to understand their requirements and design a hosting environment that aligns with their goals. This customization allows businesses to have the exact infrastructure they need without the burden of managing it themselves.

Scalability and flexibility are also key features of managed hosting services. Data centers can quickly scale resources up or down based on demand. This flexibility allows businesses to adapt to changing needs without significant upfront investments or lengthy procurement processes. Whether a business experiences sudden spikes in traffic or needs to expand its infrastructure to support growth, managed hosting services can provide the necessary resources.

High-performance infrastructure is another advantage of managed hosting services. Data centers are equipped with state-of-the-art hardware and networking equipment that can deliver fast and reliable performance. They also have redundant systems in place to ensure maximum uptime and minimize any potential downtime. This level of performance is crucial for businesses that rely on their websites or applications to deliver a seamless user experience.

The Role of Data Centers in Managed Hosting Services

Data centers play a critical role in providing managed hosting services. They are responsible for housing and maintaining the infrastructure that supports businesses’ hosting needs. Data centers offer secure and reliable environments with redundant power supplies, cooling systems, fire suppression systems, and physical security measures. They also have skilled technicians who monitor the infrastructure 24/7 and can quickly respond to any issues that may arise.

Data centers also provide the necessary network connectivity for businesses’ hosting environments. They have multiple internet connections from different providers to ensure uninterrupted service. They also have advanced network security protocols in place to protect against cyber threats. This level of connectivity and security is essential for businesses that rely on their websites or applications to operate smoothly.

In addition to infrastructure and connectivity, data centers offer a range of services to support businesses’ hosting needs. These services include colocation hosting, dedicated hosting, cloud hosting, and managed services. Colocation hosting allows businesses to house their own servers in a data center facility while taking advantage of the data center’s infrastructure and support. Dedicated hosting provides businesses with their own dedicated server, offering maximum control and customization. Cloud hosting offers scalable and flexible resources on-demand, allowing businesses to pay for what they use. Managed services provide businesses with a fully managed hosting environment, including server management, software updates, security patches, and support.

Types of Managed Hosting Services Offered by Data Centers

Data centers offer a range of managed hosting services to meet the diverse needs of businesses. These services include dedicated hosting, cloud hosting, colocation hosting, and managed services.

Dedicated hosting involves leasing an entire physical server from a data center provider. This option provides businesses with maximum control and customization over their hosting environment. They have full access to the server’s resources and can configure it to meet their specific requirements. Dedicated hosting is ideal for businesses that have high-performance or resource-intensive applications or require strict security measures.

Cloud hosting is a scalable and flexible solution that allows businesses to pay for the resources they use. With cloud hosting, businesses can quickly scale up or down based on demand without the need for significant upfront investments or lengthy procurement processes. Cloud hosting is ideal for businesses that experience fluctuating traffic or need to rapidly deploy new applications or services.

Colocation hosting allows businesses to house their own servers in a data center facility while taking advantage of the data center’s infrastructure and support. With colocation hosting, businesses have full control over their hardware and software while benefiting from the data center’s secure and reliable environment. Colocation hosting is ideal for businesses that have invested in their own hardware and want to leverage the data center’s infrastructure and expertise.

Managed services provide businesses with a fully managed hosting environment. This includes server management, software updates, security patches, and support. With managed services, businesses can focus on their core competencies while leaving the management and maintenance of their hosting environment to experts. Managed services are ideal for businesses that lack the resources or expertise to manage their hosting infrastructure in-house.

Choosing the Right Managed Hosting Service Provider

When selecting a managed hosting service provider, there are several factors to consider. These factors include reliability, scalability, security, support, and cost.

Reliability is crucial when choosing a managed hosting service provider. The provider should have a documented track record of high uptime and redundant systems in place to ensure maximum availability. It is essential to ask potential providers about their uptime guarantees and their disaster recovery plans.
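Uptime guarantees are easier to compare once they are converted into an allowed downtime budget. The helper below does that arithmetic for a 365-day year; the function name is illustrative, and real service level agreements may define measurement periods and exclusions differently.

```python
HOURS_PER_YEAR = 365 * 24


def allowed_downtime_hours(uptime_percent: float,
                           period_hours: float = HOURS_PER_YEAR) -> float:
    """Hours of downtime permitted by an uptime guarantee over a period."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return (100 - uptime_percent) / 100 * period_hours


for guarantee in (99.0, 99.9, 99.99):
    hours = allowed_downtime_hours(guarantee)
    print(f"{guarantee}% uptime allows about {hours:.2f} hours of downtime per year")
```

Each additional "nine" cuts the downtime budget by a factor of ten, which is why the difference between 99.9% and 99.99% matters more than it looks.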

Scalability is another important factor to consider. The provider should be able to quickly scale resources up or down based on demand. They should also have the flexibility to accommodate future growth without significant disruptions or additional costs. It is important to ask potential providers about their scalability options and how they handle sudden spikes in traffic.

Security is a critical consideration when selecting a managed hosting service provider. The provider should have robust security measures in place to protect against cyber threats. They should also have expertise in compliance requirements, such as HIPAA or PCI DSS, if applicable to your business. It is important to ask potential providers about their security protocols and their compliance certifications.

Support is another key factor to consider. The provider should have skilled technicians who can provide assistance with server configuration, software updates, security patches, and troubleshooting. They should also offer 24/7 support to ensure prompt response times. It is important to ask potential providers about their support options and their average response times.

Cost is also an important consideration when selecting a managed hosting service provider. It is essential to compare pricing models and ensure that the provider offers transparent pricing with no hidden fees. It is also important to consider the value of the services provided and the level of expertise offered by the provider.

Ensuring Security and Compliance in Managed Hosting Services

Security and compliance are critical considerations in managed hosting services. Data centers have robust measures in place to protect against both physical and cyber threats. These measures include physical security, fire suppression systems, advanced network security protocols, and regular security audits.

Data centers also have expertise in compliance requirements and can help businesses meet these standards. Whether it is HIPAA for healthcare organizations or PCI DSS for businesses that handle credit card information, data centers can provide the necessary infrastructure and support to ensure compliance.

It is important for businesses to work closely with their managed hosting service provider to understand the security measures in place and how they align with their specific compliance requirements. Regular communication and collaboration are essential to ensure that all security and compliance needs are met.

Scalability and Flexibility of Managed Hosting Services

One of the key advantages of managed hosting services is scalability and flexibility. Data centers can quickly scale resources up or down based on demand, which allows businesses to adapt to changing needs without significant upfront investments or lengthy procurement processes.

Scalable hosting solutions allow businesses to handle sudden spikes in traffic without experiencing performance issues or downtime. This is particularly important for businesses that experience seasonal fluctuations or run marketing campaigns that drive a significant increase in traffic. With scalable hosting solutions, businesses can ensure that their websites or applications can handle the increased demand without any disruptions.
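Sizing for a traffic spike is, at its simplest, a division with headroom. The sketch below estimates how many servers a peak would need, assuming each server handles a known request rate and should run at no more than a target utilization. The request rates and the 70% target are hypothetical.

```python
import math


def servers_needed(peak_rps: float, rps_per_server: float,
                   target_utilization: float = 0.7) -> int:
    """Servers required to serve peak_rps while keeping each server
    at or below target_utilization of its rated throughput."""
    if rps_per_server <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("invalid sizing parameters")
    return math.ceil(peak_rps / (rps_per_server * target_utilization))


normal = servers_needed(300, 200)
campaign = servers_needed(1200, 200)
print(f"normal: {normal} servers, campaign peak: {campaign} servers")
```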

Flexible hosting solutions allow businesses to easily add or remove resources as needed. This is particularly important for businesses that are experiencing growth or have changing requirements. With flexible hosting solutions, businesses can quickly deploy new applications or services and adjust their infrastructure to support their evolving needs.

Managed hosting services provide businesses with the scalability and flexibility they need to stay competitive in today’s fast-paced digital landscape. By leveraging the resources and expertise of a data center, businesses can easily adapt to changing demands and focus on their core competencies.

Managed Hosting Services vs. Self-Hosting: Which is Better?

When considering hosting options, businesses often face the decision between self-hosting and managed hosting services. Both options have their pros and cons, and the choice depends on the specific needs and resources of the business.

Self-hosting involves purchasing and maintaining the necessary hardware and software to host a website or application in-house. This option provides businesses with maximum control over their hosting environment. They have full access to the hardware and software and can configure it to meet their specific requirements. Self-hosting is ideal for businesses that have the resources and expertise to manage their hosting infrastructure in-house.

However, self-hosting also comes with several challenges. It requires significant upfront investments in hardware and software, as well as ongoing maintenance costs. Businesses are responsible for ensuring the security and reliability of their hosting environment, which can be time-consuming and resource-intensive. Self-hosting also lacks the scalability and flexibility of managed hosting services, making it difficult for businesses to adapt to changing needs.

Managed hosting services, on the other hand, offer several advantages over self-hosting. By outsourcing their hosting infrastructure to a third-party provider, businesses can avoid the upfront costs associated with purchasing and maintaining hardware and software. They also benefit from the expertise of the provider, who can ensure the security, reliability, and performance of the hosting environment.

Managed hosting services also offer scalability and flexibility, allowing businesses to quickly scale resources up or down based on demand. This level of agility is crucial for businesses that experience fluctuating traffic or need to rapidly deploy new applications or services. Managed hosting services also provide access to expert support, saving businesses time and resources.

While managed hosting services offer many advantages, they may not be suitable for every business. Some businesses may have specific requirements or compliance needs that can only be met through self-hosting. It is important for businesses to carefully evaluate their options and consider their specific needs and resources before making a decision.

Why Managed Hosting Services in Data Centers are Essential for Businesses

Managed hosting services in data centers offer numerous benefits and advantages for businesses. These services provide cost savings, increased reliability and uptime, access to expert support, and improved security and compliance. They also offer customizable solutions, scalability and flexibility, and high-performance infrastructure.

Data centers play a crucial role in providing managed hosting services by offering secure and reliable infrastructure, as well as round-the-clock support. They provide a range of services, including dedicated hosting, cloud hosting, colocation hosting, and managed services. Businesses must carefully select a managed hosting service provider based on factors such as reliability, scalability, security, support, and cost.

Managed hosting services in data centers are essential for businesses that require high-performance and secure hosting solutions but lack the resources or expertise to manage them in-house. By outsourcing their hosting infrastructure to a third-party provider, businesses can focus on their core competencies while leaving the management and maintenance of their hosting environment to experts. With the scalability, flexibility, and support provided by managed hosting services in data centers, businesses can adapt to changing demands and stay competitive in today’s digital landscape.

FAQs

What are managed hosting services?

Managed hosting services refer to the outsourcing of IT infrastructure management and maintenance to a third-party provider. This includes server management, security, backups, and technical support.

What are data centers?

Data centers are facilities that house computer systems and associated components, such as telecommunications and storage systems. They are designed to provide a secure and reliable environment for IT infrastructure.

What are the benefits of managed hosting services?

Managed hosting services offer several benefits, including reduced IT infrastructure costs, improved security, increased uptime, and access to technical expertise. They also allow businesses to focus on their core competencies rather than IT management.

What types of businesses can benefit from managed hosting services?

Managed hosting services can benefit businesses of all sizes and industries. They are particularly useful for businesses that require high levels of uptime, security, and technical expertise, such as e-commerce sites, financial institutions, and healthcare providers.

What should I look for in a managed hosting services provider?

When choosing a managed hosting services provider, it is important to consider factors such as reliability, security, scalability, and technical expertise. You should also look for a provider that offers flexible pricing and customizable solutions to meet your specific hosting needs.

What is the difference between managed hosting and unmanaged hosting?

Managed hosting services involve outsourcing IT infrastructure management and maintenance to a third-party provider, while unmanaged hosting requires businesses to manage their own IT infrastructure. Managed hosting services offer greater convenience, security, and technical expertise, while unmanaged hosting offers greater control and customization options.

Data center architecture refers to the design and structure of a data center, which is a centralized facility that houses computer systems and associated components, such as telecommunications and storage systems. It is the physical infrastructure that supports the operations of an organization’s IT infrastructure. Data center architecture plays a crucial role in modern businesses as it ensures the reliability, availability, and scalability of IT services.

In today’s digital age, businesses rely heavily on technology to operate efficiently and effectively. Data centers are at the heart of this technology-driven world, providing the necessary infrastructure to store, process, and manage vast amounts of data. Without a well-designed data center architecture, businesses would struggle to meet the demands of their customers and compete in the market.

Key Takeaways

- Data centers are critical infrastructure for storing and processing digital data.

- Key components of data center architecture include network, storage, server, power and cooling systems, and security measures.

- Network architecture in data centers involves designing and managing the flow of data between servers and devices.

- Storage architecture in data centers involves selecting and configuring storage devices to meet performance and capacity requirements.

- Server architecture in data centers involves selecting and configuring servers to meet performance and workload requirements.

Understanding the Importance of Data Centers

Data centers play a vital role in modern businesses by providing a secure and reliable environment for storing and processing data. They serve as the backbone of an organization’s IT infrastructure, supporting critical business operations such as data storage, application hosting, and network connectivity.

One of the key benefits of data centers is their ability to ensure high availability and uptime for IT services. With redundant power supplies, backup generators, and cooling systems, data centers can minimize downtime and ensure that services are always accessible to users. This is especially important for businesses that rely on real-time data processing or have strict service level agreements with their customers.

Data centers also offer scalability, allowing businesses to easily expand their IT infrastructure as their needs grow. With modular designs and flexible configurations, data centers can accommodate additional servers, storage devices, and networking equipment without disrupting ongoing operations. This scalability is crucial for businesses that experience rapid growth or seasonal fluctuations in demand.

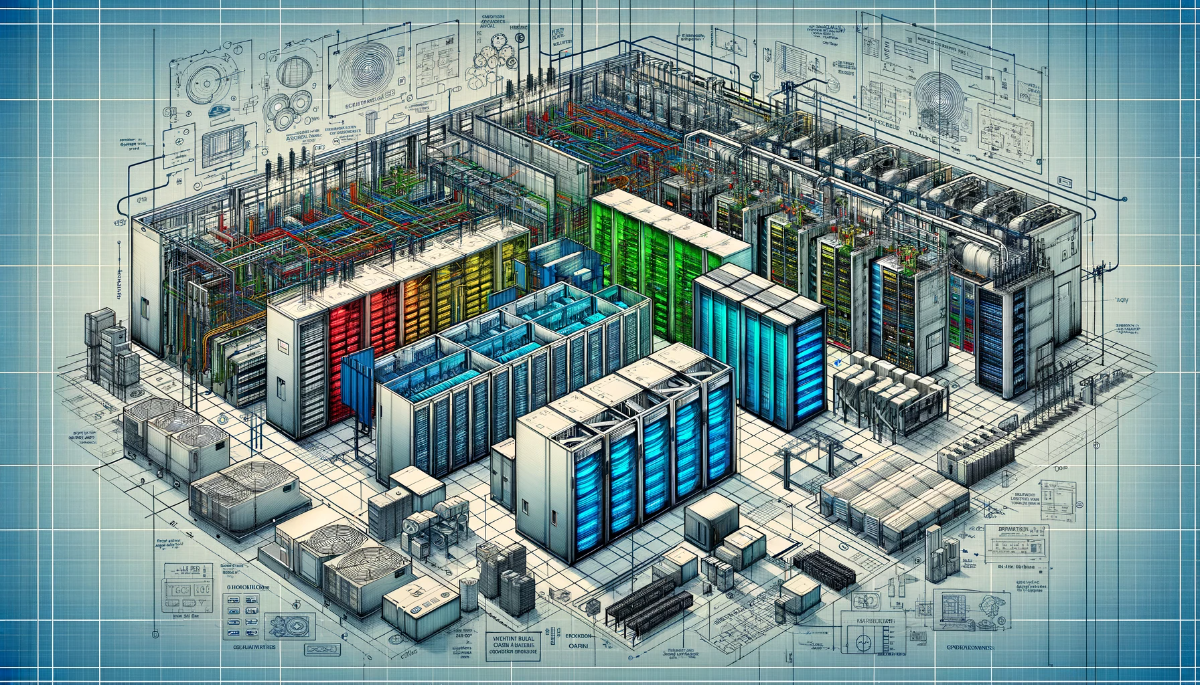

Key Components of Data Center Architecture

Data center architecture consists of several key components that work together to provide a reliable and efficient IT infrastructure. These components include network architecture, storage architecture, server architecture, power and cooling systems, and security measures.

Network architecture is responsible for connecting various devices within the data center and providing connectivity to external networks. It includes routers, switches, firewalls, and load balancers that ensure efficient data transfer and secure communication. Network architecture plays a crucial role in ensuring high performance, low latency, and reliable connectivity for IT services.

Storage architecture involves the design and implementation of storage systems that store and manage data in the data center. It includes storage area networks (SANs), network-attached storage (NAS), and backup systems. Storage architecture is essential for ensuring data availability, reliability, and scalability.

Server architecture refers to the design and configuration of servers in the data center. It includes server hardware, virtualization technologies, and server management software. Server architecture is critical for optimizing server performance, resource allocation, and workload management.

Power and cooling systems are essential components of data center architecture as they ensure the proper functioning and longevity of IT equipment. They include uninterruptible power supplies (UPS), backup generators, precision air conditioning units, and environmental monitoring systems. Power and cooling systems are crucial for maintaining optimal operating conditions and preventing equipment failures.

Security measures are an integral part of data center architecture to protect sensitive data and prevent unauthorized access. They include physical security measures such as access controls, surveillance cameras, and biometric authentication systems. Additionally, they include cybersecurity measures such as firewalls, intrusion detection systems, and encryption technologies.

Network Architecture in Data Centers

Network architecture in data centers is responsible for connecting various devices within the data center and providing connectivity to external networks. It ensures efficient data transfer, low latency, high bandwidth, and secure communication.

In a data center environment, network architecture typically consists of routers, switches, firewalls, load balancers, and other networking devices. These devices are interconnected to form a network infrastructure that enables the flow of data between servers, storage systems, and other devices.

Network architecture is the backbone of the IT infrastructure: every exchange between servers, storage systems, and external users passes through it. A well-designed network therefore delivers high performance, low latency, and reliable connectivity for IT services.

Network architecture also plays a crucial role in ensuring security within the data center. Firewalls and intrusion detection systems are used to monitor and control network traffic, preventing unauthorized access and protecting sensitive data. Load balancers distribute network traffic across multiple servers, ensuring optimal performance and preventing bottlenecks.
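As a rough illustration of how a load balancer spreads requests, the sketch below uses simple round-robin rotation. It is not how any particular product works; real load balancers typically also weigh server health and current load. The class name and the backend names are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backend servers in a fixed rotating order."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._rotation = cycle(self._backends)

    def next_backend(self):
        # Each call returns the next server in the rotation.
        return next(self._rotation)


# Hypothetical backend names, used only for illustration.
balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
assignments = [balancer.next_backend() for _ in range(6)]
print(assignments)
```

With three backends and six requests, each server receives two requests, so no single server becomes a bottleneck.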

Storage Architecture in Data Centers

Storage architecture in data centers involves the design and implementation of storage systems that store and manage data. It includes storage area networks (SANs), network-attached storage (NAS), backup systems, and other storage devices.

The main goal of storage architecture is to ensure data availability, reliability, and scalability. It provides a centralized repository for storing and managing vast amounts of data generated by modern businesses.

SANs are commonly used in data centers to provide high-performance, block-level storage. They use Fibre Channel or Ethernet-based connections (for example, iSCSI) to connect servers to storage devices, allowing for fast data transfer rates and low latency. SANs are ideal for applications that require high-speed access to large amounts of data, such as databases or virtualized environments.

NAS, on the other hand, is a file-level storage solution that provides shared access to files over a network. It is commonly used for file sharing, backup, and archiving purposes. NAS devices are easy to deploy and manage, making them suitable for small to medium-sized businesses.

Backup systems are an essential component of storage architecture as they ensure data protection and disaster recovery. They create copies of critical data and store them on separate storage devices or off-site locations. Backup systems can be tape-based or disk-based, depending on the organization’s requirements.
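A backup is only useful if the copy matches the original. The following minimal sketch copies a file to a separate directory and verifies the copy with a SHA-256 checksum. The function names, file name, and directory layout are assumptions made for illustration; a production backup system would add scheduling, retention, and off-site replication.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_and_verify(source, backup_dir):
    """Copy source into backup_dir and confirm the copy matches the original."""
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / Path(source).name
    shutil.copy2(source, target)
    if sha256_of(source) != sha256_of(target):
        raise IOError(f"backup of {source} failed verification")
    return target


# Demonstration with a throwaway file in a temporary directory.
with tempfile.TemporaryDirectory() as workdir:
    original = Path(workdir) / "records.db"  # hypothetical file name
    original.write_bytes(b"example data")
    copy = backup_and_verify(original, Path(workdir) / "backup")
    backup_matches = sha256_of(original) == sha256_of(copy)
    print(copy.name, backup_matches)
```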

Server Architecture in Data Centers

Server architecture in data centers refers to the design and configuration of servers. It includes server hardware, virtualization technologies, and server management software.

Server architecture plays a crucial role in optimizing server performance, resource allocation, and workload management. It ensures that servers are configured to meet the specific requirements of the applications and services they host.

Server hardware is a key component of server architecture. It includes physical servers, processors, memory, storage devices, and network interfaces. The choice of server hardware depends on factors such as performance requirements, scalability, and budget constraints.

Virtualization technologies are widely used in data centers to maximize server utilization and reduce hardware costs. Virtualization allows multiple virtual machines (VMs) to run on a single physical server, enabling better resource allocation and flexibility. It also simplifies server management and improves disaster recovery capabilities.
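To make the consolidation idea concrete, here is a small sketch that packs virtual machines onto physical hosts by memory alone, using a first-fit strategy. This is a simplification chosen for illustration: real schedulers also consider CPU, storage, network, and failover headroom, and the VM sizes and host capacity below are made-up numbers.

```python
def place_vms(vm_memory_gb, host_capacity_gb):
    """Assign each VM to the first host with enough free memory (first-fit)."""
    hosts = []  # each entry is the list of VM sizes placed on that host
    for vm in vm_memory_gb:
        if vm > host_capacity_gb:
            raise ValueError(
                f"a {vm} GB VM does not fit on a {host_capacity_gb} GB host"
            )
        for placed in hosts:
            if sum(placed) + vm <= host_capacity_gb:
                placed.append(vm)
                break
        else:
            hosts.append([vm])  # no existing host had room, so add one
    return hosts


# Six hypothetical VMs placed on hosts with 64 GB of memory each.
layout = place_vms([16, 32, 8, 24, 16, 8], host_capacity_gb=64)
print(f"{len(layout)} hosts needed: {layout}")
```

In this example six VMs fit on two physical hosts instead of six dedicated servers, which is the utilization gain the paragraph above describes.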

Server management software is used to monitor and control servers in the data center. It provides tools for provisioning, configuring, and managing servers remotely. Server management software helps administrators optimize server performance, troubleshoot issues, and ensure high availability of IT services.

Power and Cooling Systems in Data Centers

Power and cooling systems are essential components of data center architecture as they ensure the proper functioning and longevity of IT equipment. They provide a stable power supply and maintain optimal operating conditions for servers, storage systems, and networking devices.

Power systems in data centers typically include uninterruptible power supplies (UPS), backup generators, and power distribution units (PDUs). UPS systems provide temporary power during outages or fluctuations in the main power supply. Backup generators are used to provide long-term power during extended outages. PDUs distribute power from the UPS or generator to the IT equipment.
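A UPS only needs to carry the load until the generators start, so it helps to estimate how long the batteries will last. The sketch below uses a deliberately simplified formula: usable battery energy divided by the load. The 90% inverter efficiency and the example figures are assumptions; actual runtime depends on battery age, temperature, and the manufacturer's discharge curves.

```python
def estimate_ups_runtime_minutes(battery_wh, load_w, inverter_efficiency=0.9):
    """Rough runtime estimate: usable battery energy divided by the load."""
    if load_w <= 0:
        raise ValueError("load must be positive")
    usable_wh = battery_wh * inverter_efficiency  # energy lost in conversion
    return usable_wh / load_w * 60


# Hypothetical figures: a 5 kWh battery bank feeding a 3 kW load.
runtime = estimate_ups_runtime_minutes(battery_wh=5000, load_w=3000)
print(f"Estimated runtime: {runtime:.0f} minutes")
```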

Cooling systems in data centers are responsible for maintaining optimal operating temperatures for IT equipment. They include precision air conditioning units, ventilation systems, and environmental monitoring systems. Cooling systems remove heat generated by servers and other devices, preventing overheating and equipment failures.

Reliable power and proper cooling underpin every other component of the data center. Without them, IT equipment can suffer from downtime, performance degradation, and premature failure. Power outages and temperature fluctuations can cause data loss, service disruptions, and financial losses for businesses.

Security Measures in Data Centers

Security measures are an integral part of data center architecture to protect sensitive data and prevent unauthorized access. They include physical security measures, such as access controls and surveillance cameras, as well as cybersecurity measures, such as firewalls and encryption technologies.

Physical security measures are designed to prevent unauthorized access to the data center facility. They include access controls, such as key cards or biometric authentication systems, that restrict entry to authorized personnel only. Surveillance cameras are used to monitor the facility and deter potential intruders.

Cybersecurity measures are essential for protecting data from external threats. Firewalls are used to monitor and control network traffic, preventing unauthorized access and protecting against malware and other cyber threats. Intrusion detection systems (IDS) and intrusion prevention systems (IPS) monitor network traffic for suspicious activity and take action to prevent attacks.

Encryption technologies are used to protect data at rest and in transit. Data is encrypted before it is stored or transmitted, making it unreadable to anyone without the decryption key. Encryption limits the damage of a data breach and protects the confidentiality of sensitive information; authenticated encryption modes also detect tampering, which protects integrity.

Scalability and Flexibility in Data Center Architecture

Scalability and flexibility are crucial aspects of data center architecture as they allow businesses to easily expand their IT infrastructure as their needs grow.

Scalability refers to the ability of a data center to accommodate additional servers, storage devices, and networking equipment without disrupting ongoing operations. It allows businesses to scale their IT infrastructure up or down based on demand or growth. Scalability is particularly important for businesses that experience rapid growth or seasonal fluctuations in demand.

Flexibility, on the other hand, refers to the ability of a data center to adapt to changing business requirements and technologies. It allows businesses to quickly deploy new applications, services, or technologies without significant reconfiguration or downtime. Flexibility is essential in today’s fast-paced business environment, where agility and innovation are key to staying competitive.

To achieve scalability and flexibility, data center architecture should be designed with modular and flexible configurations. This allows for easy expansion or reconfiguration of the IT infrastructure as needed. Virtualization technologies also play a crucial role in enabling scalability and flexibility by abstracting the underlying hardware and allowing for better resource allocation.
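Planning for scalability usually starts with a simple projection of future demand. The sketch below estimates how many racks a facility would need after a period of compound growth. The growth rate, time horizon, and servers-per-rack figure are illustrative assumptions, not recommendations.

```python
import math


def racks_needed(current_servers, annual_growth, years, servers_per_rack):
    """Project the server count with compound growth and convert to racks."""
    projected = math.ceil(current_servers * (1 + annual_growth) ** years)
    return projected, math.ceil(projected / servers_per_rack)


# Hypothetical inputs: 200 servers today, 20% yearly growth, 3-year horizon.
projected_servers, racks = racks_needed(
    current_servers=200, annual_growth=0.20, years=3, servers_per_rack=40
)
print(f"{projected_servers} servers, {racks} racks")
```

Comparing the projected rack count with the floor space, power, and cooling available today shows how much modular capacity should be reserved up front.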

Best Practices for Data Center Architecture Design and Implementation

Designing and implementing a data center architecture requires careful planning and consideration of best practices. Following best practices ensures that the data center is reliable, efficient, and secure.

One of the best practices for data center architecture design is to have a clear understanding of the organization’s requirements and goals. This includes assessing current and future needs, considering factors such as performance, scalability, availability, and security.

Another best practice is to design for redundancy and high availability. This involves implementing redundant power supplies, backup generators, cooling systems, and network connections to minimize downtime and ensure continuous operation of IT services.
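The effect of redundancy can be estimated with basic probability. The sketch below assumes that components fail independently, which is an idealization: shared causes such as a common utility feed or a single cooling loop make real-world figures lower. The availability values are made-up examples.

```python
def parallel_availability(component_availability, count):
    """Availability of `count` redundant components where one is enough."""
    return 1 - (1 - component_availability) ** count


def series_availability(availabilities):
    """Availability of a chain in which every element must be working."""
    result = 1.0
    for value in availabilities:
        result *= value
    return result


single_feed = 0.99  # hypothetical availability of one power feed
dual_feed = parallel_availability(single_feed, 2)
# A chain of the redundant power feeds followed by one non-redundant switch.
chain = series_availability([dual_feed, 0.999])
print(f"single: {single_feed:.4f}, dual: {dual_feed:.4f}, chain: {chain:.4f}")
```

Under these assumptions, a second feed lifts availability from 99% to 99.99%, while a single non-redundant element in the chain pulls the overall figure back down. This is why redundancy is applied to power, cooling, and network paths together.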

Proper cable management is also an important best practice in data center architecture. It ensures that cables are organized, labeled, and routed properly to minimize clutter and prevent accidental disconnections. Good cable management improves airflow, reduces the risk of cable damage, and simplifies troubleshooting.

Regular maintenance and monitoring are essential best practices for data center architecture. This includes performing routine inspections, testing backup systems, monitoring power usage, temperature, and humidity levels, and updating firmware and software regularly.
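Monitoring comes down to comparing sensor readings against acceptable ranges and raising an alert when a value falls outside them. The sketch below shows that check in its simplest form. The metric names and limits are placeholders chosen for illustration; actual thresholds should come from equipment specifications and the facility's own operating policy.

```python
# Placeholder limits (low, high) per metric; not vendor or standards values.
LIMITS = {
    "temperature_c": (18.0, 27.0),
    "humidity_pct": (20.0, 80.0),
    "power_kw": (0.0, 8.0),
}


def find_alerts(reading, limits=LIMITS):
    """Return a message for every metric that is missing or out of range."""
    alerts = []
    for metric, (low, high) in limits.items():
        value = reading.get(metric)
        if value is None:
            alerts.append(f"{metric}: no reading")
        elif not low <= value <= high:
            alerts.append(f"{metric}: {value} outside {low}-{high}")
    return alerts


# A hypothetical reading from one rack sensor.
rack_reading = {"temperature_c": 29.5, "humidity_pct": 45.0, "power_kw": 6.2}
alerts = find_alerts(rack_reading)
print(alerts)
```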

In conclusion, data center architecture plays a crucial role in modern businesses by providing the necessary infrastructure to store, process, and manage vast amounts of data. It ensures the reliability, availability, scalability, and security of IT services. The key components of data center architecture include network architecture, storage architecture, server architecture, power and cooling systems, and security measures. Scalability and flexibility are also important aspects of data center architecture, allowing businesses to easily expand their IT infrastructure as their needs grow. By following best practices for data center architecture design and implementation, businesses can ensure a reliable, efficient, and secure IT infrastructure that supports their operations and enables growth.

HIPAA (Health Insurance Portability and Accountability Act) compliance is a critical aspect of data center operations, especially in the healthcare industry. Data centers play a crucial role in storing and managing sensitive patient information, making it essential for them to adhere to HIPAA regulations to ensure the security and privacy of this data. In this article, we will explore the basics of HIPAA compliance in data centers, the importance of data security in healthcare, HIPAA regulations and compliance requirements, administrative, physical, and technical safeguards for HIPAA compliance, risk assessment and management, training and awareness for data center personnel, audit and monitoring processes, and best practices for achieving HIPAA compliance in data centers.

Key Takeaways

- HIPAA compliance is essential for data centers that handle healthcare information.

- Data security is crucial in healthcare to protect patient privacy and prevent data breaches.

- HIPAA regulations and compliance requirements must be followed to avoid penalties and legal consequences.

- Administrative, physical, and technical safeguards are necessary to ensure HIPAA compliance in data centers.

- Regular risk assessments, training, and monitoring are key components of maintaining HIPAA compliance in data centers.

Understanding the Basics of HIPAA Compliance in Data Centers

HIPAA is a federal law enacted in 1996 that sets standards for the protection of sensitive patient health information. Its primary goal is to ensure the privacy and security of this information while allowing for its efficient exchange between healthcare providers, insurers, and other entities involved in healthcare operations. HIPAA compliance is particularly important in data centers that handle healthcare data because any breach or unauthorized access to this information can have severe consequences for patients and healthcare organizations.

HIPAA regulations consist of three main rules: the Security Rule, the Privacy Rule, and the Breach Notification Rule. The Security Rule establishes standards for protecting electronic protected health information (ePHI) by requiring covered entities to implement administrative, physical, and technical safeguards. The Privacy Rule governs the use and disclosure of individuals’ health information by covered entities and sets limits on how this information can be shared. The Breach Notification Rule requires covered entities to notify affected individuals, the Department of Health and Human Services (HHS), and sometimes the media in the event of a breach of unsecured ePHI.

The Importance of Data Security in Healthcare

Data security is crucial in healthcare due to the sensitive nature of patient health information. Healthcare data includes personal identifiers, medical history, diagnoses, treatments, and other sensitive information that, if exposed or accessed by unauthorized individuals, can lead to identity theft, fraud, and other harmful consequences for patients. Additionally, healthcare organizations have a legal and ethical obligation to protect patient privacy and maintain the confidentiality of their health information.