Data center solutions refer to the infrastructure and services that support the storage, management, and processing of data in a centralized location. In the healthcare sector, data center solutions play a crucial role in managing and securing sensitive patient information, as well as facilitating the efficient delivery of healthcare services. With the increasing digitization of medical records and the growing reliance on technology in healthcare, data centers have become essential for healthcare organizations to effectively store, access, and analyze large volumes of data.

Key Takeaways

- Data center solutions are crucial for the healthcare sector to manage and store large amounts of data.

- Healthcare data centers face challenges such as data security and privacy, regulatory compliance, downtime, and maintenance.

- Understanding the architecture of healthcare data centers is important for efficient management and maintenance.

- Scalability and flexibility are essential for healthcare data centers to adapt to changing needs and technologies.

- Security, compliance, disaster recovery, and cloud computing are important considerations for healthcare organizations when choosing a data center solution.

The Importance of Data Centers in Healthcare

Data centers play a vital role in the healthcare industry by providing a secure and reliable environment for storing and managing patient data. With the transition from paper-based records to electronic health records (EHRs), healthcare organizations generate and accumulate vast amounts of data on a daily basis. Data centers provide the necessary infrastructure to store and process this data, ensuring its availability and accessibility when needed.

One of the key benefits of data centers in healthcare is improved efficiency and productivity. With data storage and management centralized, healthcare professionals can access patient information from anywhere within the organization, which reduces manual record-keeping and administrative work. This streamlined process lets healthcare providers focus more on patient care, which can contribute to better outcomes and patient satisfaction.

Key Challenges Facing Healthcare Data Centers

While data centers offer numerous benefits to healthcare organizations, they also face several challenges unique to the healthcare sector. One of the primary challenges is ensuring data security and privacy. Healthcare data is highly sensitive and subject to strict regulations such as HIPAA (the Health Insurance Portability and Accountability Act) in the United States. Data centers must implement robust security measures to protect patient information from unauthorized access or breaches.

Another challenge is regulatory compliance. Healthcare organizations must adhere to various regulations and standards related to data storage, privacy, and security. Data centers must ensure that their infrastructure and processes comply with these regulations to avoid penalties and legal consequences.

Data center downtime is another significant challenge in the healthcare sector. Any interruption in data center operations can have severe consequences, including delayed patient care, loss of critical data, and financial losses. Healthcare data centers must have robust backup and disaster recovery plans in place to minimize downtime and ensure business continuity.
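To make the cost of downtime concrete, an availability target can be converted into the downtime it allows per year. The following is a minimal sketch; the function name and the sample targets are illustrative assumptions, not figures from any particular healthcare provider.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def annual_downtime_hours(availability: float) -> float:
    """Return the hours of downtime per year implied by an availability fraction."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    # Sample targets chosen only for illustration.
    for target in (0.99, 0.999, 0.9999):
        hours = annual_downtime_hours(target)
        print(f"{target:.2%} availability allows about {hours:.2f} hours of downtime per year")
```

Seen this way, each additional "nine" of availability cuts the allowed downtime by a factor of ten, which is one reason backup and recovery planning matters for systems that support patient care.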

Additionally, data center maintenance and upgrades can be challenging in healthcare organizations. Healthcare providers cannot afford to have their systems offline for extended periods, as it can disrupt patient care. Data centers must carefully plan and execute maintenance activities to minimize disruptions and ensure seamless operations.

Understanding Healthcare Data Center Architecture

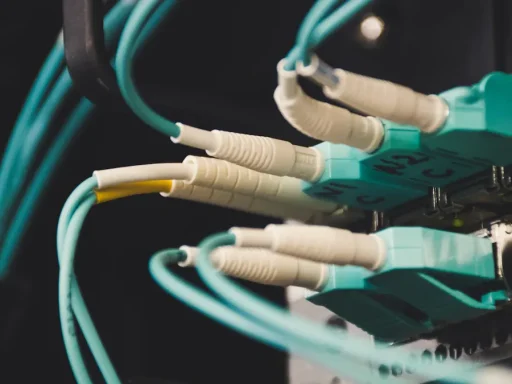

Healthcare data center architecture refers to the design and structure of the infrastructure that supports the storage, processing, and management of healthcare data. It typically consists of several components, including servers, storage devices, networking equipment, and security systems.

The components of healthcare data center architecture are designed to work together to ensure the availability, reliability, and security of healthcare data. Servers are responsible for processing and storing data, while storage devices provide the necessary capacity to store large volumes of data. Networking equipment enables communication between different components within the data center and facilitates connectivity with external systems.

There are different types of healthcare data center architecture, depending on the specific needs and requirements of the organization. Traditional data center architecture involves a centralized approach, where all components are located in a single physical location. This type of architecture offers high control and security but may be limited in terms of scalability and flexibility.

On the other hand, distributed or hybrid data center architecture involves multiple locations or cloud-based solutions. This approach allows for greater scalability and flexibility but may require additional security measures to protect data across different locations or cloud environments.

Scalability and Flexibility in Healthcare Data Centers

Scalability and flexibility are crucial aspects of healthcare data centers as they allow organizations to adapt to changing needs and accommodate future growth. In the healthcare sector, where data volumes are constantly increasing, it is essential to have a data center infrastructure that can scale up or down as needed.

Scalability refers to the ability of a data center to handle increasing workloads and data volumes without compromising performance or availability. A scalable data center can easily accommodate additional servers, storage devices, and networking equipment to meet growing demands. This ensures that healthcare organizations can continue to store and process data efficiently without experiencing performance issues or downtime.

Flexibility, on the other hand, refers to the ability of a data center to adapt to changing requirements and technologies. Healthcare organizations often need to integrate new applications, devices, or systems into their data center environment. A flexible data center architecture allows for easy integration and migration of new technologies without disrupting existing operations.

To achieve scalability and flexibility in healthcare data centers, organizations can adopt several strategies. Virtualization is one such strategy that allows for the consolidation of multiple servers onto a single physical server, reducing hardware costs and improving resource utilization. Cloud computing is another strategy that enables organizations to scale their infrastructure on-demand and access resources as needed.
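Planning for scalability usually starts with a rough estimate of when current capacity will run out. The sketch below assumes compound monthly growth, which is a simplification; the function name and the sample figures are hypothetical.

```python
def months_until_full(current_tb: float, capacity_tb: float, monthly_growth: float) -> int:
    """Count the months until stored data reaches capacity under compound growth."""
    if current_tb <= 0 or capacity_tb <= 0:
        raise ValueError("sizes must be positive")
    if monthly_growth <= 0:
        raise ValueError("monthly_growth must be positive")

    months = 0
    size = current_tb
    # Grow the data set one month at a time until it no longer fits.
    while size < capacity_tb:
        size *= 1.0 + monthly_growth
        months += 1
    return months


if __name__ == "__main__":
    # Hypothetical example: 100 TB stored, 200 TB available, 5% growth per month.
    print(months_until_full(100, 200, 0.05))
```

An estimate like this only indicates how much lead time there is to add storage, virtualize further, or move workloads to the cloud; real growth is rarely this regular.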

Security and Compliance in Healthcare Data Centers

Security and compliance are critical considerations in healthcare data centers due to the sensitive nature of patient information and the regulatory requirements imposed on healthcare organizations. Data breaches in the healthcare sector can have severe consequences, including financial losses, reputational damage, and legal liabilities.

To ensure security in healthcare data centers, organizations must implement robust security measures at various levels. This includes physical security measures such as access controls, surveillance systems, and secure storage facilities. Network security measures such as firewalls, intrusion detection systems, and encryption protocols are also essential to protect data from unauthorized access or breaches.

Compliance with regulations such as HIPAA is equally important in healthcare data centers. Organizations must ensure that their data center infrastructure and processes comply with these regulations to avoid penalties and legal consequences. This includes implementing policies and procedures for data handling, access controls, and data breach notification.
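Access control policies are easier to audit when every access decision is recorded. The following sketch shows one possible shape for a role-based check with an audit trail. The roles, permission names, and class are hypothetical, and the code is not a statement of what any regulation requires.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; a real policy comes from the organization.
ROLE_PERMISSIONS = {
    "physician": {"read_record", "write_record"},
    "billing": {"read_billing"},
    "auditor": {"read_audit_log"},
}


@dataclass
class AccessController:
    audit_log: list = field(default_factory=list)

    def is_allowed(self, user: str, role: str, action: str) -> bool:
        """Check the action against the role and record the decision either way."""
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })
        return allowed
```

Logging denied requests as well as granted ones gives security audits a record of attempted access, not just successful access.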

Strategies for achieving security and compliance in healthcare data centers include regular security audits and assessments, employee training on security best practices, and the use of encryption and other security technologies. It is also important to partner with data center providers that have experience and expertise in healthcare data security and compliance.

Disaster Recovery and Business Continuity in Healthcare Data Centers

Disaster recovery and business continuity planning keep critical patient data available and accessible when systems fail. As noted earlier, even a brief interruption can delay patient care, so recovery plans need to be defined and tested before an incident occurs.

Disaster recovery refers to the process of recovering data and systems after a catastrophic event such as a natural disaster, power outage, or cyber-attack. Healthcare organizations must have robust backup and recovery plans in place to minimize downtime and ensure the rapid restoration of critical systems and data.
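One common way to make a backup plan measurable is a recovery point objective (RPO): the maximum age of data the organization is willing to lose. The sketch below checks the latest backup against an RPO. The function name and the four-hour value are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def backup_is_fresh(last_backup: datetime, rpo: timedelta, now: datetime) -> bool:
    """Return True if the most recent backup is no older than the RPO."""
    return now - last_backup <= rpo


if __name__ == "__main__":
    rpo = timedelta(hours=4)  # hypothetical target
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    last_backup = datetime(2024, 1, 1, 9, 30, tzinfo=timezone.utc)
    print(backup_is_fresh(last_backup, rpo, now))
```

Passing `now` explicitly keeps the check easy to test; a scheduled job would supply the current time and alert when the function returns False.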

Business continuity, on the other hand, refers to the ability of an organization to continue its operations during and after a disruptive event. This includes having redundant systems, backup power sources, and alternative communication channels to ensure uninterrupted access to patient data.
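The value of redundant systems can be estimated with simple arithmetic. If each copy of a component is available a fraction a of the time and failures are independent, at least one of n copies is available with probability 1 - (1 - a)^n. The sketch below applies that formula. Independence is an assumption that real systems often violate, for example when redundant servers share a power feed.

```python
def parallel_availability(component_availability: float, copies: int) -> float:
    """Availability of a group of redundant copies, assuming independent failures."""
    if not 0.0 <= component_availability <= 1.0:
        raise ValueError("component_availability must be between 0 and 1")
    if copies < 1:
        raise ValueError("copies must be at least 1")
    # The group is down only when every copy is down at the same time.
    return 1.0 - (1.0 - component_availability) ** copies
```

Under these assumptions, two copies of a 99% available component give roughly 99.99% availability, which shows why redundancy is usually paired with separate power sources and network paths.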

Strategies for achieving disaster recovery and business continuity in healthcare data centers include regular backups of critical data, offsite storage of backups, redundant hardware and networking equipment, and the implementation of failover systems. It is also important to regularly test and update disaster recovery plans to ensure their effectiveness.

The Role of Cloud Computing in Healthcare Data Centers

Cloud computing has become an important part of healthcare data center strategy, offering scalability, flexibility, cost savings, and improved collaboration. Cloud computing refers to the on-demand delivery of computing resources over the internet, allowing organizations to access virtualized resources such as servers, storage, and applications.

In healthcare data centers, cloud computing offers several advantages. One of the key benefits is scalability. Cloud-based solutions can easily scale up or down based on the organization’s needs, allowing healthcare organizations to handle increasing data volumes and workloads without investing in additional hardware or infrastructure.

Flexibility is another advantage of cloud computing in healthcare data centers. Cloud-based solutions enable organizations to easily integrate new applications, devices, or systems into their data center environment without disrupting existing operations. This allows for faster deployment of new technologies and improved collaboration between different healthcare providers.

Cost savings are also a significant benefit of cloud computing in healthcare data centers. By leveraging cloud-based solutions, organizations can reduce their hardware and infrastructure costs, as well as the costs associated with maintenance and upgrades. Cloud computing also offers a pay-as-you-go model, allowing organizations to only pay for the resources they use.
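Whether pay-as-you-go pricing saves money depends on the time horizon. A rough comparison can be sketched by finding the month at which the cumulative cost of an on-premises deployment (upfront investment plus running costs) drops to the cumulative cloud bill. All figures and names below are hypothetical, and the model ignores factors such as hardware refresh cycles and staffing.

```python
import math
from typing import Optional


def break_even_months(upfront_cost: float, onprem_monthly: float, cloud_monthly: float) -> Optional[int]:
    """First month at which cumulative on-premises cost is no higher than cloud cost.

    Returns None when the cloud option is never more expensive per month.
    """
    if cloud_monthly <= onprem_monthly:
        return None
    # upfront + onprem * n <= cloud * n  =>  n >= upfront / (cloud - onprem)
    return math.ceil(upfront_cost / (cloud_monthly - onprem_monthly))


if __name__ == "__main__":
    # Hypothetical figures: 500,000 upfront, 10,000 vs 30,000 per month.
    print(break_even_months(500_000, 10_000, 30_000))
```

If the planning horizon is shorter than the break-even point, or demand is too uncertain to size hardware in advance, the pay-as-you-go model tends to be the cheaper choice in this simplified view.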

However, cloud computing in healthcare data centers also presents challenges. One of the main concerns is data security and privacy. Healthcare organizations must ensure that their data is adequately protected when stored or processed in the cloud. This includes implementing encryption, access controls, and other security measures to prevent unauthorized access or breaches.

Emerging Trends in Healthcare Data Center Solutions

The healthcare industry is constantly evolving, driven by technological advancements and changing patient needs. Several emerging trends are shaping the future of healthcare data center solutions, including artificial intelligence (AI) and machine learning, the Internet of Things (IoT), and edge computing.

Artificial intelligence and machine learning have the potential to revolutionize healthcare data centers by enabling advanced analytics, predictive modeling, and personalized medicine. AI algorithms can analyze large volumes of patient data to identify patterns, predict outcomes, and provide insights for better decision-making. Machine learning algorithms can continuously learn from new data to improve accuracy and efficiency over time.

The Internet of Things (IoT) is another trend that is transforming healthcare data centers. IoT devices such as wearables, remote monitoring devices, and smart medical equipment generate vast amounts of data that need to be collected, stored, and analyzed. Healthcare data centers must be equipped to handle the influx of IoT data and ensure its security and accessibility.

Edge computing is also gaining traction in healthcare data centers. Edge computing refers to the processing and analysis of data at or near the source, rather than sending it to a centralized data center. This approach reduces latency and enables real-time decision-making, which is critical in healthcare applications such as telemedicine, remote patient monitoring, and emergency response.
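As a rough illustration of edge processing, the sketch below summarizes a batch of heart-rate readings on the device and flags out-of-range values locally, so only the summary and alerts need to travel to the central data center. The function name and thresholds are hypothetical and are not clinical guidance.

```python
from statistics import mean


def process_at_edge(heart_rates: list[int], low: int = 40, high: int = 130) -> dict:
    """Summarize readings locally and collect out-of-range values for alerting."""
    if not heart_rates:
        raise ValueError("heart_rates must not be empty")
    alerts = [rate for rate in heart_rates if rate < low or rate > high]
    return {
        "count": len(heart_rates),
        "mean": mean(heart_rates),
        "min": min(heart_rates),
        "max": max(heart_rates),
        "alerts": alerts,  # raised immediately at the edge, without a round trip
    }
```

Sending one small summary instead of every raw reading reduces bandwidth as well as latency, though raw data may still need to be retained for the patient record.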

Choosing the Right Data Center Solution for Your Healthcare Organization

Choosing the right data center solution for a healthcare organization requires careful consideration of several factors. These factors include the organization’s specific needs and requirements, budget constraints, scalability and flexibility requirements, security and compliance considerations, and future growth plans.

There are different types of data center solutions available for healthcare organizations, including on-premises data centers, colocation facilities, and cloud-based solutions. On-premises data centers offer complete control and customization but require significant upfront investments in infrastructure and maintenance. Colocation facilities provide a middle ground: the organization typically owns its servers but rents space, power, cooling, and connectivity in a shared facility, which reduces costs and maintenance efforts. Cloud-based solutions offer scalability, flexibility, and cost savings but require careful consideration of security and compliance requirements.

When selecting a data center solution for a healthcare organization, it is important to consider best practices such as conducting thorough due diligence on potential providers, assessing their track record and experience in the healthcare sector, evaluating their security measures and compliance certifications, and considering their disaster recovery and business continuity capabilities.

In conclusion, data center solutions play a crucial role in the healthcare sector by providing the infrastructure and services necessary for storing, managing, and processing large volumes of patient data. Data centers offer numerous benefits to healthcare organizations, including improved efficiency, productivity, security, compliance, scalability, flexibility, disaster recovery, and business continuity.

However, healthcare data centers also face unique challenges such as data security and privacy concerns, regulatory compliance requirements, downtime risks, and maintenance and upgrade complexities. To overcome these challenges, healthcare organizations must adopt strategies and best practices that ensure the security, availability, and accessibility of critical patient data.

Furthermore, emerging trends such as artificial intelligence, machine learning, IoT, and edge computing are shaping the future of healthcare data center solutions. These trends offer opportunities for improved analytics, personalized medicine, remote patient monitoring, and real-time decision-making.

In light of the importance of data center solutions in healthcare, it is crucial for healthcare organizations to invest in robust and secure data center infrastructure. By doing so, they can ensure the efficient delivery of healthcare services, improve patient outcomes, and stay ahead in an increasingly digital and data-driven industry.

FAQs

What are data center solutions for the healthcare sector?

Data center solutions for the healthcare sector refer to the technology infrastructure and services that support the storage, processing, and management of healthcare data. These solutions include hardware, software, and networking components that are designed to meet the unique needs of healthcare organizations.

Why are data center solutions important for the healthcare sector?

Data center solutions are important for the healthcare sector because they enable healthcare organizations to store and manage large amounts of sensitive patient data securely and efficiently. These solutions also support the use of advanced technologies such as artificial intelligence and machine learning, which can help healthcare providers improve patient outcomes and reduce costs.

What types of data center solutions are available for the healthcare sector?

There are several types of data center solutions available for the healthcare sector, including cloud-based solutions, on-premises solutions, and hybrid solutions that combine both cloud and on-premises components. These solutions can be customized to meet the specific needs of healthcare organizations, such as compliance with regulatory requirements and the ability to scale up or down as needed.

What are the benefits of using data center solutions in the healthcare sector?

The benefits of using data center solutions in the healthcare sector include improved data security, increased efficiency and productivity, better patient outcomes, and reduced costs. These solutions also enable healthcare organizations to leverage advanced technologies such as artificial intelligence and machine learning to improve diagnosis and treatment.

What are the challenges of implementing data center solutions in the healthcare sector?

The challenges of implementing data center solutions in the healthcare sector include the need to comply with strict regulatory requirements, the complexity of integrating multiple systems and applications, and the need to ensure data privacy and security. Healthcare organizations must also ensure that their data center solutions are scalable and flexible enough to meet changing needs and requirements.

Data center best practices refer to a set of guidelines and procedures that are designed to optimize the performance, efficiency, and security of data centers. These best practices are developed based on industry standards, regulations, and the experiences of industry leaders in data center management. Implementing best practices in data center management is crucial for organizations as it ensures the smooth operation of their IT infrastructure, minimizes downtime, reduces costs, and enhances overall productivity.

In this blog post, we will explore the importance of implementing data center best practices and how industry leaders play a significant role in shaping these practices. We will also delve into case studies of successful data center management by industry leaders and discuss the key takeaways from their experiences. Furthermore, we will examine the key elements of data center best practices and provide insights on how to implement them effectively. Lastly, we will discuss specific best practices for data center design and layout, cooling and power management, security and access control, monitoring and maintenance, as well as disaster recovery and business continuity.

Key Takeaways

- Data center best practices are essential for improved performance and efficiency.

- Industry leaders play a crucial role in data center management and can provide valuable lessons.

- Key elements of data center best practices include design, cooling, power management, security, access control, monitoring, maintenance, and disaster recovery.

- Best practices for data center design and layout involve careful planning and consideration of space, power, and cooling requirements.

- Best practices for data center cooling and power management include using efficient equipment and implementing proper airflow management.

Importance of Industry Leaders in Data Center Management

Industry leaders play a crucial role in shaping data center best practices. These leaders are organizations or individuals who have demonstrated excellence in managing data centers and have achieved exceptional results in terms of performance, efficiency, and security. They serve as benchmarks for other organizations to follow and learn from.

By following industry leaders in data center management, organizations can benefit from their expertise and experience. Industry leaders have often invested significant time and resources into researching and implementing best practices in their own data centers. They have tested various strategies and technologies to optimize performance, improve efficiency, and enhance security. By adopting these proven practices, organizations can save time and effort in trial-and-error processes and achieve better results more quickly.

Some examples of industry leaders in data center management include Google, Amazon Web Services (AWS), Microsoft (with Azure), and Meta (formerly Facebook). These companies have built and operated some of the largest and most efficient data centers in the world. They have developed innovative solutions to address the challenges of managing massive amounts of data and have set new standards for performance, efficiency, and security in the industry.

Lessons Learned from Industry Leaders in Data Center Management

Case studies of successful data center management by industry leaders provide valuable insights and lessons for organizations looking to improve their own data center operations. By studying these case studies, organizations can gain a deeper understanding of the challenges faced by industry leaders and the strategies they employed to overcome them.

One such case study is Google’s data center management practices. Google has implemented several innovative solutions to optimize the performance and efficiency of its data centers. For example, it uses machine learning algorithms to predict and optimize cooling requirements, resulting in significant energy savings. It also employs advanced monitoring systems to detect and resolve issues proactively, minimizing downtime.

Key takeaways from Google’s experience include the importance of leveraging technology to automate processes, the value of predictive analytics in optimizing resource allocation, and the need for proactive monitoring and maintenance. Organizations can apply these lessons by investing in advanced technologies, implementing predictive analytics tools, and establishing robust monitoring and maintenance procedures.

Another case study is Amazon Web Services’ (AWS) data center management practices. AWS has developed a highly scalable and flexible infrastructure that allows organizations to easily provision and manage their IT resources. It has also implemented strict security measures to protect customer data and ensure compliance with industry regulations.

Key takeaways from AWS’s experience include the importance of scalability and flexibility in data center design, the need for robust security measures, and the value of compliance with industry regulations. Organizations can apply these lessons by designing their data centers with scalability and flexibility in mind, implementing comprehensive security measures, and ensuring compliance with relevant regulations.

Key Elements of Data Center Best Practices

Data center best practices encompass several essential components that are crucial for effective data center management. These elements include data center design and layout, cooling and power management, security and access control, monitoring and maintenance, as well as disaster recovery and business continuity planning.

Each of these elements plays a vital role in ensuring the smooth operation of data centers and optimizing their performance, efficiency, and security. Let’s explore each element in more detail.

Best Practices for Data Center Design and Layout

Proper data center design and layout are essential for optimizing performance, efficiency, and scalability. A well-designed data center should provide adequate space for equipment, efficient airflow management, and easy access for maintenance and upgrades.

One best practice for data center design is to use a modular approach. This involves dividing the data center into separate modules or zones, each with its own power and cooling infrastructure. This allows for easier scalability and flexibility as new equipment can be added or removed without disrupting the entire data center.

Another best practice is to implement a hot aisle/cold aisle layout with containment. Server racks are arranged in alternating rows so that cold air intakes face one aisle (the cold aisle) and hot air exhausts face the other (the hot aisle). Containment adds physical barriers, such as doors or panels, around one of the aisles. Together these measures keep hot and cold air from mixing, improving cooling efficiency.

To optimize data center design and layout for improved performance and efficiency, organizations should also consider factors such as cable management, equipment placement, and accessibility. Proper cable management reduces the risk of cable damage or accidental disconnections. Strategic equipment placement ensures efficient airflow and minimizes hotspots. Easy accessibility allows for quick maintenance and upgrades.

Best Practices for Data Center Cooling and Power Management

Efficient cooling and power management are crucial for maintaining optimal operating conditions in data centers. Excessive heat can cause equipment failure, while inadequate power supply can lead to downtime. Implementing best practices in cooling and power management can help organizations optimize energy usage, reduce costs, and improve overall performance.

One best practice for cooling management is to use a combination of air and liquid cooling. Air cooling involves using fans and air conditioning units to remove heat from the data center, while liquid cooling involves circulating coolants through server racks to dissipate heat more efficiently. By combining these two methods, organizations can achieve better cooling efficiency and reduce energy consumption.

Another best practice is to implement intelligent temperature monitoring and control systems. These systems use sensors to monitor temperature levels in real-time and adjust cooling accordingly. By maintaining optimal temperature levels, organizations can prevent equipment overheating and reduce energy waste.
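A simple form of the control logic described above is a hysteresis band: extra cooling switches on above one temperature and off below a lower one, which avoids rapid toggling near a single setpoint. The sketch below is illustrative only; the function name and setpoints are assumptions, and production systems typically use more sophisticated control.

```python
def next_cooling_state(
    temp_c: float,
    cooling_on: bool,
    on_above: float = 27.0,   # hypothetical upper setpoint
    off_below: float = 24.0,  # hypothetical lower setpoint
) -> bool:
    """Decide whether extra cooling should run, using a hysteresis band."""
    if temp_c > on_above:
        return True
    if temp_c < off_below:
        return False
    # Inside the band: keep the current state to avoid rapid switching.
    return cooling_on
```

Calling this function on each new sensor reading and feeding the result back in as `cooling_on` gives a basic closed loop between monitoring and cooling.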

In terms of power management, one best practice is to use energy-efficient hardware. This includes servers, storage devices, and networking equipment that are designed to consume less power without compromising performance. By using energy-efficient hardware, organizations can reduce their electricity bills and minimize their carbon footprint.

Another best practice is to implement power management software that allows for centralized control and monitoring of power usage. This software enables organizations to identify power-hungry devices or applications and implement measures to optimize their power consumption.
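The core of such reporting can be sketched in a few lines: given per-device power readings, rank the largest consumers and show each one's share of the total. The device names and function are hypothetical; real readings would come from metered power distribution units or similar instrumentation.

```python
def top_power_consumers(readings_watts: dict[str, float], n: int = 3) -> list[tuple[str, float]]:
    """Return the n devices with the highest draw, each with its share of total power."""
    total = sum(readings_watts.values())
    if total <= 0:
        return []
    ranked = sorted(readings_watts.items(), key=lambda item: item[1], reverse=True)
    return [(name, watts / total) for name, watts in ranked[:n]]


if __name__ == "__main__":
    sample = {"rack-a": 500.0, "rack-b": 300.0, "rack-c": 200.0}  # hypothetical readings
    for name, share in top_power_consumers(sample, n=2):
        print(f"{name}: {share:.0%} of total power")
```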

Best Practices for Data Center Security and Access Control

Data center security and access control are critical for protecting sensitive information and preventing unauthorized access. Implementing best practices in these areas helps organizations safeguard their data, comply with industry regulations, and maintain the trust of their customers.

One best practice for data center security is to implement multi-factor authentication (MFA) for access control. MFA requires users to present two or more different forms of identification, such as a password, a fingerprint scan, and a smart card, before access to the data center is granted. This adds an extra layer of security and reduces the risk of unauthorized access.
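What distinguishes MFA from simply asking for two credentials is that the factors come from different categories: something the user knows, something the user has, or something the user is. The sketch below checks that rule. The factor names and the mapping are hypothetical.

```python
# Hypothetical mapping from factor to category.
FACTOR_CATEGORIES = {
    "password": "knowledge",
    "pin": "knowledge",
    "smart_card": "possession",
    "fingerprint": "inherence",
}


def satisfies_mfa(verified_factors: set[str], required_categories: int = 2) -> bool:
    """Return True if the verified factors span enough distinct categories."""
    categories = {
        FACTOR_CATEGORIES[factor]
        for factor in verified_factors
        if factor in FACTOR_CATEGORIES  # unknown factors are ignored
    }
    return len(categories) >= required_categories
```

Under this rule a password plus a PIN does not count as multi-factor, because both are knowledge factors, while a password plus a fingerprint does.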

Another best practice is to implement physical security measures such as surveillance cameras, biometric locks, and access control systems. These measures help prevent unauthorized individuals from entering the data center premises and provide a record of any suspicious activities.

In terms of network security, organizations should implement firewalls, intrusion detection systems, and encryption protocols to protect data in transit and at rest. Regular security audits and vulnerability assessments should also be conducted to identify and address any potential security risks.

Best Practices for Data Center Monitoring and Maintenance

Regular monitoring and maintenance are crucial for identifying and resolving issues before they escalate into major problems. Implementing best practices in data center monitoring and maintenance helps organizations ensure the smooth operation of their IT infrastructure, minimize downtime, and optimize performance.

One best practice for data center monitoring is to use advanced monitoring tools that provide real-time visibility into the performance and health of the data center. These tools can monitor various parameters such as temperature, humidity, power usage, network traffic, and server performance. By analyzing this data, organizations can identify potential issues and take proactive measures to address them.
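At its simplest, this kind of monitoring compares each reading with an acceptable range and reports anything outside it, including readings that are missing. The sketch below shows the idea. The metric names and ranges are assumptions for illustration, not recommended operating limits.

```python
# Hypothetical acceptable ranges: metric -> (low, high).
THRESHOLDS = {
    "temperature_c": (18.0, 27.0),
    "humidity_pct": (20.0, 80.0),
    "power_kw": (0.0, 120.0),
}


def find_violations(sample: dict[str, float]) -> list[str]:
    """Return a message for every metric that is missing or out of range."""
    violations = []
    for metric, (low, high) in THRESHOLDS.items():
        value = sample.get(metric)
        if value is None:
            # A silent sensor is treated as a problem, not as a healthy reading.
            violations.append(f"{metric}: no reading")
        elif not low <= value <= high:
            violations.append(f"{metric}: {value} outside {low}-{high}")
    return violations
```

Treating a missing reading as a violation matters in practice, since a failed sensor can otherwise hide the very condition it was installed to detect.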

Another best practice is to establish a comprehensive maintenance schedule that includes regular inspections, equipment cleaning, firmware updates, and hardware replacements. This helps prevent equipment failure, prolongs the lifespan of the equipment, and ensures optimal performance.

Organizations should also implement a robust incident management process that includes clear escalation procedures, documentation of incidents, root cause analysis, and corrective actions. This helps ensure that incidents are resolved promptly and that preventive measures are implemented to avoid similar incidents in the future.

Best Practices for Data Center Disaster Recovery and Business Continuity

Disaster recovery and business continuity planning are essential for minimizing downtime and ensuring the uninterrupted operation of data centers. Implementing best practices in these areas helps organizations recover quickly from disasters or disruptions and maintain the continuity of their operations.

One best practice for disaster recovery is to implement a comprehensive backup strategy that includes regular backups of critical data and applications. These backups should be stored offsite or in the cloud to protect against physical damage or loss of data.
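A backup is only useful if it can be restored intact. One common safeguard is to record a checksum when the backup is taken and compare it when the data is read back. The sketch below uses SHA-256 from Python's standard library; the function names are hypothetical.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Return the SHA-256 checksum of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_backup(restored: bytes, expected_checksum: str) -> bool:
    """Return True if restored data matches the checksum recorded at backup time."""
    return sha256_of(restored) == expected_checksum


if __name__ == "__main__":
    original = b"example backup contents"
    checksum = sha256_of(original)  # stored alongside the backup
    print(verify_backup(original, checksum))
```

For large backups the same idea applies to files read in chunks; the in-memory version here is kept short for clarity.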

Another best practice is to conduct regular disaster recovery drills to test the effectiveness of the recovery plan. These drills simulate various disaster scenarios and allow organizations to identify any gaps or weaknesses in their recovery processes.

In terms of business continuity, organizations should develop a detailed business continuity plan that outlines the steps to be taken in the event of a disruption. This plan should include procedures for relocating operations, communicating with stakeholders, and restoring critical services.

Implementing Data Center Best Practices for Improved Performance and Efficiency

In conclusion, implementing data center best practices is crucial for organizations looking to optimize the performance, efficiency, and security of their data centers. By following industry leaders in data center management and learning from their experiences, organizations can gain valuable insights and apply proven strategies to improve their own data center operations.

Key elements of data center best practices include data center design and layout, cooling and power management, security and access control, monitoring and maintenance, as well as disaster recovery and business continuity planning. By implementing best practices in these areas, organizations can enhance the performance, efficiency, and security of their data centers.

It is important for organizations to prioritize the implementation of data center best practices to ensure the smooth operation of their IT infrastructure, minimize downtime, reduce costs, and enhance overall productivity. By doing so, they can stay ahead of the competition and meet the ever-increasing demands of the digital age.

FAQs

What are data center best practices?

Data center best practices are a set of guidelines and procedures that ensure the efficient and effective operation of a data center. These practices cover various aspects of data center management, including design, construction, maintenance, security, and energy efficiency.

What are the benefits of following data center best practices?

Following data center best practices can help organizations achieve several benefits, such as improved reliability and uptime, enhanced security, better energy efficiency, and lower operating costs. These benefits can ultimately lead to improved business performance and customer satisfaction.

What are some common data center best practices?

Some common data center best practices include regular maintenance and testing, proper cooling and ventilation, effective power management, robust security measures, efficient use of space, and adherence to industry standards and regulations. Other best practices may vary depending on the specific needs and requirements of the organization.

What are some lessons learned from industry leaders in data center management?

Industry leaders in data center management have learned several valuable lessons over the years, such as the importance of proactive maintenance, the need for effective disaster recovery plans, the benefits of modular and scalable designs, the value of automation and monitoring tools, and the significance of energy efficiency and sustainability.

How can organizations implement data center best practices?

Organizations can implement data center best practices by conducting a thorough assessment of their current data center operations, identifying areas for improvement, and developing a comprehensive plan for implementing best practices. This plan should include specific goals, timelines, and metrics for measuring success. It may also involve investing in new technologies, hiring specialized staff, and partnering with experienced data center service providers.

Data centers are centralized locations where organizations store, manage, and distribute large amounts of data. In the digital age, data centers play a crucial role in supporting the growing demand for data storage and processing. They are the backbone of modern businesses, enabling them to store and access vast amounts of information, run complex applications, and deliver services to customers.

The current state of data centers is characterized by their increasing size and complexity. As more businesses rely on data-driven operations, the demand for data center capacity continues to grow. This has led to the construction of massive data centers that consume significant amounts of energy and require advanced cooling systems to prevent overheating.

Key Takeaways

- Edge computing is becoming more prevalent and is changing the way data centers operate.

- Artificial intelligence is being used to improve data center management and efficiency.

- Quantum computing has the potential to revolutionize data center operations.

- Cybersecurity is becoming increasingly important in data centers due to the rise of cyber threats.

- Advancements in cooling technologies and the integration of renewable energy sources are improving data center sustainability.

The Rise of Edge Computing and its Impact on Data Centers

Edge computing is a distributed computing paradigm that brings computation and data storage closer to the location where it is needed. Unlike traditional cloud computing, which relies on centralized data centers, edge computing enables processing and analysis to be done at or near the source of the data.

One of the main advantages of edge computing is reduced latency. By processing data closer to where it is generated, edge computing can significantly reduce the time it takes for data to travel back and forth between devices and centralized data centers. This is particularly important for applications that require real-time processing, such as autonomous vehicles or industrial automation.
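
The latency argument can be made concrete with simple arithmetic. The sketch below estimates only the round-trip propagation delay over optical fiber, assuming a signal speed of roughly 200,000 km/s (about two thirds of the speed of light in a vacuum). Real latency is higher because of routing, queuing, and processing time, and the distances used are hypothetical.

```python
# Assumed signal speed in optical fiber: ~200,000 km/s = 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km):
    """Propagation delay for a request and its reply, in milliseconds.

    Ignores routing, queuing, and processing time, so it is a lower bound.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances: a regional data center vs. a nearby edge site.
central = round_trip_ms(1500)
edge = round_trip_ms(20)
print(f"central: {central:.1f} ms, edge: {edge:.1f} ms")
```

Even before any processing happens, the distant site costs several milliseconds per exchange that the edge site does not, which matters for control loops in vehicles or industrial equipment.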

The rise of edge computing has a significant impact on data centers. With more processing being done at the edge, there is less reliance on centralized data centers for computation and storage. This means that data centers can focus on handling larger-scale tasks and storing long-term data, while edge devices handle real-time processing.

The Role of Artificial Intelligence in Data Center Management

Artificial intelligence (AI) refers to the simulation of human intelligence in machines that are programmed to think and learn like humans. In the context of data center management, AI can be used to automate various tasks, optimize resource allocation, and improve overall efficiency.

One of the main advantages of using AI in data center management is the ability to predict and prevent system failures. By analyzing large amounts of data in real-time, AI algorithms can identify patterns and anomalies that may indicate potential issues. This allows data center operators to take proactive measures to prevent downtime and minimize the impact of failures.
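
A very small stand-in for this kind of anomaly detection is a rolling z-score over sensor readings. This is a plain statistical check rather than a trained AI model, and the window size, threshold, and temperature values are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate sharply from recent history.

    Each reading is compared with the mean of the previous `window`
    readings; it is flagged when it lies more than `threshold` standard
    deviations away from that mean.
    """
    anomalies = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is unusual.
            if readings[i] != mu:
                anomalies.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical inlet temperatures in degrees Celsius, with one spike.
temps = [22.0, 22.1, 21.9, 22.0, 22.2, 22.1, 29.5, 22.0]
print(find_anomalies(temps))
```

Production systems typically replace the z-score with learned models and combine many signals, but the workflow is the same: establish what normal looks like, then alert on departures from it before they become outages.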

AI can also be used to optimize resource allocation in data centers. By analyzing historical data and real-time demand, AI algorithms can dynamically allocate computing resources to different applications and workloads. This ensures that resources are used efficiently and that performance is optimized.

The Emergence of Quantum Computing and its Potential for Data Centers

Quantum computing leverages the principles of quantum mechanics to solve certain classes of problems, such as factoring large integers, far faster than the best known classical algorithms. It is not a general-purpose speedup for every workload. Unlike classical computers, which use bits to represent information as either a 0 or a 1, quantum computers use quantum bits, or qubits, which can exist in a superposition of 0 and 1.

The potential advantages of quantum computing for data centers are significant. Quantum computers have the potential to solve complex optimization problems, perform advanced simulations, and break some widely used public-key encryption algorithms, such as RSA, that are currently considered secure. This could have major implications for data center operations, such as optimizing resource allocation, forcing a move to quantum-resistant security protocols, and accelerating specific kinds of data processing.

However, there are also challenges and limitations associated with quantum computing in data centers. Quantum computers are still in the early stages of development and are not yet commercially available at scale. They also require extremely cold temperatures and precise control environments, which may pose challenges for integration into existing data center infrastructure.

The Increasing Importance of Cybersecurity in Data Centers

Cybersecurity refers to the practice of protecting computer systems, networks, and data from unauthorized access, use, disclosure, disruption, modification, or destruction. In the context of data centers, cybersecurity is of paramount importance due to the sensitive nature of the data stored and processed.

Data centers are prime targets for cyberattacks due to the valuable information they hold. Common cybersecurity threats to data centers include malware, ransomware, distributed denial-of-service (DDoS) attacks, and insider threats. These threats can result in data breaches, service disruptions, financial losses, and damage to an organization’s reputation.

To mitigate these risks, data centers must implement robust cybersecurity measures. This includes implementing firewalls, intrusion detection systems, encryption protocols, access controls, and regular security audits. It is also important for data center operators to stay up-to-date with the latest cybersecurity threats and best practices to ensure the highest level of protection.

The Advancements in Cooling Technologies for Data Centers

Cooling is a critical aspect of data center operations as the high-density computing equipment generates a significant amount of heat. Without proper cooling, data centers can experience overheating, which can lead to equipment failure and downtime.

Traditional cooling methods for data centers include air conditioning systems and raised floors. However, these methods are not always efficient and can consume a substantial amount of energy. As data centers continue to grow in size and complexity, there is a need for more advanced cooling technologies that are both energy-efficient and effective at dissipating heat.

Advancements in cooling technologies for data centers include liquid cooling, of which direct-to-chip cooling is one form, and free cooling using outside air. Liquid cooling systems circulate coolant close to the heat-generating components, which transfers heat more efficiently than air. In direct-to-chip cooling, cold plates sit directly on the chips and remove heat at its source. Free cooling uses outside air to cool the data center instead of relying solely on mechanical cooling systems.

The Integration of Renewable Energy Sources in Data Centers

The integration of renewable energy sources in data centers is becoming increasingly important as organizations strive to reduce their carbon footprint and rely on sustainable energy sources. Traditional energy sources for data centers, such as fossil fuels, contribute to greenhouse gas emissions and are not sustainable in the long term.

Renewable energy sources, such as solar and wind power, offer a more sustainable alternative for powering data centers. By harnessing the power of the sun and wind, data centers can reduce their reliance on traditional energy sources and lower their environmental impact.

There are several advantages to integrating renewable energy sources in data centers. First, it can reduce operating costs over time by lowering reliance on purchased energy from traditional sources, whose prices can be high and volatile. Second, it improves the environmental sustainability of data centers by reducing greenhouse gas emissions. Finally, it enhances the reputation of organizations by demonstrating their commitment to sustainability and corporate social responsibility.

The Growing Trend of Modular Data Centers

Modular data centers are pre-fabricated units that contain all the necessary components of a traditional data center, including servers, cooling systems, power distribution units, and networking equipment. These units can be quickly deployed and easily scaled to meet the growing demand for data center capacity.

One of the main advantages of modular data centers is their flexibility. They can be deployed in remote locations or areas with limited space, allowing organizations to bring computing resources closer to where they are needed. Modular data centers also offer scalability, as additional units can be added as demand increases.

There are several examples of modular data centers in practice. For example, Microsoft has deployed modular data centers in shipping containers that can be transported to different locations as needed. These modular data centers have been used to support cloud services in remote areas or during events that require additional computing capacity.

The Impact of 5G Networks on Data Center Infrastructure

5G is the fifth generation of wireless technology, promising faster speeds, lower latency, and greater capacity than previous generations. This has significant implications for data center infrastructure because it enables new applications and services that require high-speed connectivity and real-time processing.

The importance of 5G networks for data centers lies in their ability to support the growing demand for data-intensive applications, such as autonomous vehicles, virtual reality, and the Internet of Things (IoT). These applications require low latency and high bandwidth, which can be provided by 5G networks.

The impact of 5G networks on data center infrastructure is twofold. First, data centers will need to handle the increased volume of data generated by 5G-enabled devices. This will require data centers to scale their capacity and optimize their infrastructure to handle the increased workload. Second, data centers will need to be located closer to the edge to reduce latency and ensure real-time processing for 5G applications.

The Future of Data Center Workforce: Automation and Skills Requirements

The current data center workforce consists of skilled professionals who are responsible for managing and maintaining data center operations. However, with the advancements in technology, particularly in automation and artificial intelligence, the role of the data center workforce is expected to change.

Automation has the potential to streamline data center operations and reduce the need for manual intervention. Tasks such as provisioning resources, monitoring performance, and troubleshooting can be automated, allowing data center operators to focus on more strategic activities.

While automation offers many advantages, it also raises concerns about job displacement. As more tasks become automated, there may be a reduced need for certain roles within the data center workforce. However, this also presents an opportunity for upskilling and reskilling. Data center professionals can acquire new skills in areas such as AI, cybersecurity, and cloud computing to adapt to the changing demands of the industry.

In conclusion, the future of data centers is characterized by advancements in technology that are reshaping the way they operate. Edge computing is bringing computation closer to where it is needed, AI is optimizing resource allocation and improving efficiency, and quantum computing has the potential to change how certain problems are processed. Cybersecurity is becoming increasingly important, cooling technologies are advancing to handle denser equipment, and renewable energy sources are being integrated to reduce environmental impact. Modular data centers offer flexibility and scalability, 5G networks are enabling new applications and services, and automation is changing the role of the data center workforce. It is crucial for businesses to stay up-to-date with these developments and adapt their data center strategies to remain competitive in the digital age.

FAQs

What are data centers?

Data centers are facilities that house computer systems and associated components, such as telecommunications and storage systems. They are used to store, process, and manage large amounts of data.

What is the future of data centers?

The future of data centers is focused on emerging technologies and innovations that will improve efficiency, reduce costs, and increase sustainability. These include artificial intelligence, edge computing, 5G networks, and renewable energy sources.

What is edge computing?

Edge computing is a distributed computing paradigm that brings computation and data storage closer to the location where it is needed, improving response times and reducing bandwidth usage. It is becoming increasingly important as more devices are connected to the internet of things (IoT).

What is artificial intelligence (AI) in data centers?

AI is being used in data centers to optimize energy usage, improve cooling efficiency, and automate routine tasks. It can also be used to predict and prevent equipment failures, reducing downtime and improving reliability.

What are 5G networks and how will they impact data centers?

5G networks are the next generation of mobile networks, offering faster speeds, lower latency, and greater capacity. They will enable new applications and services that require high bandwidth and low latency, such as autonomous vehicles and virtual reality. Data centers will play a critical role in supporting these new applications and services.

What are renewable energy sources and how are they being used in data centers?

Renewable energy sources, such as solar and wind power, are being used in data centers to reduce carbon emissions and improve sustainability. Some data centers aim to go further and cover all of their energy needs from renewable sources.

Data Center as a Service (DCaaS) is a cloud computing model that provides businesses with access to virtualized data center resources over the internet. It allows organizations to outsource their data center infrastructure and management to a third-party provider, eliminating the need for on-premises hardware and reducing operational costs. DCaaS offers a range of benefits, including cost savings, scalability, flexibility, reduced downtime, and improved disaster recovery.

The concept of DCaaS has its roots in the early days of cloud computing. As businesses started to realize the potential of virtualization and the benefits of moving their infrastructure to the cloud, the demand for more comprehensive and specialized services grew. This led to the emergence of DCaaS providers who offered businesses the ability to outsource their entire data center infrastructure and management.

In today’s fast-paced business environment, where agility and scalability are crucial for success, DCaaS has become an essential component of modern business operations. It allows organizations to focus on their core competencies while leaving the management of their data center infrastructure to experts. With DCaaS, businesses can quickly scale their infrastructure up or down based on their needs, without having to invest in expensive hardware or worry about maintenance and upgrades.

Key Takeaways

- DCaaS is a cloud-based service that provides businesses with access to data center resources without the need for physical infrastructure.

- DCaaS offers benefits such as cost savings, scalability, and flexibility for businesses of all sizes.

- DCaaS streamlines infrastructure management by providing a centralized platform for monitoring and managing data center resources.

- Key features of DCaaS include on-demand resource allocation, automated provisioning, and real-time monitoring.

- Understanding the DCaaS model is crucial for businesses looking to adopt this service and reap its benefits.

Benefits of DCaaS for Businesses

One of the primary benefits of DCaaS is cost savings. By outsourcing their data center infrastructure and management, businesses can avoid most capital expenditure (CapEx) on hardware and replace it with a predictable operational expenditure (OpEx). They no longer need to purchase expensive hardware or staff a dedicated team to run the facility. Instead, they pay a recurring fee to a DCaaS provider for the resources they need.

Scalability is another key advantage of DCaaS. With traditional data centers, businesses often face challenges when it comes to scaling their infrastructure up or down. They need to purchase additional hardware or decommission existing equipment, which can be time-consuming and costly. With DCaaS, businesses can easily scale their infrastructure based on their needs. They can quickly add or remove virtual machines, storage, and networking resources, allowing them to respond to changing business requirements more effectively.

Flexibility is also a significant benefit of DCaaS. Businesses can choose the specific services and resources they need, tailoring their infrastructure to meet their unique requirements. They can select the level of storage, networking, and security that best suits their needs, without having to invest in excess capacity. This flexibility allows businesses to optimize their infrastructure and ensure they are only paying for what they actually use.

Reduced downtime is another advantage of DCaaS. With traditional data centers, businesses often experience downtime due to hardware failures, maintenance activities, or power outages. This can result in significant financial losses and damage to the organization’s reputation. DCaaS providers typically have redundant infrastructure and robust disaster recovery mechanisms in place, ensuring high availability and minimizing the risk of downtime.

Improved disaster recovery is also a critical benefit of DCaaS. Data loss or system failures can have severe consequences for businesses. DCaaS providers typically have robust backup and recovery mechanisms in place, ensuring that data is protected and can be quickly restored in the event of a disaster. This allows businesses to minimize downtime and ensure business continuity.

How DCaaS Streamlines Infrastructure Management

DCaaS streamlines infrastructure management by providing businesses with centralized management, automation, monitoring and reporting, and resource optimization capabilities.

Centralized management is a key feature of DCaaS. Instead of managing multiple data centers spread across different locations, businesses can consolidate their infrastructure into a single centralized location. This allows for more efficient management and reduces the complexity associated with managing multiple data centers. With centralized management, businesses can easily provision and manage virtual machines, storage, networking resources, and security policies from a single interface.

Automation is another critical aspect of DCaaS. By automating routine tasks and processes, businesses can reduce the time and effort required to manage their infrastructure. DCaaS providers typically offer automation capabilities that allow businesses to automate tasks such as provisioning and deprovisioning virtual machines, scaling resources up or down, and applying security policies. This not only improves operational efficiency but also reduces the risk of human error.
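
The scaling automation described above often comes down to a simple rule evaluated on a schedule. The following sketch shows one such rule. The function name, thresholds, and limits are assumptions for illustration and do not correspond to any specific provider's API.

```python
def desired_vm_count(current, cpu_utilization,
                     scale_up_at=0.75, scale_down_at=0.30,
                     minimum=1, maximum=10):
    """Decide how many virtual machines should be running.

    cpu_utilization is the average across the group, from 0.0 to 1.0.
    One VM is added above scale_up_at and one is removed below
    scale_down_at; the result is clamped to [minimum, maximum].
    """
    if cpu_utilization > scale_up_at:
        target = current + 1
    elif cpu_utilization < scale_down_at:
        target = current - 1
    else:
        target = current
    return max(minimum, min(maximum, target))


print(desired_vm_count(current=3, cpu_utilization=0.90))  # busy: add one VM
print(desired_vm_count(current=3, cpu_utilization=0.10))  # idle: remove one VM
```

Keeping a gap between the two thresholds prevents the group from repeatedly adding and removing the same machine when load hovers around a single value.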

Monitoring and reporting are essential components of effective infrastructure management. DCaaS providers typically offer robust monitoring and reporting capabilities that allow businesses to track the performance and health of their infrastructure in real-time. This enables businesses to identify and address any issues or bottlenecks before they impact the performance or availability of their applications. Additionally, detailed reports provide businesses with insights into resource utilization, allowing them to optimize their infrastructure and ensure they are only paying for what they actually use.

Resource optimization is another key aspect of DCaaS. By leveraging virtualization technologies, businesses can optimize the utilization of their infrastructure resources. Virtualization allows multiple virtual machines to run on a single physical server, maximizing resource utilization and reducing hardware costs. Additionally, businesses can easily scale their resources up or down based on demand, ensuring they have the right amount of capacity at all times.
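
To show how consolidation raises utilization, the sketch below packs virtual machines onto as few physical hosts as it can using the first-fit decreasing heuristic. It considers a single resource (vCPUs) and uses hypothetical VM names and sizes. Real schedulers also weigh memory, storage, and placement constraints.

```python
def place_vms(vm_demands, host_capacity):
    """Assign VMs to hosts with the first-fit decreasing heuristic.

    vm_demands maps a VM name to the vCPUs it needs. Returns a list of
    hosts, each represented as the list of VM names placed on it.
    """
    hosts = []  # each entry is [remaining_capacity, [vm names]]
    by_size = sorted(vm_demands.items(), key=lambda kv: kv[1], reverse=True)
    for name, demand in by_size:
        if demand > host_capacity:
            raise ValueError(f"{name} does not fit on any host")
        for host in hosts:
            if host[0] >= demand:
                host[0] -= demand
                host[1].append(name)
                break
        else:
            # No existing host has room, so bring another one into use.
            hosts.append([host_capacity - demand, [name]])
    return [names for _, names in hosts]


vms = {"web": 4, "db": 8, "cache": 2, "batch": 6, "mail": 4}
placement = place_vms(vms, host_capacity=12)
print(len(placement), "hosts:", placement)
```

Five workloads that might once have occupied five servers fit on two hosts in this example, which is the hardware saving the paragraph above refers to.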

Key Features of DCaaS

DCaaS offers a range of key features that enable businesses to leverage the benefits of cloud computing for their data center infrastructure.

Virtualization is a fundamental feature of DCaaS. It allows businesses to run multiple virtual machines on a single physical server, maximizing resource utilization and reducing hardware costs. Virtualization also provides businesses with the flexibility to scale their resources up or down based on demand, ensuring they have the right amount of capacity at all times.

Storage is another critical feature of DCaaS. Businesses can leverage scalable storage solutions provided by DCaaS providers to store and manage their data. These solutions typically offer high availability, data redundancy, and data protection mechanisms to ensure data integrity and availability.

Networking is also a key feature of DCaaS. DCaaS providers offer networking solutions that allow businesses to connect their virtual machines and other resources to the internet or private networks. These solutions typically include features such as load balancing, firewalling, and virtual private networking (VPN), enabling businesses to securely connect their infrastructure to other networks.

Security is a critical aspect of DCaaS. DCaaS providers typically offer robust security mechanisms to protect businesses’ data and infrastructure. These mechanisms include features such as firewalls, intrusion detection and prevention systems (IDPS), and encryption. Additionally, DCaaS providers often have stringent security policies and procedures in place to ensure the confidentiality, integrity, and availability of their customers’ data.

Backup and recovery is another key feature of DCaaS. DCaaS providers typically offer robust backup and recovery mechanisms that allow businesses to protect their data and quickly restore it in the event of a disaster. These mechanisms include features such as regular backups, offsite storage, and data replication.

Understanding the DCaaS Model

To effectively leverage DCaaS, businesses need to understand the various aspects of the DCaaS model, including service level agreements (SLAs), pricing models, service delivery models, and service customization options.

Service level agreements (SLAs) are contractual agreements between businesses and DCaaS providers that define the level of service that will be provided. SLAs typically include metrics such as uptime guarantees, response times for support requests, and performance guarantees. It is essential for businesses to carefully review SLAs before signing up with a DCaaS provider to ensure that the service meets their specific requirements.
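
When reviewing an uptime guarantee, it helps to translate the percentage into the downtime it actually permits. The short calculation below does that for a billing period, assuming a 30-day month. The function name and the example percentages are illustrative only.

```python
def allowed_downtime_minutes(uptime_percent, days=30):
    """Minutes of downtime permitted by an uptime guarantee over `days`."""
    return (1 - uptime_percent / 100) * days * 24 * 60


for sla in (99.0, 99.9, 99.99):
    minutes = allowed_downtime_minutes(sla)
    print(f"{sla}% uptime allows about {minutes:.1f} minutes per 30 days")
```

The difference between 99.9% and 99.99% looks small on paper, but it is the difference between roughly 43 minutes and roughly 4 minutes of permitted downtime per month.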

Pricing models for DCaaS can vary depending on the provider and the specific services being offered. Common pricing models include pay-as-you-go, where businesses pay based on their actual usage, and fixed monthly pricing, where businesses pay a fixed fee regardless of their usage. It is important for businesses to carefully consider their usage patterns and requirements when selecting a pricing model to ensure they are getting the best value for their money.
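
A quick way to compare the two pricing models is to find the usage level at which they cost the same. The sketch below does this for a single resource billed by the hour. The rate and fee are hypothetical figures, not real provider prices.

```python
def pay_as_you_go_cost(hours_used, rate_per_hour):
    """Monthly cost when billed only for actual usage."""
    return hours_used * rate_per_hour


def break_even_hours(fixed_monthly_fee, rate_per_hour):
    """Usage above which the fixed monthly plan becomes the cheaper option."""
    return fixed_monthly_fee / rate_per_hour


def cheaper_plan(hours_used, fixed_monthly_fee, rate_per_hour):
    """Return the name of the cheaper plan for the given usage."""
    if pay_as_you_go_cost(hours_used, rate_per_hour) < fixed_monthly_fee:
        return "pay-as-you-go"
    return "fixed"


# Hypothetical prices: 1.25 per hour on demand vs. a flat 500 per month.
print(break_even_hours(500, 1.25))
print(cheaper_plan(hours_used=200, fixed_monthly_fee=500, rate_per_hour=1.25))
```

Workloads that run only part of the month tend to favor pay-as-you-go, while steady, always-on workloads usually sit above the break-even point and favor a fixed fee.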

Service delivery models for DCaaS can also vary depending on the provider. Common service delivery models include Infrastructure as a Service (IaaS), where businesses have access to virtualized infrastructure resources, and Platform as a Service (PaaS), where businesses have access to a complete development and deployment platform. It is important for businesses to understand the specific service delivery model being offered by a DCaaS provider to ensure it aligns with their needs.

Service customization is another important aspect of the DCaaS model. Businesses should look for DCaaS providers that offer customization options that allow them to tailor the service to their specific requirements. This could include options such as selecting the level of storage, networking, and security, as well as the ability to integrate with existing systems and applications.

DCaaS vs Traditional Data Center Management

DCaaS differs from traditional data center management in several key ways, including infrastructure ownership, management responsibilities, cost structure, and scalability.

In terms of infrastructure ownership, traditional data center management involves businesses owning and maintaining their own hardware and infrastructure. This requires significant upfront capital investment and ongoing operational costs. In contrast, with DCaaS, businesses do not own the underlying infrastructure. Instead, they lease the infrastructure from a third-party provider, eliminating the need for upfront capital investment and reducing operational costs.

In terms of management responsibilities, traditional data center management requires businesses to handle all aspects of infrastructure management, including hardware procurement, installation, maintenance, and upgrades. This can be time-consuming and resource-intensive. With DCaaS, these management responsibilities are outsourced to the provider. Businesses can focus on their core competencies while leaving the management of their infrastructure to experts.

In terms of cost structure, traditional data center management involves significant upfront capital expenditure (CapEx) for hardware procurement and ongoing operational expenditure (OpEx) for maintenance and upgrades. With DCaaS, businesses pay a monthly fee to the provider, which covers the cost of infrastructure, management, and support. This allows businesses to shift from a CapEx to an OpEx model, reducing upfront costs and providing more predictable and manageable expenses.
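
The trade-off between the two cost structures can be sketched as a comparison of cumulative spending over time. The figures below are hypothetical, and the model ignores hardware refresh cycles, financing, and staff costs, so it only illustrates the shape of the comparison.

```python
import math


def cumulative_on_prem(months, upfront_capex, monthly_opex):
    """Total spent on an owned data center after `months`."""
    return upfront_capex + months * monthly_opex


def cumulative_dcaas(months, monthly_fee):
    """Total spent on a DCaaS subscription after `months`."""
    return months * monthly_fee


def crossover_month(upfront_capex, monthly_opex, monthly_fee):
    """First month in which owning is no more expensive than subscribing.

    Returns None when the subscription never costs more per month than
    running owned hardware, so the upfront cost is never recovered.
    """
    monthly_saving = monthly_fee - monthly_opex
    if monthly_saving <= 0:
        return None
    return math.ceil(upfront_capex / monthly_saving)


# Hypothetical figures: 120,000 upfront and 2,000 per month to own,
# compared with a 6,000 per month subscription.
print(crossover_month(120_000, 2_000, 6_000))
```

Under these assumed numbers, the subscription is cheaper for the first two and a half years, which is why the OpEx model appeals to organizations that value low upfront cost or expect their needs to change before the crossover point.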

In terms of scalability, traditional data center management makes it hard to scale infrastructure up or down, because each change means purchasing additional hardware or decommissioning existing equipment. With DCaaS, businesses add or remove virtual machines, storage, and networking resources on demand, so capacity tracks changing business requirements much more closely.

Choosing the Right DCaaS Provider

When choosing a DCaaS provider, businesses should consider several factors, including reliability, security, scalability, support, and cost.

Reliability is a critical factor to consider when selecting a DCaaS provider. Businesses should look for providers that have a proven track record of delivering high availability and uptime. This can be determined by reviewing the provider’s SLAs and customer testimonials.

Security is another important consideration. Businesses should ensure that the DCaaS provider has robust security mechanisms in place to protect their data and infrastructure. This includes features such as firewalls, intrusion detection and prevention systems (IDPS), encryption, and physical security measures.

Scalability is also a key factor to consider. Businesses should choose a DCaaS provider that offers flexible scalability options, allowing them to easily scale their infrastructure up or down based on their needs. This includes the ability to add or remove virtual machines, storage, and networking resources as required.

Support is another critical consideration. Businesses should ensure that the DCaaS provider offers responsive and knowledgeable support services. This includes 24/7 technical support, proactive monitoring and maintenance, and regular updates and patches.

Cost is also an important factor to consider when selecting a DCaaS provider. Businesses should carefully review the pricing models and compare them to their specific usage patterns and requirements. It is important to consider both the upfront costs and the ongoing operational costs to ensure that the service is affordable and provides good value for money.

DCaaS and Cloud Computing

DCaaS and cloud computing are closely related concepts, with DCaaS being a specific implementation of cloud computing for data center infrastructure. DCaaS leverages the principles and technologies of cloud computing to provide businesses with virtualized data center resources over the internet.

The relationship between DCaaS and cloud computing lies in the shared characteristics and benefits they offer. Both DCaaS and cloud computing provide businesses with access to scalable, flexible, and cost-effective infrastructure resources. They allow businesses to leverage virtualization technologies to maximize resource utilization and reduce hardware costs. They also provide businesses with the ability to scale their infrastructure up or down based on demand, ensuring they have the right amount of capacity at all times.

By combining DCaaS with cloud computing, businesses can further enhance the benefits they derive from both models. For example, businesses can leverage DCaaS for their data center infrastructure while using cloud computing for other aspects of their IT infrastructure, such as software development and deployment. This allows businesses to take advantage of the scalability, flexibility, and cost savings offered by both models.

However, integrating DCaaS and cloud computing can also present challenges. Businesses need to carefully consider factors such as data security, compliance, and integration with existing systems and applications. They need to ensure that their data is protected when it is transferred between the DCaaS provider’s infrastructure and the cloud computing platform. They also need to ensure that their applications can seamlessly integrate with both the DCaaS infrastructure and the cloud computing platform.

Security and Compliance in DCaaS

Security and compliance are critical considerations when it comes to DCaaS. Businesses need to ensure that their data is protected and that they meet any regulatory or industry-specific compliance requirements.

Security is of utmost importance in DCaaS. Beyond the technical controls a provider should offer, such as firewalls, intrusion detection and prevention systems (IDPS), encryption, and physical security measures, businesses should confirm that the provider enforces stringent security policies and procedures that preserve the confidentiality, integrity, and availability of their data.

Compliance is another important consideration in DCaaS. Regulatory or industry-specific requirements may cover areas such as data privacy, data residency, and data protection. Businesses should carefully review the compliance capabilities of the DCaaS provider and ensure that they align with their specific requirements.

Best practices for securing DCaaS infrastructure include implementing strong access controls, regularly patching and updating systems, monitoring for security incidents, and conducting regular security audits. Businesses should also consider implementing multi-factor authentication, encryption, and data loss prevention mechanisms to further enhance the security of their infrastructure.

Compliance considerations for DCaaS users include understanding the specific compliance requirements that apply to their industry or region, ensuring that the DCaaS provider has appropriate certifications and accreditations, and regularly reviewing and updating their compliance policies and procedures.

Future Trends in DCaaS

The future of DCaaS is expected to be shaped by emerging technologies and trends that will impact both providers and users.

One of the key trends in DCaaS is the increasing adoption of edge computing. Edge computing involves processing data closer to the source or the end user, rather than relying on a centralized cloud infrastructure. This trend is driven by the need for real-time data processing and low-latency applications, especially in industries such as autonomous vehicles, IoT, and augmented reality. By bringing computing resources closer to the edge of the network, organizations can reduce the time it takes to transmit data to the cloud and receive a response, enabling faster decision-making and improved user experiences. Additionally, edge computing can help alleviate bandwidth constraints by offloading processing tasks from the cloud to local devices, reducing network congestion and improving overall system performance. As a result, DCaaS providers are increasingly offering edge computing capabilities to meet the growing demand for faster and more responsive applications.


FAQs

What is Data Center as a Service (DCaaS)?

Data Center as a Service (DCaaS) is a cloud-based service that provides businesses with access to a virtual data center infrastructure. It allows businesses to outsource their data center infrastructure management to a third-party provider, who manages the hardware, software, and networking components of the data center.

What are the benefits of using DCaaS?

The benefits of using DCaaS include reduced costs, increased scalability, improved security, and simplified infrastructure management. DCaaS allows businesses to pay only for the resources they need, and to easily scale up or down as their needs change. It also provides businesses with access to enterprise-level security and compliance measures, and frees up IT staff to focus on more strategic initiatives.

How does DCaaS work?

DCaaS works by providing businesses with access to a virtual data center infrastructure that is hosted and managed by a third-party provider. The provider manages the hardware, software, and networking components of the data center, and provides businesses with access to the resources they need through a web-based portal or API. Businesses can then use these resources to run their applications and store their data.

What types of businesses can benefit from using DCaaS?

DCaaS can benefit businesses of all sizes and industries, but it is particularly useful for businesses that have limited IT resources or that need to quickly scale their infrastructure up or down. It is also useful for businesses that must comply with strict security and compliance regulations, as DCaaS providers typically offer enterprise-level security measures.

What are some examples of DCaaS providers?

Commonly cited examples include large cloud infrastructure providers such as Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform, and IBM Cloud. There are also many smaller, specialized DCaaS providers that cater to specific industries or use cases.

Colocation is the practice of housing privately owned servers and networking equipment in a third-party data center facility. These facilities provide businesses with the physical space, power, cooling, and network connectivity necessary to operate their IT infrastructure. Colocation services offer numerous benefits for businesses, including improved reliability, better connectivity, and cost savings.

Colocation services work by allowing businesses to rent space within a data center facility. The business brings its own servers and networking equipment to the facility and installs them in designated racks or cabinets. The data center provides the necessary power, cooling, and network connectivity to ensure that the equipment operates optimally. This arrangement allows businesses to take advantage of the data center’s robust infrastructure without having to invest in building and maintaining their own facility.

Key Takeaways

- Colocation services provide businesses with a secure and reliable way to store their IT infrastructure off-site.

- Benefits of colocation services include improved reliability, connectivity, and data security.

- There are different types of colocation services, including wholesale, retail, and cloud colocation.

- Choosing the right colocation provider is crucial for meeting your business needs and saving money on IT infrastructure.

- Colocation services play a critical role in disaster recovery and business continuity planning.

Benefits of Colocation Services for Businesses

One of the key benefits of colocation services is improved reliability. Data centers are designed with redundant power and cooling systems to ensure that servers and networking equipment remain operational even in the event of a power outage or cooling failure. This level of redundancy is often beyond what most businesses can afford to implement in their own facilities. By colocating their equipment in a data center, businesses can benefit from this high level of reliability.
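The effect of redundancy on reliability can be illustrated with a short calculation. This is a simplified sketch that assumes each redundant unit (for example, a power feed or cooling unit) fails independently and that one working unit is enough to carry the load. The function name and the 99% figure are hypothetical, and real facilities have shared failure modes that this model ignores.

```python
def parallel_availability(unit_availability: float, units: int) -> float:
    """Availability of a group of redundant units where any single working
    unit keeps the service running, assuming independent failures."""
    if not 0.0 <= unit_availability <= 1.0:
        raise ValueError("unit_availability must be between 0 and 1")
    if units < 1:
        raise ValueError("units must be at least 1")
    # The group is down only when every unit is down at the same time.
    return 1.0 - (1.0 - unit_availability) ** units


# Hypothetical example: each unit is available 99% of the time.
for n in (1, 2, 3):
    print(f"{n} unit(s): {parallel_availability(0.99, n):.6f}")
```

Under these assumptions, adding a second unit reduces expected unavailability from 1% to 0.01%, which shows why redundant systems are central to data center design.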

Another advantage of colocation services is better connectivity. Data centers are typically located in areas with excellent network connectivity, allowing businesses to take advantage of high-speed internet connections and direct connections to major internet service providers (ISPs). This ensures that businesses have fast and reliable access to the internet, which is crucial for running online applications and services.

Colocation services also offer cost savings for businesses. Building and maintaining a dedicated data center facility can be prohibitively expensive for many organizations. By utilizing colocation services, businesses can avoid these upfront costs and instead pay a monthly fee for the space, power, cooling, and network connectivity they need. This allows businesses to allocate their resources more efficiently and focus on their core competencies.

How Colocation Services Can Help Improve Data Security

Data security is a top concern for businesses, and colocation services can help address this issue. Data centers employ physical security measures to protect the equipment housed within their facilities. These measures may include 24/7 security personnel, video surveillance, biometric access controls, and locked cabinets or cages for individual customers. By colocating their equipment in a data center, businesses can benefit from these robust physical security measures.

In addition to physical security, the redundant power and cooling systems that data centers provide help minimize the risk of data loss or downtime due to infrastructure failures.

Furthermore, data centers often comply with industry regulations and standards related to data security. This can be particularly important for businesses that handle sensitive customer data or operate in regulated industries such as healthcare or finance. Colocating equipment in a compliant data center helps businesses meet the facility-level security requirements, although they remain responsible for securing their own systems and data.

Understanding the Different Types of Colocation Services

There are different types of colocation services available to businesses, each catering to different needs and requirements.

Wholesale colocation refers to the rental of large amounts of space within a data center facility. This type of colocation is typically used by large enterprises or service providers that require significant amounts of space for their IT infrastructure. Wholesale colocation offers flexibility and scalability, allowing businesses to expand their infrastructure as needed.

Retail colocation, on the other hand, refers to the rental of smaller amounts of space within a data center facility. This type of colocation is suitable for small and medium-sized businesses that have more modest IT infrastructure needs. Retail colocation often includes additional services such as remote hands support, which provides assistance with tasks such as server reboots or hardware replacements.

Cloud colocation combines the benefits of colocation services with cloud computing. In this model, businesses colocate their servers and networking equipment in a data center facility, while also leveraging cloud services for additional computing resources. Cloud colocation offers the flexibility and scalability of the cloud, while also providing the physical security and reliability of a data center.

Choosing the Right Colocation Provider for Your Business Needs

When selecting a colocation provider, there are several factors to consider. First and foremost, businesses should assess their specific needs and requirements. This includes determining the amount of space, power, cooling, and network connectivity they require. Businesses should also consider their growth plans and ensure that the colocation provider can accommodate their future needs.
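A rough sizing estimate can make this needs assessment more concrete. The sketch below projects server count, rack count, and power draw from a handful of inputs. The function name, parameters, and all sample numbers are hypothetical, and actual requirements depend on the equipment and on the provider's rack and power specifications.

```python
import math


def colocation_requirements(servers: int,
                            watts_per_server: float,
                            servers_per_rack: int,
                            annual_growth: float = 0.0,
                            years: int = 0) -> dict:
    """Estimate projected servers, racks, and power (kW) after a growth period."""
    # Compound the server count by the assumed yearly growth rate.
    projected_servers = math.ceil(servers * (1 + annual_growth) ** years)
    racks = math.ceil(projected_servers / servers_per_rack)
    power_kw = projected_servers * watts_per_server / 1000
    return {"servers": projected_servers, "racks": racks, "power_kw": power_kw}


# Hypothetical inputs: 40 servers today, 350 W each, 20 servers per rack,
# growing 20% per year over a three-year contract.
today = colocation_requirements(40, 350, 20)
in_three_years = colocation_requirements(40, 350, 20, annual_growth=0.2, years=3)
print("Today:", today)
print("In three years:", in_three_years)
```

Comparing the two results gives a starting point for asking a provider whether it can accommodate the projected space and power within the same facility.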

It is also important to evaluate the reliability and security measures of the colocation provider. This includes assessing the facility’s power and cooling systems, as well as its physical security measures. Additionally, businesses should inquire about the provider’s compliance with industry regulations and standards related to data security.

Another factor to consider is the level of customer support provided by the colocation provider. Businesses should inquire about the provider’s remote hands support, which can be crucial in case of hardware failures or other issues that require on-site assistance. It is also important to assess the provider’s responsiveness and availability for support requests.

How Colocation Services Can Help Businesses Save Money on IT Infrastructure